In the DAGGER algorithm, how does one determine the number of samples required for one iteration of the training loop?

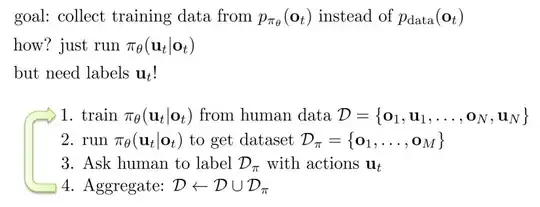

Looking at the picture above, I understand initially, during the 1st iteration, the dataset D comes from pre-recorded samples and is very large (in the tens of thousands).

however, i'm confused about step 2. How large should Dπ be?

This post explains that it is good idea to "anneal out" D0 during the subsequent iterations, which I think means having two separate datasets, and sample proportionally from each during step 1 (which implies in step 4, it is Dπ that is being aggregated, not D), in that case, is there a maximum size for Dπ?

I've already looked at What does the number of required expert demonstrations in Imitation Learning depend on?, which seems to explain Dπ can be determined using a formula. However, the formula involves terms such as discount factor γ and various other terms which I don't think applies in this case. The paper I'm trying to implement is End-to-end Driving via Conditional Imitation Learning

Thanks for any help!