A little bit of context: I have a classification algorithm based on a mathematical discriminator. I am not applying any machine learning or AI technique, just a moving window and several relative comparisons. This is a signal segmentation problem in which I need to detect three states based on their mathematical properties or morphology. In addition, I need to do this with minimum delay, in near real time.

The output of my algorithm is a labelled signal, with its different sections labelled according to those mathematical descriptors. The reference model was labelled by professionals who tagged the data for me, and I am in charge of creating an automatic labelling tool for it.

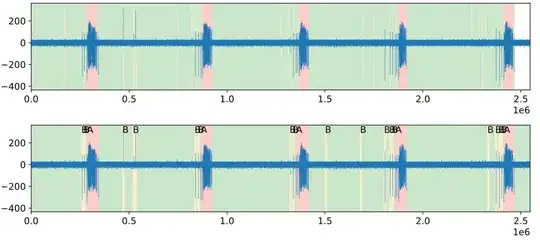

Here you can see the reference model on top and the output of my algorithm on the bottom.

My question is this: I have defined a "measure of success" function that compares the two signals sample by sample, but it is not very accurate, and I would like to know what the state of the art is for this type of classification problem.

My "measure of success" function:

    def measure_of_success(signal_size, result_labels, model_labels):
        """Measure of success function"""
        incorrect_matching = 0
        for index in range(signal_size):
            expected_section = identify_section_from_sample(model_labels, index)
            result_section = identify_section_from_sample(result_labels, index)
            if expected_section != result_section:
                incorrect_matching += 1
        matching = (1 - (incorrect_matching / signal_size)) * 100
        return matching

This function performs a sample-by-sample comparison. My problem is that the labels in the upper picture were set by hand, and it does not matter how accurately each section is delimited, only that the event has been detected for a considerable time. This is a bit confusing, but basically, if I detect the same "b" event and it coincides in time with the majority of that event in the reference model, it should count as 100% correct. On the other hand, I feel this measure of success is a bit simplistic, and there might be better ways, such as a confusion matrix or something similar.
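To make the "majority overlap" idea concrete, here is a minimal sketch of what I have in mind. It is not my actual code. It assumes both signals are available as plain per-sample label sequences of equal length (for example a list of `"a"`, `"b"`, `"c"`), which is not how `identify_section_from_sample` accesses them. The names `to_segments`, `event_level_score`, `confusion_counts` and the `min_overlap` threshold are hypothetical and only for illustration.

```python
from collections import Counter
from itertools import groupby


def to_segments(labels):
    """Collapse per-sample labels into (label, start, end) runs, end exclusive."""
    segments = []
    start = 0
    for label, run in groupby(labels):
        length = sum(1 for _ in run)
        segments.append((label, start, start + length))
        start += length
    return segments


def event_level_score(model_labels, result_labels, min_overlap=0.5):
    """Percentage of reference events that the result detects.

    Assumption: a reference event counts as detected when more than
    `min_overlap` of its samples carry the same label in the result.
    """
    segments = to_segments(model_labels)
    if not segments:
        return 0.0
    detected = 0
    for label, start, end in segments:
        agreeing = sum(1 for i in range(start, end) if result_labels[i] == label)
        if agreeing / (end - start) > min_overlap:
            detected += 1
    return 100.0 * detected / len(segments)


def confusion_counts(model_labels, result_labels):
    """Per-sample confusion matrix as a Counter keyed by (expected, predicted)."""
    return Counter(zip(model_labels, result_labels))
```

For instance, with `model = list("aaaabbbbbbcccc")` and `result = list("aaabbbbbbbbccc")`, two boundary samples differ, so a sample-by-sample comparison would report about 85.7%, while `event_level_score(model, result)` counts all three reference events as detected. Note that this sketch only iterates over reference events, so spurious extra events in the result are not penalised; the per-sample counts from `confusion_counts` would at least show which states get confused with which.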

What would you suggest I do in order to evaluate the success of the classification?

Thanks!