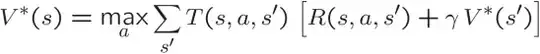

The value iteration algorithm defines the following update rule (reference is slide 11 in this MIT course):

$$V_{i+1}(s) = \max_{a}\{R(s,a) + \gamma E_{s'\sim T(.|s,a)} V_i(s')\}$$

for all states $s$, with $E_{s'\sim T(.|s,a)} V_i(s') = \sum_{s'} T(s'|s,a)V_i(s')$. I adapted the transition probability name from $P$ to $T$ to conform with your notation.

Note the index $i$ for the value function on the right hand side of the equations. That's what enables you to do the computations: the value of the state at iteration $i+1$ is calculated recursively from the values at iteration $i$.

We also have $\max_a R(a,s) =$ reward for reaching the state, given that the reward assigned to each state doesn't depend on the action which was taken in your example. Therefore the value iteration update rule simplifies to:

$$V_{i+1}(s) = R(s) + \gamma \max_a \{\sum_{s'} T(s'|s,a)V_i(s')\}$$

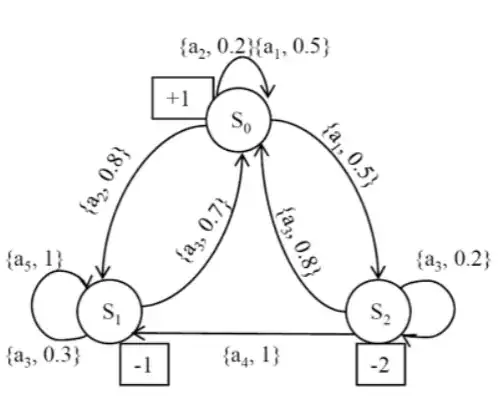

If you initialize $V_0(s)$ to the value of the reward function for that state, we have: $V_0(S_0) = 1$, $V_0(S_1) = -1$, $V_0(S_2) = -2$.

Then for $S_0$:

$$\begin{align} V_1(S_0) = R(S_0) + \gamma \max \{ & T(S_0| a_1, S_0) V_0(S_0) + T(S_2 | a_1, S_0)V_0(S_2); \\ & T(S_0 | a_2, S_0) V_0(S_0) + T(S_1| a_2, S_0) V_0(S_1)\}\end{align}$$

where the first term in the $\max$ is the expected value if you take action $a_1$ from state $S_0$ and the second term is the expected value if you take action $a_2$ from state $S_0$.

This gives, with $\gamma = 0.2$

$$\begin{align}V_1(S_0) & = 1 + 0.2 * \max \{0.5*1 + 0.5 *-2; 0.2*1 + 0.8*-1\} \\ & = 1 + 0.2 * \max \{-0.5; -0.6\} \\ & = 1 - 0.2*0.5 = 0.9\end{align}$$.

Similarly for $S_1$:

$$\begin{align} V_1(S_1) = R(S_1) + \gamma \max \{ & T(S_1| a_3, S_1) V_0(S_1) + T(S_0 | a_3, S_1)V_0(S_0); \\ & T(S_1 | a_5, S_1) V_0(S_1)\}\end{align}$$

which gives:

$$\begin{align}V_1(S_1) & = -1 + 0.2 * \max \{0.3*-1 + 0.7*1; 1*-1\} \\ & = -1 + 0.2 * \max \{0.4; -1\} \\ & = -1 + 0.2*0.4 = -0.92\end{align}$$

And finally for $S_2$:

$$\begin{align} V_1(S_2) = R(S_2) + \gamma \max \{ & T(S_2| a_3, S_2) V_0(S_2) + T(S_0 | a_3, S_2)V_0(S_0); \\ & T(S_1 | a_4, S_2) V_0(S_1)\}\end{align}$$

which gives:

$$\begin{align}V_1(S_2) & = -2 + 0.2 * \max \{0.2*-2 + 0.8*1; 1*-1\} \\ & = -2 + 0.2 * \max \{0.4; -1\} \\ & = -2 + 0.2*0.4 = -1.92\end{align}$$

To get the values at the next iteration, you can reuse the equations above incrementing the indices by one.