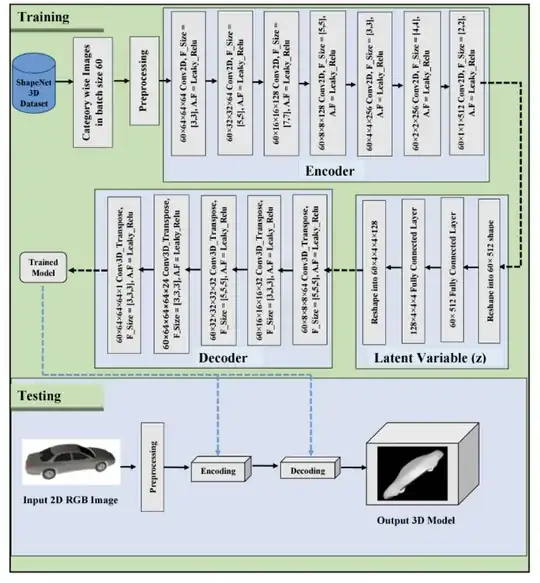

I am currently working on an ML model that converts 2D images into 3D models. As a reference I have used a research paper that includes a diagram of the layer flow, and I have tried to implement it, but without success. I would really like to know where I am going wrong; any help and suggestions are welcome. Thanks!

Reference image for the model (the diagram above):

My code:

```python
# Imports were not included in the original snippet; tf.keras is assumed here
from tensorflow.keras.layers import (Conv2D, Conv3DTranspose, Dense, Input,
                                     MaxPooling2D, Reshape, UpSampling3D)
from tensorflow.keras.models import Model

image = Input(shape=(None, None, 3))

# Encoder
l1 = Conv2D(64, (3,3), strides = (2), padding='same', activation='leaky_relu')(image)
l2 = MaxPooling2D()(l1)
l3 = Conv2D(32, (5,5), strides = (2), padding='same', activation='leaky_relu')(l2)
l4 = MaxPooling2D(padding='same')(l3)
l5 = Conv2D(16, (7,7), strides = (2), padding='same', activation='leaky_relu')(l4)
l6 = MaxPooling2D(padding='same')(l5)
l7 = Conv2D(8, (5, 5), strides = (2), padding = 'same', activation = 'leaky_relu')(l6)
l8 = MaxPooling2D(padding='same')(l7)
l9 = Conv2D(4, (3, 3), strides = (2), padding = 'same', activation = 'leaky_relu')(l8)
l10 = MaxPooling2D(padding='same')(l9)
l11 = Conv2D(2, (4, 4), strides = (2), padding = 'same', activation = 'leaky_relu')(l10)
l12 = MaxPooling2D(padding='same')(l11)
l13 = Conv2D(1, (2, 2), strides = (2), padding = 'same', activation = 'leaky_relu')(l12)

# latent variable z
l14 = Reshape((60,512))(l13)
l15 = Dense((512),activation = 'leaky_relu')(l14)
l16 = Dense((128*4*4*4), activation = 'leaky_relu')(l15)
l17 = Reshape((60,4,4,4,128))(l16)

#Decoder
l18 = UpSampling3D(size = (3,3,3))(l17) #-->throws error->IndexError: list index out of range
l19 = Conv3DTranspose(60, (8, 8, 8), strides = (64), padding='same', activation = 'leaky_relu') (l17)
l20 = UpSampling3D((3,3,3))(l19)
l21 = Conv3DTranspose(60, (16,16,16), strides =(32), padding='same', activation = 'leaky_relu')(l20)
l22 = UpSampling3D((3,3,3))(l21)
l23 = Conv3DTranspose(60, (32, 32, 32), strides = (32), padding='same', activation = 'leaky_relu')(l22)
l24 = UpSampling3D((3,3,3))(l23)
l25 = Conv3DTranspose(60, (64, 64, 64), strides = (24), padding='same', activation = 'leaky_relu')(l24)
l26 = UpSampling3D((3,3,3))(l25)
l27 = Conv3DTranspose(60, (64, 64, 64), strides = (1), padding='same', activation = 'leaky_relu')(l26)

model3D = Model(image, l27)
```
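Separately from the rank error, it may help to trace the spatial sizes by hand. The plain-Python sketch below needs no TensorFlow; `same`, `trace_encoder`, `trace_decoder` and `DECODER_FACTORS` are hypothetical helper names. It assumes a square n × n input and Keras's usual shape rules: a `'same'`-padded `Conv2D` or `MaxPooling2D` with stride s gives ceil(n / s), the default 2 × 2 `'valid'` `MaxPooling2D` gives floor(n / 2), and both a `'same'`-padded `Conv3DTranspose` with stride s and an `UpSampling3D` with size s give n · s. For the decoder it assumes `l17` were a 4 × 4 × 4 grid (that is, without the extra 60 axis), and it follows the code as written, where `l19` is applied to `l17` rather than `l18`.

```python
import math


def same(n, stride):
    """Side length after a 'same'-padded Conv2D/MaxPooling2D: ceil(n / stride)."""
    return math.ceil(n / stride)


def trace_encoder(n):
    """Side length after each encoder layer l1..l13 for an n x n input."""
    sizes = [same(n, 2)]              # l1: Conv2D, strides=2, padding='same'
    sizes.append(sizes[-1] // 2)      # l2: MaxPooling2D() with default 'valid' padding
    for _ in range(5):                # l3..l12: Conv2D(strides=2) + MaxPooling2D(padding='same')
        sizes.append(same(sizes[-1], 2))
        sizes.append(same(sizes[-1], 2))
    sizes.append(same(sizes[-1], 2))  # l13: Conv2D(1, strides=2, padding='same')
    return sizes


# l19..l27 as written: Conv3DTranspose strides and UpSampling3D sizes, in order
DECODER_FACTORS = [64, 3, 32, 3, 32, 3, 24, 3, 1]


def trace_decoder(side=4):
    """Side length after each decoder layer, starting from an assumed 4x4x4 grid."""
    sizes = []
    for factor in DECODER_FACTORS:
        side *= factor                # 'same' Conv3DTranspose and UpSampling3D both multiply
        sizes.append(side)
    return sizes


enc = trace_encoder(256)
print(enc)                         # [128, 64, 32, 16, 8, 4, 2, 1, 1, 1, 1, 1, 1]
print(enc[-1] ** 2 * 1, 60 * 512)  # 1 30720  (l13 has a single channel)
print(trace_decoder()[-1])         # 509607936
```

With a 256 × 256 input, the encoder shrinks each side to 1 by `l13`, leaving one value per image, while `Reshape((60, 512))` needs 60 × 512 = 30,720 values. Because the `Input` is declared as `(None, None, 3)`, Keras generally cannot check that element count when the model is built. In the other direction, each stride of s in a `'same'`-padded `Conv3DTranspose` multiplies the side length by s, so the decoder as written grows each side to 509,607,936 rather than to a small voxel grid.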

Error:

```
ValueError Traceback (most recent call last)

/tmp/ipykernel_17/3800219535.py in <module>
34 #Decoder
35 l18 = UpSampling3D(size = (3,3,3))(l17) #-->throws error->IndexError: list index out of range
---> 36 l19 = Conv3DTranspose(60, (8, 8, 8), strides = (64), padding='same', activation = 'leaky_relu') (l17)
37 l20 = UpSampling3D((3,3,3))(l19)
38 l21 = Conv3DTranspose(60, (16,16,16), strides =(32), padding='same', activation = 'leaky_relu')(l20)

/opt/conda/lib/python3.7/site-packages/keras/engine/base_layer.py in __call__(self, *args, **kwargs)
975 if _in_functional_construction_mode(self, inputs, args, kwargs, input_list):
976 return self._functional_construction_call(inputs, args, kwargs,
--> 977 input_list)
978
979 # Maintains info about the `Layer.call` stack.

/opt/conda/lib/python3.7/site-packages/keras/engine/base_layer.py in _functional_construction_call(self, inputs, args, kwargs, input_list)
1113 # Check input assumptions set after layer building, e.g. input shape.
1114 outputs = self._keras_tensor_symbolic_call(
-> 1115 inputs, input_masks, args, kwargs)
1116
1117 if outputs is None:

/opt/conda/lib/python3.7/site-packages/keras/engine/base_layer.py in _keras_tensor_symbolic_call(self, inputs, input_masks, args, kwargs)
846 return tf.nest.map_structure(keras_tensor.KerasTensor, output_signature)
847 else:
--> 848 return self._infer_output_signature(inputs, args, kwargs, input_masks)
849
850 def _infer_output_signature(self, inputs, args, kwargs, input_masks):

/opt/conda/lib/python3.7/site-packages/keras/engine/base_layer.py in _infer_output_signature(self, inputs, args, kwargs, input_masks)
884 # overridden).
885 # TODO(kaftan): do we maybe_build here, or have we already done it?
--> 886 self._maybe_build(inputs)
887 inputs = self._maybe_cast_inputs(inputs)
888 outputs = call_fn(inputs, *args, **kwargs)

/opt/conda/lib/python3.7/site-packages/keras/engine/base_layer.py in _maybe_build(self, inputs)
2657 # operations.
2658 with tf_utils.maybe_init_scope(self):
-> 2659 self.build(input_shapes) # pylint:disable=not-callable
2660 # We must set also ensure that the layer is marked as built, and the build
2661 # shape is stored since user defined build functions may not be calling

/opt/conda/lib/python3.7/site-packages/keras/layers/convolutional.py in build(self, input_shape)
1546 if len(input_shape) != 5:
1547 raise ValueError('Inputs should have rank 5, received input shape:',
-> 1548 str(input_shape))
1549 channel_axis = self._get_channel_axis()
1550 if input_shape.dims[channel_axis].value is None:

ValueError: ('Inputs should have rank 5, received input shape:', '(None, 60, 4, 4, 4, 128)')
```
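The traceback ends in `Conv3DTranspose.build`, which requires a rank-5 input of shape (batch, depth, height, width, channels); `UpSampling3D` is likewise documented as taking 5D input. `Reshape` keeps the batch axis and reshapes only the rest, so `Reshape((60,4,4,4,128))` produces the rank-6 shape `(None, 60, 4, 4, 4, 128)` seen in the error. The plain-Python sketch below mimics those two checks; `num_elements`, `reshape_output_shape` and `check_rank5` are hypothetical names, and the mimic ignores `-1` in a target shape. It also shows that dropping 60 from the target alone is not enough: `Dense` acts on the last axis, so `l16` is `(None, 60, 8192)`, which cannot be reshaped to `(4, 4, 4, 128)` per sample. The last case assumes the 60 axis has already been removed upstream (for example with a `Flatten` before the `Dense` layers); that is one possible option, not necessarily what the paper does.

```python
def num_elements(shape):
    """Number of values per sample (product of the given dims)."""
    total = 1
    for dim in shape:
        total *= dim
    return total


def reshape_output_shape(input_shape, target_shape):
    """Simplified Keras Reshape: keeps the batch axis; per-sample sizes must match."""
    if num_elements(input_shape[1:]) != num_elements(target_shape):
        raise ValueError(f"cannot reshape {input_shape} into {target_shape}")
    return (None,) + tuple(target_shape)


def check_rank5(shape):
    """Conv3DTranspose / UpSampling3D expect (batch, depth, height, width, channels)."""
    if len(shape) != 5:
        raise ValueError(f"Inputs should have rank 5, received input shape: {shape}")
    return shape


l16_shape = (None, 60, 128 * 4 * 4 * 4)  # Dense on (None, 60, 512) keeps the 60 axis

l17_shape = reshape_output_shape(l16_shape, (60, 4, 4, 4, 128))
print(l17_shape)  # (None, 60, 4, 4, 4, 128), i.e. rank 6
try:
    check_rank5(l17_shape)
except ValueError as err:
    print(err)    # same complaint as the traceback

try:
    reshape_output_shape(l16_shape, (4, 4, 4, 128))  # 60 * 8192 values vs 8192
except ValueError as err:
    print(err)

# Assumed fix: the 60 axis is removed before the Dense layers, so l16 is (None, 8192)
print(check_rank5(reshape_output_shape((None, 8192), (4, 4, 4, 128))))  # (None, 4, 4, 4, 128)
```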