I'm working on a simulation of a motor attached to a wing (later, this will also have a real-life counterpart, once I assemble all the components in our lab), and I can control the forces/torques that the motor applies. I want to use reinforcement learning (RL) to find an optimal action, in terms of

"what force should the motor apply to maximize lift".

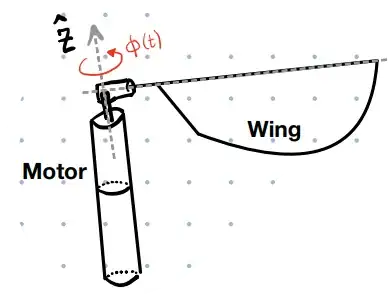

To make things clearer, see the figure above.

For example, I can compute $\phi$ at every time step $t$ (using first-year physics equations and Python integrators), and this will be my state $s$.
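To make the setup concrete, here is a minimal sketch of how the simulation could be wrapped as an RL environment with `reset` and `step` methods. Everything in it is an assumption for illustration, not my actual model: the class name `WingEnv`, the single-degree-of-freedom dynamics with quadratic drag, the numerical constants, and the reward, which uses $\dot\phi^2$ as a stand-in for lift. It also keeps $\dot\phi$ in the state alongside $\phi$, since for second-order dynamics $\phi$ alone does not determine the next step.

```python
class WingEnv:
    """Toy wing driven by a motor torque. All dynamics and constants are placeholders."""

    def __init__(self, inertia=1e-3, drag=5e-4, lift_coeff=1.0,
                 dt=1e-3, max_torque=0.05, horizon=1000):
        self.inertia = inertia        # moment of inertia (assumed value)
        self.drag = drag              # quadratic drag coefficient (assumed value)
        self.lift_coeff = lift_coeff  # scales the placeholder lift reward
        self.dt = dt                  # integration time step in seconds
        self.max_torque = max_torque  # actuator limit (assumed value)
        self.horizon = horizon        # number of steps per episode
        self.reset()

    def reset(self):
        """Start an episode at rest and return the initial state (phi, phi_dot)."""
        self.phi = 0.0
        self.phi_dot = 0.0
        self.t = 0
        return (self.phi, self.phi_dot)

    def step(self, torque):
        """Apply a torque for one time step; return (state, reward, done)."""
        # Clip the action to the actuator limit.
        torque = max(-self.max_torque, min(self.max_torque, torque))
        # Assumed model: inertia * phi_ddot = torque - drag * phi_dot * |phi_dot|
        phi_ddot = (torque - self.drag * self.phi_dot * abs(self.phi_dot)) / self.inertia
        # Semi-implicit Euler: update the velocity first, then the angle.
        self.phi_dot += phi_ddot * self.dt
        self.phi += self.phi_dot * self.dt
        self.t += 1
        # Placeholder reward: lift taken as proportional to angular velocity squared.
        reward = self.lift_coeff * self.phi_dot ** 2
        done = self.t >= self.horizon
        return (self.phi, self.phi_dot), reward, done


def rollout(env, policy):
    """Run one episode with policy(state) -> torque and return the total reward."""
    state = env.reset()
    total, done = 0.0, False
    while not done:
        state, reward, done = env.step(policy(state))
        total += reward
    return total


if __name__ == "__main__":
    # Constant-torque baseline, only to show the interface.
    print(rollout(WingEnv(), lambda state: 0.05))
```

With this interface, the action is the torque passed to `step`, and a learning algorithm would replace the constant-torque `policy` with one that is updated from the observed rewards.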

Are you familiar with approaches that deal with this problem?