There are a few questions here, so I'll address them in turn:

What to do with the results of a K-Fold Cross Validation? Generally, a Cross Validation (or any other form of data partitioning) is used as part of a hyper-parameter search. Your final model would then be trained using the best hyper-parameters found during the search and all of the available training data. This final model should then be tested on a held-out test set that was not used at any stage of training or model selection, to ensure that no information leaks from the test set into the model.
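As a minimal sketch of that workflow, assuming scikit-learn, a synthetic dataset and a logistic regression standing in for your 3D CNN, the test set is split off first, the K-fold search runs only on the training portion, and the refitted best model is scored once on the test set. The search space for `C` is a hypothetical example.

```python
# Sketch: K-fold hyper-parameter search, refit on all training data,
# then a single evaluation on a held-out test set.
# Assumptions: scikit-learn, synthetic data, logistic regression as a stand-in model.
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, KFold, train_test_split

X, y = make_classification(n_samples=500, n_features=20, random_state=0)

# Split off the test set first; the search never sees it.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.01, 0.1, 1.0, 10.0]},  # hypothetical search space
    cv=KFold(n_splits=5, shuffle=True, random_state=0),
    refit=True,  # retrain the best configuration on all of X_train
)
search.fit(X_train, y_train)

# search.best_estimator_ is the final model; score it once on the test set.
test_accuracy = search.score(X_test, y_test)
print(search.best_params_, round(test_accuracy, 3))
```

With `refit=True` (scikit-learn's default), `best_estimator_` has already been retrained on the whole of `X_train`, which corresponds to the "best hyper-parameters plus all of the training data" step.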

How to reduce the loss? This is a tough question, and there is no single answer that works in every situation. However, the ways of improving a model's performance can be crudely summarised as: better data, a better model, and more regularisation.

In many contexts, acquiring better data means acquiring "more data", but there are analyses suggesting that data cleaning and rebalancing can also bring significant improvements, although these improvements are hard to measure. Both must be carried out with care to avoid leaking information between the training and testing sets. Getting better data is also almost always the hardest of the three to do!
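To make the leakage point concrete, here is a minimal sketch of one rebalancing technique, random oversampling of minority classes, in plain NumPy. The helper name `oversample_minority`, the 9:1 toy labels and the 80/20 split are illustrative assumptions; the important detail is that the split happens first and only the training portion is rebalanced.

```python
import numpy as np

def oversample_minority(X, y, rng):
    """Hypothetical helper: randomly duplicate samples so every class
    matches the size of the largest class.

    Apply it to the training split only; rebalancing before splitting
    would place copies of training samples in the test set (leakage).
    """
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    indices = []
    for cls, count in zip(classes, counts):
        cls_idx = np.flatnonzero(y == cls)
        # Keep every original sample, then top up with random duplicates.
        extra = rng.choice(cls_idx, size=target - count, replace=True)
        indices.append(np.concatenate([cls_idx, extra]))
    indices = rng.permutation(np.concatenate(indices))
    return X[indices], y[indices]

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = np.array([0] * 90 + [1] * 10)  # hypothetical 9:1 class imbalance

# Split first, then rebalance the training portion only.
perm = rng.permutation(len(y))
train_idx, test_idx = perm[:80], perm[80:]
X_bal, y_bal = oversample_minority(X[train_idx], y[train_idx], rng)
print(np.bincount(y_bal), np.bincount(y[test_idx], minlength=2))
```

The test split keeps its original, imbalanced class mix, so evaluation still reflects the data the model will actually face.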

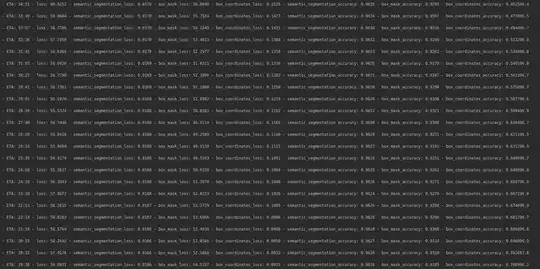

Better models can be achieved by changing your architecture or by ensembling multiple models. You've mentioned that you're using 3D CNNs on video input, so options for a better model that keep the same overall architecture include changing the number of layers and experimenting with different convolutional kernel shapes.
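As a sketch of those two knobs, assuming PyTorch (your framework may differ), here is a hypothetical `build_3d_cnn` helper in which the depth and the (time, height, width) kernel shape are parameters. This lets each configuration be dropped into the same cross-validation loop. Channel counts, pooling and the class count are illustrative.

```python
import torch
from torch import nn

def build_3d_cnn(num_layers=3, kernel_size=(3, 3, 3), channels=16,
                 in_channels=3, num_classes=10):
    """Hypothetical helper: a stack of Conv3d blocks with tunable depth
    and kernel shape given as (time, height, width)."""
    layers = []
    c_in = in_channels
    # "Same" padding for odd kernel sizes keeps T, H, W unchanged by the conv.
    padding = tuple(k // 2 for k in kernel_size)
    for _ in range(num_layers):
        layers += [
            nn.Conv3d(c_in, channels, kernel_size=kernel_size, padding=padding),
            nn.ReLU(),
            nn.MaxPool3d(kernel_size=(1, 2, 2)),  # halve H and W, keep time
        ]
        c_in = channels
    layers += [nn.AdaptiveAvgPool3d(1), nn.Flatten(), nn.Linear(channels, num_classes)]
    return nn.Sequential(*layers)

# Video batch layout: (batch, channels, frames, height, width)
clip = torch.randn(2, 3, 8, 64, 64)
for depth, kernel in [(2, (3, 3, 3)), (4, (5, 3, 3)), (3, (1, 3, 3))]:
    model = build_3d_cnn(num_layers=depth, kernel_size=kernel)
    print(depth, kernel, tuple(model(clip).shape))
```

A kernel such as `(5, 3, 3)` looks further across time than space, while `(1, 3, 3)` is purely spatial; comparing such shapes under the same folds is one fair way to see which temporal context helps.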

Finally, more regularisation usually helps to avoid overfitting and improves your model's ability to generalise to unseen data. This can be achieved by adding layers such as Dropout or Batch Normalisation, or by applying a regularisation penalty (such as L2 weight decay) to your model's weights.
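In PyTorch (again an assumption about your framework), all three forms of regularisation might look like the sketch below. The layer sizes and dropout rates are illustrative rather than tuned. The weight penalty here is `AdamW`'s decoupled weight decay, which is closely related to, but not identical to, an L2 term in the loss.

```python
import torch
from torch import nn

# Conv block with Batch Normalisation and Dropout (hypothetical sizes).
block = nn.Sequential(
    nn.Conv3d(3, 16, kernel_size=3, padding=1, bias=False),  # BN supplies the shift
    nn.BatchNorm3d(16),
    nn.ReLU(),
    nn.Dropout3d(p=0.2),  # zeroes whole feature maps during training
    nn.AdaptiveAvgPool3d(1),
    nn.Flatten(),
    nn.Dropout(p=0.5),
    nn.Linear(16, 10),
)

# Penalise large weights via decoupled weight decay.
optimizer = torch.optim.AdamW(block.parameters(), lr=1e-3, weight_decay=1e-2)

clip = torch.randn(4, 3, 8, 32, 32)  # (batch, channels, frames, H, W)
targets = torch.randint(0, 10, (4,))

block.train()  # dropout active, BN uses batch statistics
loss = nn.functional.cross_entropy(block(clip), targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()

block.eval()  # dropout off, BN uses its running statistics
with torch.no_grad():
    logits = block(clip)
print(logits.shape)
```

Switching between `train()` and `eval()` matters here: validation scores computed in training mode would include dropout noise and make the train/validation comparison misleading.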

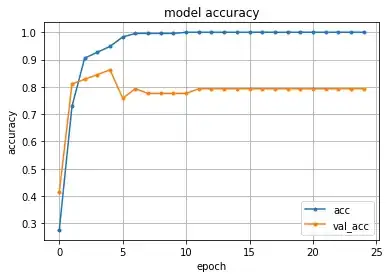

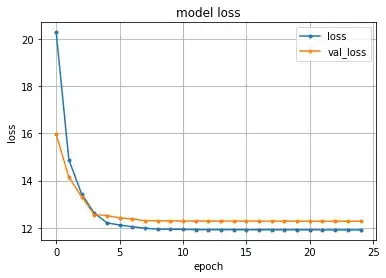

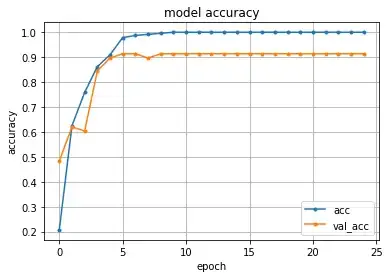

Is it overfitting? Probably, but that's not a death knell for your modelling approach per se. Your graphs show that, for these K-fold splits, the model's performance on the validation set stabilises at a worse level than its performance on the training set. If we can assume that the distribution of targets in these validation sets is the same as in the training sets, then the model has measurably learned patterns that are specific to the training sets: in other words, it is overfitting.
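One way to put a number on that gap is to average the difference between validation and training loss over the last few epochs of each fold, once both curves have flattened out. The function `generalisation_gap` and the loss histories below are hypothetical, made up for illustration rather than read from your graphs.

```python
from statistics import mean

def generalisation_gap(train_losses, val_losses, last_n=5):
    """Hypothetical helper: mean (validation - training) loss over the
    last `last_n` epochs. A persistent positive value after both curves
    have plateaued is the usual overfitting signature."""
    return mean(val_losses[-last_n:]) - mean(train_losses[-last_n:])

# Hypothetical per-fold loss histories, not real results.
folds = {
    "fold_1": ([1.0, 0.6, 0.4, 0.30, 0.25, 0.22], [1.1, 0.8, 0.7, 0.68, 0.69, 0.70]),
    "fold_2": ([1.0, 0.5, 0.35, 0.28, 0.24, 0.21], [1.0, 0.75, 0.66, 0.65, 0.66, 0.67]),
}
gaps = {name: generalisation_gap(tr, va, last_n=3) for name, (tr, va) in folds.items()}
print(gaps)
```

Tracking this single number per fold makes it easier to tell whether a change in architecture or regularisation actually narrows the gap, rather than judging from the plots alone.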

However, it's worth checking that this assumption of equal distributions actually holds. If it doesn't, I'd recommend using Stratified K-Fold Cross Validation for future experiments: it preserves the class proportions in each fold, so the comparison between training and validation performance is fair.
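A quick way to check the assumption is to look at the class mix in each validation fold. This sketch, assuming scikit-learn and hypothetical binary labels stored in sorted order, compares plain `KFold` with `StratifiedKFold`.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Hypothetical imbalanced labels in sorted order (a worst case for plain KFold).
y = np.array([0] * 80 + [1] * 20)
X = np.zeros((len(y), 1))  # the features don't affect how the folds are assigned

def minority_share_per_fold(splitter):
    """Fraction of class 1 in each validation fold."""
    return [float(y[val_idx].mean()) for _, val_idx in splitter.split(X, y)]

print("KFold:          ", minority_share_per_fold(KFold(n_splits=5)))
print("StratifiedKFold:", minority_share_per_fold(StratifiedKFold(n_splits=5)))
```

With sorted labels and no shuffling, plain `KFold` puts the entire minority class into a single fold, while `StratifiedKFold` gives every fold the overall 20% share. Passing `shuffle=True` to `KFold` helps on average, but only stratification keeps each fold's proportions as close to the overall ratio as the fold sizes allow.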

If you re-run with different architectures, more regularisation and stratification (if needed), then the generalised performance of the model may improve. There's a lot of fun experimentation to try from here, and I wish you a healthy dose of luck and patience! :)