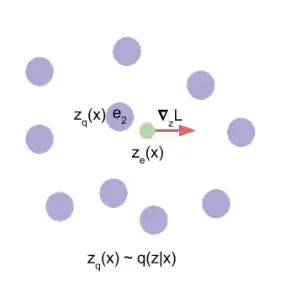

I’m working on a classification problem with 500 classes. My network has 3 fully connected layers followed by an LSTM layer, and I use `nn.CrossEntropyLoss()` as the loss function. To handle class imbalance, I compute class weights with sklearn’s `class_weight` module and use them when initializing the loss:

```python
import numpy as np
from sklearn.utils import class_weight

# y_org: integer class labels of the training set
class_weights = class_weight.compute_class_weight(class_weight='balanced', classes=np.unique(y_org), y=y_org)
```
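For reference, sklearn documents the `'balanced'` option as setting each class's weight to `n_samples / (n_classes * count_of_that_class)`, so rare classes get large weights and common classes get small ones. The sketch below reproduces that formula in plain NumPy on hypothetical toy labels; `balanced_class_weights` and `y_toy` are illustrative names, not part of sklearn.

```python
import numpy as np

def balanced_class_weights(y: np.ndarray) -> np.ndarray:
    """Reproduce sklearn's class_weight='balanced' formula:
    n_samples / (n_classes * count_per_class), in sorted class order."""
    classes, counts = np.unique(y, return_counts=True)
    return len(y) / (len(classes) * counts)

# Hypothetical toy labels: class 0 is common, class 2 is rare.
y_toy = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 2])
w = balanced_class_weights(y_toy)
print(w)  # the rare class 2 receives the largest weight
```

In PyTorch, such an array would typically be passed as `nn.CrossEntropyLoss(weight=torch.tensor(class_weights, dtype=torch.float32))`. Assuming the labels are integers 0..499, note that `weight` needs exactly one entry per class, so if some class never appears in `y_org`, the array built from `np.unique(y_org)` would be shorter than 500 and no longer line up with the class indices.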

The plot shows how often each class occurs, both in the model’s predictions and in the ground truth. The x-axis is the class index and the y-axis is the number of occurrences.
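The per-class counts behind a plot like this can be computed with `np.bincount`, using `minlength` so that classes that never occur still get a zero entry. This is a minimal sketch on hypothetical labels with 5 classes instead of 500; `y_true`, `y_pred` and `counts_per_class` are illustrative names.

```python
import numpy as np

NUM_CLASSES = 5  # the post uses 500; a small value keeps the toy example readable

def counts_per_class(labels: np.ndarray, num_classes: int) -> np.ndarray:
    """Number of times each class index 0..num_classes-1 occurs in `labels`."""
    return np.bincount(labels, minlength=num_classes)

# Hypothetical ground-truth labels and model predictions (integer class indices).
y_true = np.array([0, 0, 0, 1, 2, 2, 3, 4, 4, 4])
y_pred = np.array([1, 3, 3, 1, 1, 3, 0, 2, 1, 3])

true_counts = counts_per_class(y_true, NUM_CLASSES)
pred_counts = counts_per_class(y_pred, NUM_CLASSES)
print("ground truth:", true_counts)
print("predicted:   ", pred_counts)
```

Plotting `true_counts` and `pred_counts` against the class index gives the two curves; without `minlength`, the arrays could have different lengths when the highest class index is missing from one of them.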

The pattern is that the ground-truth and predicted counts are almost mirror images of each other: a class is predicted more often when it appears less often in the ground truth, and vice versa (every peak in the blue curve lines up with a dip in the orange curve, and vice versa). What could be going wrong here?
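One way to put a number on this mirroring is the Pearson correlation between the per-class ground-truth counts and the per-class prediction counts: a value near +1 means the predictions follow the label distribution, while a clearly negative value matches the inverted pattern described above. A minimal sketch with hypothetical counts (`count_correlation` is an illustrative name):

```python
import numpy as np

def count_correlation(true_counts: np.ndarray, pred_counts: np.ndarray) -> float:
    """Pearson correlation between per-class ground-truth and prediction counts.
    Near +1: predictions track the labels; clearly negative: the curves mirror each other."""
    return float(np.corrcoef(true_counts, pred_counts)[0, 1])

# Hypothetical per-class counts that mirror each other, as in the plot.
true_counts = np.array([50, 5, 40, 10, 45])
pred_counts = np.array([8, 45, 12, 40, 5])
r = count_correlation(true_counts, pred_counts)
print(f"correlation: {r:.2f}")
```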