I am developing an ANN from scratch which classifies MNIST digits.

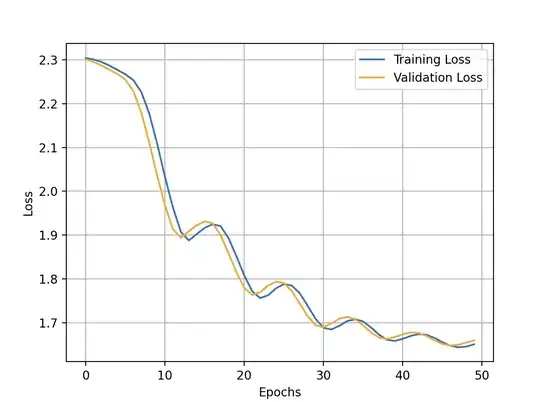

These are the curves I get using only one hidden layer composed of 100 neurons activated by ReLU function. The output's neurons are activated by the softmax function:

Is it correct that training and validation loss are almost identical? Does it mean that my model perfectly fit the data?