Edit:

I managed to fix this by changing the optimizer to SGD.

I am very new to reinforcement learning. I tried to build a DDQN (double deep Q-network) for the game Snake, but for some reason the agent keeps learning to crash into the wall. I've tried changing the hyperparameters (e.g. gamma, batch size, maximum memory size, and learning rate), but I still get the same result.

The code is in Luau and is quite long, but if you want to see it, here is a link to it on GitHub: https://github.com/joejoemallianjoe/DDQN-Snake/blob/main/very%20long%20script.lua

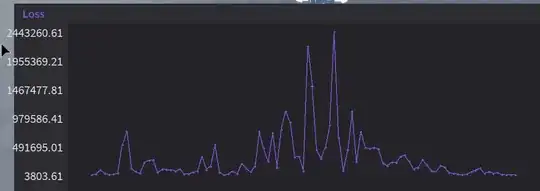

Graphs of loss and reward after around 650 episodes of training or so:

Input structure:

- apple z > head z

- apple z < head z

- apple x > head x

- apple x < head x

- is there an obstacle in front of snake?

- is there an obstacle to the left of snake?

- is there an obstacle to the right of snake?

- is the snake going up?

- is the snake going down?

- is the snake going right?

- is the snake going left?
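As a rough illustration of how the 11 inputs above could be computed, here is a Python sketch (the actual project is in Luau). It assumes a grid of `width` by `height` cells indexed by `(x, z)`, that "up" means +z and "right" means +x, and that `body` is the set of cells occupied by the snake excluding the head. `encode_state`, `rotate_left`, `rotate_right` and `is_blocked` are hypothetical helpers, not names from the linked script.

```python
from typing import List, Set, Tuple

Vec = Tuple[int, int]  # (x, z) grid cell or direction

# Assumed convention: "up" is +z and "right" is +x (may differ from the Luau code).
DIRECTIONS = {"up": (0, 1), "down": (0, -1), "right": (1, 0), "left": (-1, 0)}


def rotate_left(d: Vec) -> Vec:
    # 90 degree counter-clockwise turn of a direction vector.
    return (-d[1], d[0])


def rotate_right(d: Vec) -> Vec:
    # 90 degree clockwise turn of a direction vector.
    return (d[1], -d[0])


def is_blocked(cell: Vec, body: Set[Vec], width: int, height: int) -> bool:
    # A cell is an obstacle if it lies outside the grid (wall) or on the body.
    x, z = cell
    out_of_bounds = not (0 <= x < width and 0 <= z < height)
    return out_of_bounds or cell in body


def encode_state(head: Vec, heading: str, apple: Vec, body: Set[Vec],
                 width: int, height: int) -> List[int]:
    d = DIRECTIONS[heading]
    hx, hz = head
    ax, az = apple

    def neighbour(offset: Vec) -> Vec:
        return (hx + offset[0], hz + offset[1])

    return [
        int(az > hz),                                                # apple z > head z
        int(az < hz),                                                # apple z < head z
        int(ax > hx),                                                # apple x > head x
        int(ax < hx),                                                # apple x < head x
        int(is_blocked(neighbour(d), body, width, height)),          # obstacle in front
        int(is_blocked(neighbour(rotate_left(d)), body, width, height)),   # obstacle left
        int(is_blocked(neighbour(rotate_right(d)), body, width, height)),  # obstacle right
        int(heading == "up"),
        int(heading == "down"),
        int(heading == "right"),
        int(heading == "left"),
    ]
```

For example, `encode_state((0, 5), "left", (3, 2), set(), 10, 10)` describes a head on the left edge moving left, so the "obstacle in front" flag is 1 while the left and right flags are 0.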

Reward structure:

- -10 for crashing into the wall

- +10 for getting the apple

- -1 for moving away from the apple

- +1 for going towards the apple
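The reward scheme above might look like this in code. This sketch assumes that "toward the apple" is judged by Manhattan distance on the grid and that the game already reports whether the move crashed or ate the apple; `step_reward` and its arguments are hypothetical.

```python
from typing import Tuple

Vec = Tuple[int, int]  # (x, z) grid cell


def manhattan(a: Vec, b: Vec) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def step_reward(old_head: Vec, new_head: Vec, apple: Vec,
                crashed: bool, ate_apple: bool) -> float:
    if crashed:
        return -10.0  # crash ends the episode
    if ate_apple:
        return 10.0
    # Assumed: "toward" means the Manhattan distance to the apple shrank.
    if manhattan(new_head, apple) < manhattan(old_head, apple):
        return 1.0
    return -1.0
```

Because one orthogonal step always changes the Manhattan distance by exactly one, every non-terminal step that does not reach the apple earns either +1 or -1, with no neutral case.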

Hyperparameters / network configuration:

- Gamma is 0.95

- Epsilon decay is 0.995

- Target network update frequency is 32

- Learning rate is 0.001

- Batch size is 64

- Maximum memory size is 10,000

- Optimizer is SGD with momentum, momentum = 0.99

- Loss function is MSE

- The network outputs Q values for going up, down, right, or left
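For reference, the settings above fit together roughly as follows in a Double DQN update. This is a plain-Python sketch, not the linked Luau code: the online network picks the best next action and the target network evaluates it, the MSE loss is taken only on the action that was actually played, and the momentum update uses one common formulation (v = momentum * v + g, then w = w - lr * v); some libraries fold the learning rate into the velocity instead. `EPSILON_MIN` and all function names are assumptions, and the periodic copy of online weights into the target network is not shown.

```python
from typing import List, Sequence, Tuple

GAMMA = 0.95           # discount factor from the post
EPSILON_DECAY = 0.995  # multiplicative decay from the post
EPSILON_MIN = 0.01     # assumed floor; not stated in the post


def ddqn_targets(rewards: Sequence[float], dones: Sequence[bool],
                 next_q_online: Sequence[Sequence[float]],
                 next_q_target: Sequence[Sequence[float]],
                 gamma: float = GAMMA) -> List[float]:
    """Double DQN targets: the online net picks a', the target net scores it."""
    targets = []
    for r, done, q_on, q_tg in zip(rewards, dones, next_q_online, next_q_target):
        if done:
            # Terminal transition (e.g. a crash): no bootstrapped future value.
            targets.append(r)
        else:
            best_action = max(range(len(q_on)), key=lambda a: q_on[a])
            targets.append(r + gamma * q_tg[best_action])
    return targets


def mse_on_taken_actions(q_pred: Sequence[Sequence[float]],
                         actions: Sequence[int],
                         targets: Sequence[float]) -> float:
    """MSE between Q(s, a) for the action actually taken and its target."""
    errors = [(q[a] - t) ** 2 for q, a, t in zip(q_pred, actions, targets)]
    return sum(errors) / len(errors)


def sgd_momentum_step(weights: Sequence[float], velocity: Sequence[float],
                      grads: Sequence[float], lr: float = 0.001,
                      momentum: float = 0.99) -> Tuple[List[float], List[float]]:
    """One SGD-with-momentum update: v = momentum * v + g; w = w - lr * v."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_weights = [w - lr * v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


def decay_epsilon(epsilon: float, decay: float = EPSILON_DECAY,
                  eps_min: float = EPSILON_MIN) -> float:
    """Multiplicative epsilon decay, clamped at an assumed minimum."""
    return max(eps_min, epsilon * decay)
```

With four outputs (up, down, right, left), each row of `next_q_online` and `next_q_target` holds four Q values, and `actions` holds indices 0 to 3.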

Here is a GIF showing the behavior: