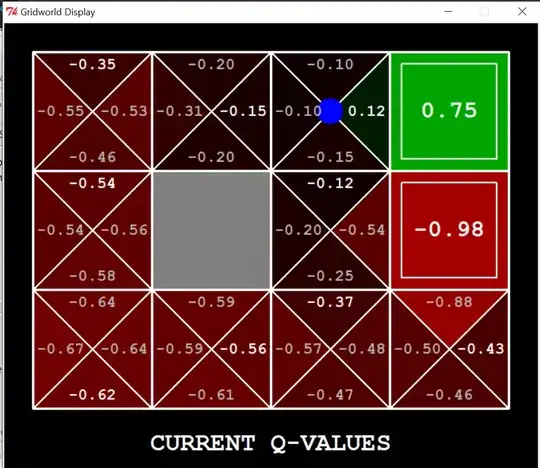

> First of all, I am assuming that the values written inside the terminal states are the rewards and not the values of these states since we don't have $q$-values calculated for these states like the other states.

Correct. The value of a terminal state is zero by convention, since no further reward can be collected once the episode has ended. This topic is beyond the scope of the question, so I will provide a link to a relevant AI Stack Exchange question with an explanation.

> If you see for example the green terminal state, at time $t$ the reward is equal to 0.75 but after some time it has converged to 1. So can we have a changing reward in an episodic task, and if so, why?

Definitions: This gridworld environment, along with most RL environments, possesses an underlying Markov decision process (MDP). Using the Wikipedia definition, an MDP is endowed with a state space $\mathcal{S}$, an action space $\mathcal{A}$, a state transition function $P_a(s, s')$, and a reward function $R_a(s, s')$. The reward function gives the reward $r$ received when taking action $a$ in state $s$ leads to next state $s'$. In the case of finite MDPs, see equation 3.6 of Sutton and Barto for the mathematical formulation of the reward function.
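For reference, one standard way to write this expected reward for a finite MDP is sketched below. The notation is an assumption on my part: it uses the four-argument dynamics function $p(s', r \mid s, a)$ in the style of Sutton and Barto, with $r(s, a, s')$ playing the role of $R_a(s, s')$ above.

$$
r(s, a, s') = \mathbb{E}\left[R_t \mid S_{t-1} = s,\ A_{t-1} = a,\ S_t = s'\right] = \sum_{r \in \mathcal{R}} r \, \frac{p(s', r \mid s, a)}{p(s' \mid s, a)}
$$

Note that this is an expectation, so the realized reward on any single transition is allowed to vary around it.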

Answer: To answer your question, yes, there can exist changing rewards in an episodic task. This amounts to having an underlying nonstationary MDP, where the reward function changes over time. If the state does not encode time, the reward function may be viewed as stochastic instead of deterministic, since the realized reward of transitioning to a given state can attain various values. Another possible viewpoint is to let the state encode the current time $t$: if the reward is a deterministic function of $s$, $a$, $s'$, and $t$, then the reward function on this augmented state is deterministic. This is an example in which encoding more relevant information into the state when designing the MDP could improve both the learning speed and the final performance of the learning algorithm.
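A minimal sketch of these two viewpoints, assuming a hypothetical reward that drifts from 0.75 toward 1 (the numbers echo the question; the decay schedule, the `GOAL` label, and the function names are made up for illustration and are not taken from the environment in the image):

```python
from collections import defaultdict

GOAL = "goal"  # hypothetical label for the green terminal state


def reward(next_state, t):
    """Assumed drifting reward: 0.75 at t=0, approaching 1.0 as t grows."""
    if next_state != GOAL:
        return 0.0
    return 1.0 - 0.25 * 0.5 ** t


def observed_rewards(include_time, horizon=5):
    """Collect the set of distinct rewards seen per agent-visible state."""
    seen = defaultdict(set)
    for t in range(horizon):
        # The only difference between the two views is whether t is
        # part of the state that the agent gets to condition on.
        key = (GOAL, t) if include_time else GOAL
        seen[key].add(reward(GOAL, t))
    return dict(seen)


without_time = observed_rewards(include_time=False)
with_time = observed_rewards(include_time=True)

# Without t in the state, one state maps to several rewards ("stochastic").
print(len(without_time[GOAL]))
# With t in the state, every (state, t) pair maps to exactly one reward.
print(all(len(r) == 1 for r in with_time.values()))
```

The environment is identical in both cases. Only the information available in the state changes, and with it whether the reward looks random or deterministic to the agent.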

Example: As a toy example, assume that your gridworld problem is to navigate to a specific beach by the ocean. In this problem, each episode is one day, and the reward is the happiness or recreational value achieved at the beach. Perhaps the terminal state with positive reward is the correct beach, and the terminal state with negative reward is the wrong beach (assume that navigating to the wrong beach spoils your day and provides a large negative reward). In this case, the reward function for reaching the terminal state of the correct beach may not be constant and could be dictated by external factors such as the weather, occupancy, entertainment, availability of amenities, etc. For example, having bright, sunny weather may provide a high positive reward, while having gloomy weather may provide a low positive reward.
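The beach example could be sketched roughly as follows. Everything here is an illustrative assumption: the weather categories, the reward values, the uniform weather distribution, and the names `WEATHER_REWARD`, `beach_reward`, and `simulate_days` are all hypothetical.

```python
import random

# Assumed reward for reaching the correct beach under each kind of weather.
WEATHER_REWARD = {"sunny": 1.0, "cloudy": 0.75, "gloomy": 0.25}
# Assumed large negative reward for ending the day at the wrong beach.
WRONG_BEACH_REWARD = -1.0


def beach_reward(terminal_state, weather):
    """Reward on reaching a terminal state, given that day's weather."""
    if terminal_state == "wrong_beach":
        return WRONG_BEACH_REWARD
    return WEATHER_REWARD[weather]


def simulate_days(days, seed=0):
    """One episode per day: always reach the correct beach, weather varies."""
    rng = random.Random(seed)
    kinds = list(WEATHER_REWARD)
    return [beach_reward("correct_beach", rng.choice(kinds)) for _ in range(days)]


rewards = simulate_days(days=10)
print(rewards)  # same terminal state every day, but the reward changes
```

An agent that cannot observe the weather sees a changing reward for the same terminal state. An agent whose state includes the weather sees a fixed reward for each (beach, weather) pair.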

Conclusion: Nonstationary reward functions can easily occur in environments that possess a temporal aspect such as the stock market, retail sales, an electrical grid, etc. The state transition function could also be nonstationary, for instance, when playing against increasingly better opponents in board games such as Go (e.g. self-play algorithms). Many of the factors discussed above make it especially important to understand and investigate the MDP before designing a corresponding learning algorithm.