It seems to me that Generative Adversarial Networks (GANs) have a practical problem when you try to reproduce one of their output images with only a controlled change, such as a different rotation.

For example, as you can see in https://www.youtube.com/watch?v=oIzwe_MOeQI&t=1057s, the generated object's shape and background seem to change along with its rotation.

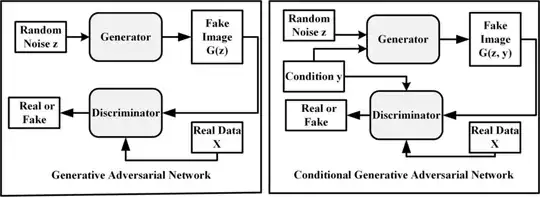

Can any of the StyleGANs be made to take an image of a car, along with some sort of key that says how it is rotated, and then take that key into account when the trained model generates images?
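To make the idea of a "key" concrete, here is a rough sketch of what I have in mind. It assumes the rotation angle (in degrees) is known for every training image. The angle is encoded as (sin, cos) so that 0 and 360 degrees give the same key, and that key is concatenated with the random latent vector, which is the generic input-concatenation approach used by conditional GANs. The function names and the 512-dimensional latent are hypothetical, and no actual generator is included.

```python
import math
import random

def rotation_key(angle_degrees):
    """Encode a rotation angle as (sin, cos) so that 0 and 360 degrees map to the same key."""
    theta = math.radians(angle_degrees)
    return [math.sin(theta), math.cos(theta)]

def conditioned_input(latent, angle_degrees):
    """Concatenate the random latent vector with the rotation key.

    A (hypothetical) conditional generator would receive this combined vector
    instead of the latent alone, both during training and during generation.
    """
    return list(latent) + rotation_key(angle_degrees)

# Same latent (intended to be the "same car"), two different requested rotations.
random.seed(0)
z = [random.gauss(0.0, 1.0) for _ in range(512)]  # 512 is an assumed latent size
front = conditioned_input(z, 0)
side = conditioned_input(z, 90)
```

The hope is that, with the latent part held fixed, only the key changes between `front` and `side`, so the model would have a reason to keep the car's shape and background constant while rotating it.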

Labeling the data with rotations would obviously take substantial effort, but I'm curious whether this has been done and published.