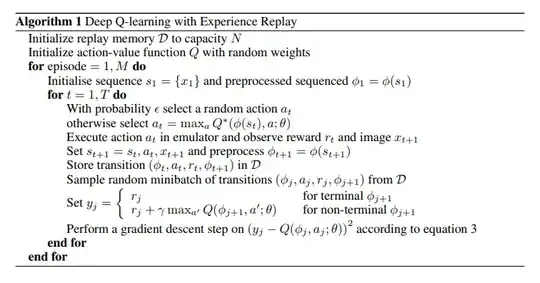

I was reading this paper, https://www.cs.toronto.edu/~vmnih/docs/dqn.pdf, which gives the following algorithm for deep Q-learning with experience replay:

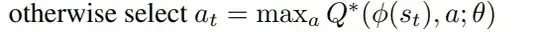
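For reference, here is a rough Python sketch of lines 7 and 12 of that algorithm. It is a minimal illustration under stated assumptions, not the paper's code. The names `q_values`, `select_action` and `compute_targets`, the tuple layout of a transition (including an explicit `terminal` flag), and the toy lookup table standing in for the network are all hypothetical. Line 7's $\max_a$ is read as an argmax, because that line has to return an action rather than a value.

```python
import random
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface for this sketch: q_values(state) returns one estimated
# action value per discrete action, i.e. Q(state, a; theta) for every a.
QFunction = Callable[[object], Sequence[float]]

# Hypothetical transition layout (phi_j, a_j, r_j, phi_{j+1}, terminal); the
# terminal flag is an assumption standing in for "terminal phi_{j+1}".
Transition = Tuple[object, int, float, object, bool]


def select_action(state: object, q_values: QFunction, epsilon: float,
                  rng: random.Random) -> int:
    """Line 7: epsilon-greedy choice of a_t for the current state phi(s_t)."""
    values = q_values(state)
    if rng.random() < epsilon:
        # With probability epsilon, pick a uniformly random action.
        return rng.randrange(len(values))
    # Otherwise return the action a that maximizes Q(phi(s_t), a) (an argmax).
    return max(range(len(values)), key=lambda a: values[a])


def compute_targets(batch: Sequence[Transition], q_values: QFunction,
                    gamma: float) -> List[float]:
    """Line 12: target y_j for each transition in a sampled minibatch."""
    targets = []
    for _phi_j, _a_j, r_j, phi_next, terminal in batch:
        if terminal:
            # Terminal phi_{j+1}: y_j = r_j.
            targets.append(r_j)
        else:
            # Non-terminal: y_j = r_j + gamma * max_{a'} Q(phi_{j+1}, a').
            # a' is not read from the stored transition; it ranges over every
            # action in the next state, and only the largest value is kept.
            targets.append(r_j + gamma * max(q_values(phi_next)))
    return targets


if __name__ == "__main__":
    table = {"s1": [0.0, 1.0], "s2": [2.0, 5.0]}  # toy Q-values, two actions
    batch = [("s1", 0, 1.0, "s2", False), ("s2", 1, 3.0, "s3", True)]
    print(compute_targets(batch, lambda s: table[s], gamma=0.9))  # [5.5, 3.0]
    print(select_action("s2", lambda s: table[s], 0.0, random.Random(0)))  # 1
```

In this reading, both $a$ and $a'$ are bound variables of a max over all actions. They differ only in which state that max is taken over: $\phi(s_t)$ when acting, and $\phi_{j+1}$ when building the target.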

On line 12, where the algorithm sets the values of $y_j$, the second case says:

$$y_j = r_j + \gamma \max_{a'} Q(\phi_{j+1}, a'; \theta) \quad \text{for non-terminal } \phi_{j+1}$$

I'm confused as to what a' refers to and where it comes from.

Edit: Why is it $a$ on line 7:

$$a_t = \max_a Q^*(\phi(s_t), a; \theta)$$

but $a'$ on line 12?

Can someone please explain it to me?