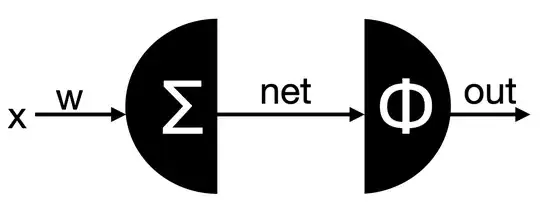

Assuming a single perceptron (see figure), I have found three versions of how to compute one factor of the backpropagation weight update. The perceptron is drawn in two parts: the weighted sum on the left, whose output is $net$, and the sigmoid function $\phi$ on the right, whose output is $out$.

For the backpropagation step, we apply the chain rule, $\frac{\partial cost}{\partial w}=\frac{\partial cost}{\partial out}\times \frac{\partial out}{\partial net} \times \frac{\partial net}{\partial w}$, to find how the weight $w$ affects the cost.
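To make the chain rule concrete, here is a minimal sketch that estimates each factor with central finite differences and compares their product with a direct estimate of the derivative of the cost with respect to $w$. The single input `x`, the weight `w`, the `target`, and the squared-error cost are all assumptions chosen for illustration; none of them come from the figure.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, written phi in the text."""
    return 1.0 / (1.0 + math.exp(-z))

def central_diff(f, x, h=1e-6):
    """Central finite-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

# Hypothetical values for one input, one weight, and one target.
x, w, target = 1.5, 0.8, 1.0

def net_of_w(w_):
    # Weighted sum with a single input and no bias (assumption).
    return w_ * x

def cost_of_out(o):
    # Squared-error cost (assumption; the post does not name a cost).
    return 0.5 * (target - o) ** 2

net = net_of_w(w)
out = sigmoid(net)

# Each factor of the chain rule, estimated numerically.
d_cost_d_out = central_diff(cost_of_out, out)
d_out_d_net = central_diff(sigmoid, net)
d_net_d_w = central_diff(net_of_w, w)
chain_product = d_cost_d_out * d_out_d_net * d_net_d_w

# The same derivative, estimated directly through the whole composition.
direct = central_diff(lambda w_: cost_of_out(sigmoid(net_of_w(w_))), w)

print(chain_product, direct)
```

The two printed numbers should agree up to finite-difference error, which is all the chain rule claims here.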

For the factor $\frac{\partial out}{\partial net}$ I have seen three different versions of how to compute the value:

- $\frac{\partial out}{\partial net} = \phi'(out) = \phi(out) \times (1 - \phi(out))$

- $\frac{\partial out}{\partial net} = \phi'(net) = \phi(net) \times (1 - \phi(net))$

- $\frac{\partial out}{\partial net} = out \times (1 - out)$
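One way to compare the three candidates is to evaluate each of them at the same point and set the results against a finite-difference estimate of the slope of the sigmoid. The sketch below does that; the value of `net` is an arbitrary assumption, and `version_1` to `version_3` are hypothetical names that follow the order of the list above.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, written phi in the text."""
    return 1.0 / (1.0 + math.exp(-z))

# Arbitrary pre-activation value chosen for illustration (assumption).
net = 1.2
out = sigmoid(net)

# Reference: central finite-difference estimate of d out / d net.
h = 1e-6
numeric = (sigmoid(net + h) - sigmoid(net - h)) / (2.0 * h)

# The three candidate expressions, in the order listed above.
version_1 = sigmoid(out) * (1.0 - sigmoid(out))  # phi applied to out
version_2 = sigmoid(net) * (1.0 - sigmoid(net))  # phi applied to net
version_3 = out * (1.0 - out)                    # reuses the stored output

print("numeric  :", numeric)
print("version 1:", version_1)
print("version 2:", version_2)
print("version 3:", version_3)
```

Since $out = \phi(net)$, the second and third expressions are the same number and both match the numerical estimate, whereas the first applies the sigmoid a second time to $out$ and gives a different value at this point.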

Can somebody explain to me which one is correct and why? Or is there one which should be preferred?