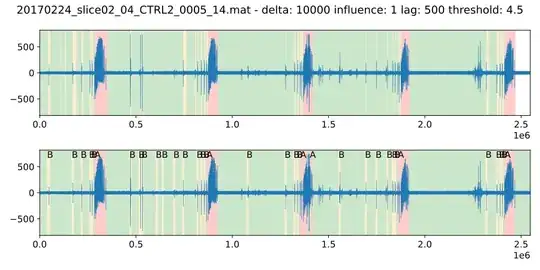

I am working on a classification algorithm for brain rhythms. However, when I computed precision, accuracy, F1 score, and recall, the results showed that my algorithm has high precision but low recall.

I am not an expert in these metrics or this kind of analysis, and I don't know whether it makes sense to have high precision but low recall. What does it mean?

These are the results for my reference and output models:

    "A": {
        "FN": 5,
        "FP": 0,
        "Jaccard Index": 0.5454545454545454,
        "TP": 6,
        "f1-score": 0.7058823529411764,
        "precision": 1.0,
        "recall": 0.5454545454545454
    },
    "B": {
        "FN": 34,
        "FP": 5,
        "Jaccard Index": 0.38095238095238093,
        "TP": 24,
        "f1-score": 0.5517241379310345,
        "precision": 0.8275862068965517,
        "recall": 0.41379310344827586
    },
    "C": {
        "FN": 39,
        "FP": 9,
        "Jaccard Index": 0.36,
        "TP": 27,
        "f1-score": 0.5294117647058824,
        "precision": 0.75,
        "recall": 0.4090909090909091
    },
    "SNR": 28.121645860790924
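
For reference, here is a minimal sketch of how I understand these numbers to follow from the TP/FP/FN counts, assuming the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN), F1 = 2TP / (2TP + FP + FN), Jaccard = TP / (TP + FP + FN)). The function name `detection_metrics` and the `counts` dictionary are only illustrative and are not taken from my actual pipeline.

```python
def detection_metrics(tp, fp, fn):
    """Compute precision, recall, F1 and Jaccard index from raw counts.

    Assumes the standard definitions; returns 0.0 when a denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    jaccard = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1-score": f1,
        "Jaccard Index": jaccard,
    }


# (TP, FP, FN) per class, copied from the results listed above
counts = {"A": (6, 0, 5), "B": (24, 5, 34), "C": (27, 9, 39)}

results = {name: detection_metrics(*c) for name, c in counts.items()}

for name, metrics in results.items():
    print(name, metrics)
```

If these definitions are the right ones, the values reproduce the ones listed above. Read this way, class A has no false positives (every detection is correct), but 5 of the 11 reference events are missed, which is what gives a precision of 1.0 with a recall of about 0.55.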