An encoder takes $Q$, $K$, $V$ as inputs but has a single output, i.e. 3 inputs vs. 1 output.

How do you stack them?

Is there a more detailed diagram?

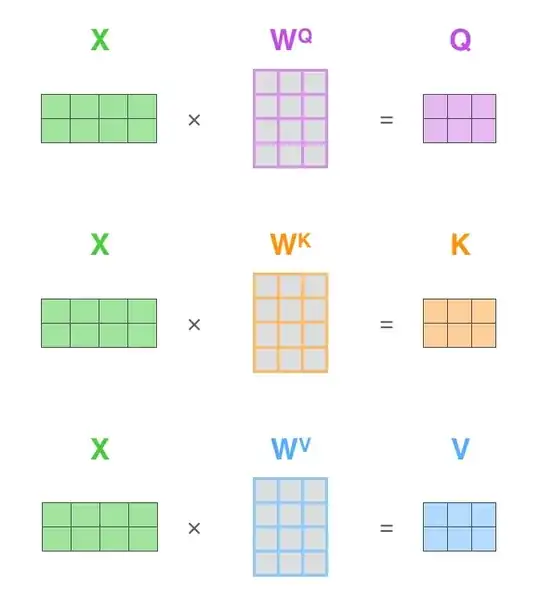

One encoder block of the transformer takes a single tensor $X$ as input and multiplies it by $W_Q$, $W_K$, $W_V$ to calculate the $Q$, $K$, $V$ needed in self-attention. So $Q$, $K$, $V$ are computed inside the block; they are not three separate inputs to it.

After performing attention and the feed-forward step, this encoder block returns a single $X'$ with the same shape as $X$, ready to be taken as input by the next encoder block. That is why the blocks can be stacked.
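To make the stacking concrete, here is a minimal NumPy sketch of the idea, not a reference implementation. It assumes a single attention head, random (untrained) weights, and made-up sizes; the names `init_block`, `encoder_block`, `W_1`, `W_2`, `d_model` and `d_ff` are hypothetical and chosen only for illustration. The residual connections and layer normalization are taken from the standard transformer design rather than from anything stated above.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Simplified layer norm without learned scale/shift (assumption for brevity).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def init_block(rng, d_model, d_ff):
    """Random, untrained weights for one hypothetical encoder block."""
    s = 1.0 / np.sqrt(d_model)
    return {
        "W_Q": rng.normal(0, s, (d_model, d_model)),
        "W_K": rng.normal(0, s, (d_model, d_model)),
        "W_V": rng.normal(0, s, (d_model, d_model)),
        "W_1": rng.normal(0, s, (d_model, d_ff)),
        "W_2": rng.normal(0, 1.0 / np.sqrt(d_ff), (d_ff, d_model)),
    }

def encoder_block(X, p):
    # The block receives ONE tensor X and derives Q, K, V from it internally.
    Q = X @ p["W_Q"]
    K = X @ p["W_K"]
    V = X @ p["W_V"]
    scores = Q @ K.T / np.sqrt(K.shape[-1])    # scaled dot-product scores
    attn = softmax(scores, axis=-1) @ V        # (seq_len, d_model)
    X = layer_norm(X + attn)                   # residual + norm
    ff = np.maximum(0, X @ p["W_1"]) @ p["W_2"]  # position-wise feed-forward (ReLU)
    return layer_norm(X + ff)                  # X' has the same shape as X

rng = np.random.default_rng(0)
seq_len, d_model, d_ff, n_blocks = 4, 8, 16, 3
X = rng.normal(size=(seq_len, d_model))
blocks = [init_block(rng, d_model, d_ff) for _ in range(n_blocks)]

out = X
for p in blocks:          # stacking: the output of one block feeds the next
    out = encoder_block(out, p)

print(out.shape)  # (4, 8)
```

Each block has its own $W_Q$, $W_K$, $W_V$, so every layer computes fresh $Q$, $K$, $V$ from whatever tensor it receives. Because input and output shapes match, the loop can chain any number of blocks.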

I find this specific post really helpful:

https://jalammar.github.io/illustrated-transformer/

There you can find an image showing that, from one single input $X$, we calculate $Q$, $K$, $V$. Learning the weight matrices $W_Q$, $W_K$, $W_V$ is part of training the encoder.