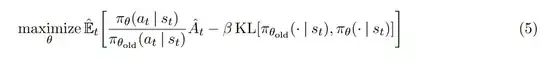

In the original paper, the objective of PPO is as follows (see the image above). My question is: how does this objective behave in a sparse-reward setting (i.e., a reward is only given after a sequence of actions has been taken)? In this case, we don't have $\hat{A}_{t}$ defined for every $t$.



Sam


- Why wouldn't we have $\hat{A}_{t}$ for every $t$? There is usually a (state, reward, next_state) tuple associated with every time step $t$. In sparse-reward settings, the reward is simply 0 for non-rewarding states. – desert_ranger Mar 06 '23 at 01:40

- @desert_ranger Yes, you can think of the reward as 0 for those states, but in some situations it might be undefined. – Sam Mar 06 '23 at 10:58

- It is the user who designs the reward for each step. Therefore, as long as the environment is formulated correctly, this shouldn't happen. – desert_ranger Mar 06 '23 at 23:28

- @desert_ranger Think of Go. By default, not every move has a reward assigned. Are you suggesting going down the reward-shaping route to introduce artificial rewards? – Sam Mar 07 '23 at 02:45

- A reward _must_ exist for every $t$. As we are operating in an MDP, each $t$ corresponds to a transition from a state $s$ to another state $s'$ given an action $a$. By the definition of the MDP, a reward _must_ be associated with this transition; otherwise you are not working in a proper MDP. This is true for the game of Go as well. I believe the work by DeepMind just assigned a score of +1 for winning and -1 for losing, and every intermediate step was assigned a reward of 0. – David Apr 05 '23 at 10:23

- @DavidIreland Yeah, so to fit into the MDP framework, one can assign a dummy reward of 0 to the intermediate states. – Sam Apr 06 '23 at 14:06
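
To make the point from the comments concrete, here is a minimal sketch of how $\hat{A}_{t}$ could still be computed at every step when intermediate rewards are set to 0. It assumes generalized advantage estimation (GAE), which is one common estimator and not necessarily the one the asker has in mind. The function name `gae_advantages`, the five-step episode, and the all-zero critic values are hypothetical and chosen only for illustration.

```python
def gae_advantages(rewards, values, gamma=0.99, lam=0.95):
    """Advantage estimates for one finished episode (GAE sketch).

    rewards[t] is the reward for step t; values[t] is the critic's
    estimate V(s_t). The value after the terminal step is assumed to be 0.
    """
    advantages = [0.0] * len(rewards)
    running = 0.0      # discounted sum of future TD errors
    next_value = 0.0   # V(s_{t+1}); 0 after the terminal step (assumption)
    for t in reversed(range(len(rewards))):
        # One-step TD error: defined at every t, even when rewards[t] == 0.
        delta = rewards[t] + gamma * next_value - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages


# Sparse episode: reward 0 on every step except the last (+1 for a win).
rewards = [0.0, 0.0, 0.0, 0.0, 1.0]
values = [0.0] * 5  # hypothetical untrained critic that predicts 0 everywhere

adv = gae_advantages(rewards, values, gamma=1.0, lam=1.0)
print(adv)  # [1.0, 1.0, 1.0, 1.0, 1.0]
```

With `gamma = lam = 1` and a zero critic, every step simply receives the final outcome as its advantage. With discounting, earlier steps get a smaller but still well-defined value, so the terminal reward is propagated backwards and no step is left without an estimate.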