I am taking an ML course and I am confused about some of the mathematical derivations.

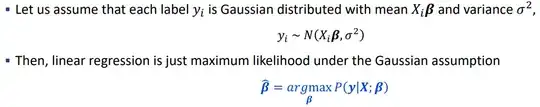

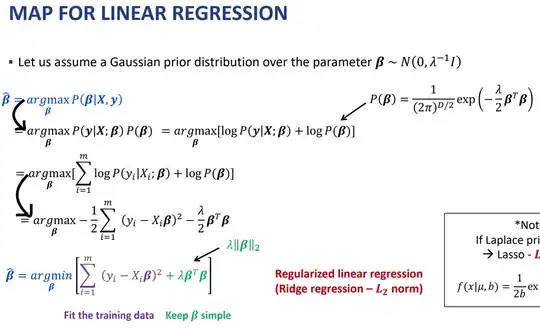

Could you explain the two steps I marked on the slides? For the first step, I thought $P(\beta|X,y) = \frac{P(X,y|\beta)P(\beta)}{P(X,y)}$, but I don't know how to get from there to the next step. Maybe I am confused by the conditional notation and the semicolon notation.