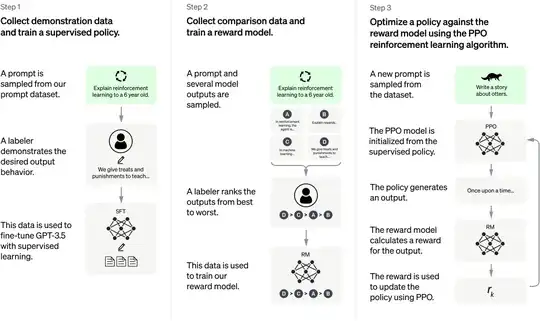

According to OpenAI, ChatGPT is trained in a 3-step process.

Are the steps where human AI trainers are involved, i.e. training the initial policy and providing the A>B>C>D grading as training sets for the reward model, the ONLY place where actual knowledge is entered? Are there no other learning steps where human trainers are NOT involved and the model learns from high-quality sources like authoritative texts? The sampled prompts in step 2 are supposed to cover EVERYTHING on the internet, so what happens when the trainers do not know anything about the topic? Did OpenAI invite domain experts to grade specific prompts-answer pairs?

Are the steps where human AI trainers are involved, i.e. training the initial policy and providing the A>B>C>D grading as training sets for the reward model, the ONLY place where actual knowledge is entered? Are there no other learning steps where human trainers are NOT involved and the model learns from high-quality sources like authoritative texts? The sampled prompts in step 2 are supposed to cover EVERYTHING on the internet, so what happens when the trainers do not know anything about the topic? Did OpenAI invite domain experts to grade specific prompts-answer pairs?

Asked

Active

Viewed 414 times

1

Rexcirus

- 1,131

- 7

- 19

Meatball Princess

- 113

- 3

-

This is impossible to answer as there is no more public information on how ChatGPT was trained. – Dr. Snoopy Mar 13 '23 at 11:15

-

Please edit the question to limit it to a specific problem with enough detail to identify an adequate answer. – Community Mar 13 '23 at 15:51

-

I think their prompt dataset contains half the Internet. – user253751 Mar 13 '23 at 22:08

1 Answers

1

Step 2 is (mainly) useful to tune the style, alignement and general feeling of the ChatGPT answers, not the specifics of what is answered.

The actual "knowledge" comes simply from ingesting a large corpora of text as training dataset and using unsupervised learning technques on it. This knowledge has been encapsulated in the GPT3.5 on which ChatGPT is built on. To be super clear: ChatGPT is a specialised version of GPT3.5.

Rexcirus

- 1,131

- 7

- 19