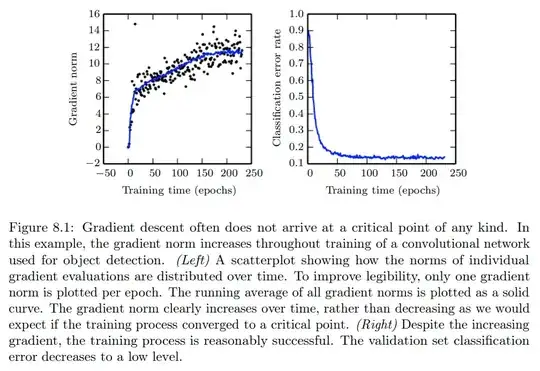

This is from the Deep Learning book by Ian Goodfellow, Yoshua Bengio, and Aaron Courville. I thought that when training converges well, the parameters should end up at a local minimum, where the gradient is zero. But the book says that training often does not arrive at a critical point. Could you explain why and how this can happen?
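To make the question concrete, here is a minimal sketch of the kind of check I have in mind: log the full-dataset gradient norm next to the loss during training and see whether the norm actually approaches zero. It is only a toy, assuming a 1-D linear regression trained with per-example SGD as a stand-in for a neural network; the names `make_data`, `loss_and_grad`, and `train` are made up for illustration and do not come from the book.

```python
import math
import random

def make_data(n=200, seed=0):
    # Synthetic data: y = 3x + 0.5 plus Gaussian noise (arbitrary choice).
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 0.5 + rng.gauss(0.0, 0.3) for x in xs]
    return xs, ys

def loss_and_grad(w, b, xs, ys):
    # Mean squared-error loss and its gradient over the FULL dataset.
    n = len(xs)
    loss = gw = gb = 0.0
    for x, y in zip(xs, ys):
        err = w * x + b - y
        loss += 0.5 * err * err
        gw += err * x
        gb += err
    return loss / n, gw / n, gb / n

def train(steps=2000, lr=0.1, log_every=200, seed=1):
    xs, ys = make_data()
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = []
    for step in range(1, steps + 1):
        # One SGD step on a single randomly chosen example.
        i = rng.randrange(len(xs))
        err = w * xs[i] + b - ys[i]
        w -= lr * err * xs[i]
        b -= lr * err
        if step % log_every == 0:
            # Record the full-batch loss and gradient norm at this point.
            loss, gw, gb = loss_and_grad(w, b, xs, ys)
            history.append((step, loss, math.hypot(gw, gb)))
    return history

history = train()
for step, loss, gnorm in history:
    print(f"step {step:5d}  loss {loss:.4f}  grad norm {gnorm:.4f}")
```

If the logged gradient norm stays clearly above zero while the loss has flattened out, that would be the situation I am asking about: training looks converged, yet the parameters are not at a critical point.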

Thank you