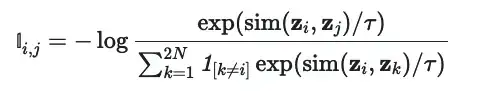

I'm a bit confused about the NT-Xent loss used in self-supervised contrastive learning.
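For reference, assuming the image above shows the standard formulation from the SimCLR paper (Chen et al., 2020), the loss for a positive pair $(i, j)$ in a batch of $N$ examples (hence $2N$ augmented views) is:

$$
\ell_{i,j} = -\log \frac{\exp\left(\mathrm{sim}(z_i, z_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbf{1}_{[k \neq i]} \exp\left(\mathrm{sim}(z_i, z_k)/\tau\right)}
$$

where $\mathrm{sim}(u, v) = u^\top v / (\lVert u \rVert \lVert v \rVert)$ is cosine similarity and $\tau$ is a temperature parameter. Because the mask only excludes $k = i$, the positive term $k = j$ appears in both the numerator and the denominator.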

What we are essentially doing is maximizing the similarity between the two augmented views of each image while minimizing their similarity to all the other instances in the batch. If $j$ is the index of the positive example for anchor $i$, I don't understand why it is included in the denominator. Shouldn't we use the indicator function $\textbf{1}_{\{k \neq i\} \cap \{k \neq j\}}$ instead?
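To make the two choices concrete, here is a minimal NumPy sketch (not a reference implementation) that computes the loss with either mask. It assumes a hypothetical batch layout where rows `2m` and `2m + 1` of `z` are the two views of sample `m`; the function name `nt_xent` and the flag `exclude_positive` are illustrative only. With the SimCLR mask $\mathbf{1}_{[k \neq i]}$, each term is an ordinary softmax cross-entropy over the $2N - 1$ candidates, so it is bounded below by $0$; with the proposed mask, the numerator is no longer part of the denominator and the loss can go negative.

```python
import numpy as np


def nt_xent(z, tau=0.5, exclude_positive=False):
    """NT-Xent averaged over all 2N anchors.

    Assumed (hypothetical) layout: rows 2m and 2m+1 of z are the two views of sample m.
    exclude_positive=False -> SimCLR denominator mask 1[k != i]
    exclude_positive=True  -> proposed mask 1[k != i and k != j]
    """
    n2 = z.shape[0]
    assert n2 % 2 == 0, "expects an even number of views (2N)"
    # Cosine similarity: normalize rows, then take dot products, scaled by temperature.
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = z @ z.T / tau
    partner = np.arange(n2) ^ 1  # positive index: 0<->1, 2<->3, ...
    losses = []
    for i in range(n2):
        j = partner[i]
        mask = np.ones(n2, dtype=bool)
        mask[i] = False  # never compare an anchor with itself
        if exclude_positive:
            mask[j] = False  # drop the positive from the denominator
        log_denom = np.log(np.exp(sim[i, mask]).sum())
        losses.append(-(sim[i, j] - log_denom))  # -log(numerator / denominator)
    return float(np.mean(losses))


# Two samples whose views coincide, with orthogonal embeddings across samples.
z = np.array([[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]])
print(nt_xent(z))                         # SimCLR mask: positive, about 0.24
print(nt_xent(z, exclude_positive=True))  # proposed mask: negative
```

In this toy batch every anchor has positive similarity $1/\tau = 2$ and two negatives with similarity $0$, so the SimCLR version evaluates to $\log(e^2 + 2) - 2$, while the variant gives $\log 2 - 2$.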