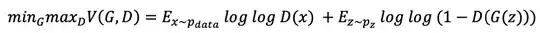

In the book Generative AI with Python and TensorFlow 2 from Babcock and Bali (page 172), it is stated that the value function of a GAN is the following:

where D(x) is the output of the discriminator and G(z) is the output of the generator. However, I don't understand why there is a product of two logarithms. The value D(x) is supposed to be a probability, meaning that D(x) lies in the interval between 0 and 1. Taking that into account, log D(x) would be a negative number, so log log D(x) shouldn't exist, because the logarithm of a negative number is undefined over the real numbers.
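To make the concern concrete, here is a minimal numeric sketch of my reasoning. The value `d = 0.8` and the helper name `log_log_is_defined` are my own assumptions for illustration, not something taken from the book:

```python
import math


def log_log_is_defined(d: float) -> bool:
    """Return True if log(log(d)) can be evaluated over the reals."""
    try:
        math.log(math.log(d))
        return True
    except ValueError:
        # math.log raises ValueError for non-positive arguments
        return False


# Hypothetical discriminator output, assumed to be a probability in (0, 1)
d = 0.8

inner = math.log(d)  # natural log of a value below 1 is negative
print(inner < 0)
print(log_log_is_defined(d))
```

With any `d` strictly between 0 and 1, the inner logarithm is negative, so the outer logarithm has no real value.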

Can anyone shed some light on this? Is the function wrong, or is there something I am missing?