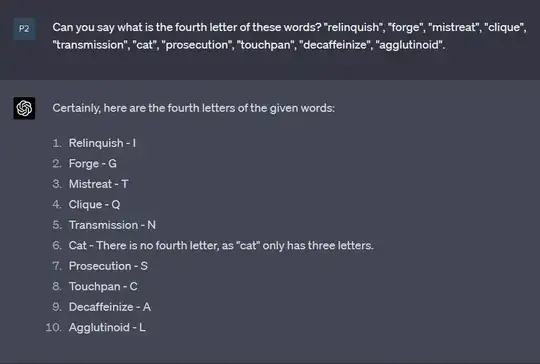

I understand that GPT models process input text by converting it into tokens and then into embedding vectors, rather than processing it letter by letter. Given this approach, I am curious how a model like ChatGPT can identify the first (or n-th) letter of a given word. Can anyone explain the underlying mechanism or provide any insights into this capability?


-

Please check the 'how can a transformer do X' section of the following post: https://ai.stackexchange.com/questions/40179/how-does-a-transformer-or-large-language-model-work/40180#40180. – Robin van Hoorn Apr 24 '23 at 07:32

-

It has to learn "cat" starts with "c" just like it has to learn that cats are animals. This is probably not a particularly difficult task since the Internet contains lists of words starting with letters. – user253751 Apr 24 '23 at 21:34

-

@user253751 I edited the post. Do you believe there is a list on the web indicating that "L" is the fourth letter of "agglutinoid"? – Peyman Apr 25 '23 at 04:53

-

I bet not. Thus, two possible options are: character processing, before or in parallel to tokenizing; data augmentation of the corpus, with generation of about one million sentences of the form "The [n]-th letter of [word] is [letter]". About one million would come from, say, 100,000 words × 10 characters/word. – Jaume Oliver Lafont Apr 25 '23 at 05:08

-

A more involved approach would come from using some positional encoding of words, for example if "cab" were encoded as 3×27^2 + 1×27 + 2. The n-th letter operation would then require integer division and a modulo operation on this numeric equivalent of the word. – Jaume Oliver Lafont Apr 25 '23 at 05:16
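As a rough illustration of the arithmetic in the comment above (not a claim about how any GPT model represents words), here is a minimal Python sketch. It assumes lowercase a-z words with a=1, ..., z=26 as base-27 digits; the function names `encode_word`, `word_length`, and `nth_letter` are made up for this example.

```python
def encode_word(word: str) -> int:
    """Encode a lowercase a-z word as a base-27 integer (a=1, ..., z=26)."""
    value = 0
    for ch in word:
        value = value * 27 + (ord(ch) - ord("a") + 1)
    return value


def word_length(code: int) -> int:
    """Count base-27 digits; valid because no letter maps to digit 0."""
    length = 0
    while code > 0:
        code //= 27
        length += 1
    return length


def nth_letter(code: int, n: int) -> str:
    """Return the n-th letter (1-indexed from the left) of the encoded word."""
    length = word_length(code)
    # Integer division drops the letters to the right of position n,
    # and modulo 27 isolates the remaining lowest digit.
    digit = (code // 27 ** (length - n)) % 27
    return chr(digit + ord("a") - 1)


print(encode_word("cab"))                     # 3*27**2 + 1*27 + 2 = 2216
print(nth_letter(encode_word("agglutinoid"), 4))  # l
```

With an explicit encoding like this, the n-th letter is one division and one modulo away; a subword tokenizer gives the model no such structure for free.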

-

@JaumeOliverLafont the chance that OpenAI did any of those things is approximately zero – user253751 Apr 25 '23 at 20:17

-

So? What are your thoughts about the question? In particular, do you think there are resources where "agglutinoid" is listed to have an L in fourth position? – Jaume Oliver Lafont Apr 26 '23 at 05:20

1 Answer

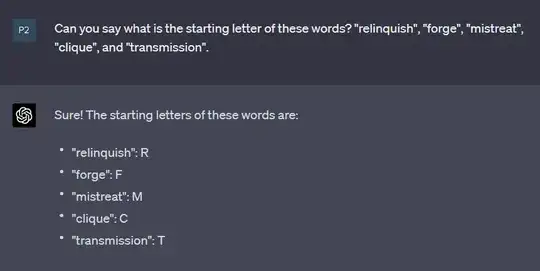

It's actually tokenizing each letter. That is the reason why asking it to count the number of letters in more than a few words will result in basically a guessing game, and it will more often than not result in wrong answers.

Now, if you prompt it to follow a more resource-demanding methodology, namely (1) identify the words of text x one by one, (2) number each letter within each identified word, (3) sum up the number of letters within that particular word, (4) start over with the next word, and (5) continue until you reach the end of the text, then it will only be able to process maybe 10 to 15 average-length words. It has to express the steps in concepts (tokens), including every single letter, then associate a number with each of them, and so on.
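The five steps above can be sketched in ordinary Python to show how much intermediate text such a trace produces. This is only an illustration of the procedure, written outside the model; the function names `count_letters_step_by_step` and `trace` are hypothetical, and "word" is assumed to mean a run of ASCII letters.

```python
import re


def count_letters_step_by_step(text: str):
    """Return (word, numbered_letters, letter_count) for each word in text."""
    report = []
    # Steps 1, 4 and 5: take the words one by one until the text ends.
    for word in re.findall(r"[A-Za-z]+", text):
        # Step 2: number each letter within the word.
        numbered = list(enumerate(word, start=1))
        # Step 3: the count for this word is the number of numbered letters.
        report.append((word, numbered, len(numbered)))
    return report


def trace(text: str) -> str:
    """Write the whole procedure out as text, the way a model would have to."""
    lines = []
    for word, numbered, count in count_letters_step_by_step(text):
        spelled = ", ".join(f"{i}={ch}" for i, ch in numbered)
        lines.append(f"{word}: {spelled} -> {count} letters")
    return "\n".join(lines)


sample = "How many letters"
print(trace(sample))
print(len(sample), "input characters vs", len(trace(sample)), "trace characters")
```

The written-out trace is several times longer than the input, which is consistent with the observation that the step-by-step approach uses up the available output quickly.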

When you do the above, you will see GPT-4 either simply cut it short and say, "[a]nd this is how you continue until you finish", or, if you keep insisting that it go through the entirety of text x, run out of tokens and leave the output unfinished, at which point you have to prompt again with "Continue", "carry on", etc.

This would mean either that GPT-4 in ChatGPT does not actually have 4,096 tokens available (it does!), or that these tasks are extremely token-demanding.

This is a high-level understanding of the issue, but it generally points to the fact that the model doesn't work the answer out from a pre-existing "database" that was somehow hardcoded into it. It doesn't look the result up, which shows in the initial wrong answers, when it more or less guesses instead of following a strict methodology to avoid errors. (If it did have such a database, the initial answer would be correct, and it wouldn't be so resource-demanding to actually run the "math" for this task.)

If needed, I can provide an entire example chat of this behavior.

-

“It's actually tokenizing each letter”—where did you get this from? I find it [unlikely](https://en.wikipedia.org/wiki/Byte_pair_encoding). – HelloGoodbye Jul 22 '23 at 01:36
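For readers unfamiliar with the byte pair encoding linked in the comment above, here is a toy, character-level sketch in Python. It is a simplification for illustration only: the tokenizers used by GPT models work on bytes with a large learned vocabulary, and the function names and the tiny corpus below are invented for this example.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return out


def learn_bpe(words, num_merges):
    """Learn merge rules by repeatedly merging the most frequent adjacent pair."""
    corpus = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in corpus.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        corpus = Counter({tuple(merge_pair(s, best)): f for s, f in corpus.items()})
    return merges


def tokenize(word, merges):
    """Apply the learned merges, in order, to a single word."""
    symbols = list(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return symbols


toy_corpus = ["cat"] * 5 + ["cab"] * 3 + ["car"] * 2
merges = learn_bpe(toy_corpus, num_merges=2)
print(tokenize("cat", merges))  # ['cat']
print(tokenize("cab", merges))  # ['ca', 'b']
```

After only two merges the frequent word "cat" is already a single token, so its individual letters are no longer visible as separate symbols. This is the reason the per-letter tokenization claim looks unlikely.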

-

Well, the language model doesn’t decide itself how the tokenization should happen, and the tokenizer doesn’t know whether the language model is trying to count letters, so that cannot be the case. – HelloGoodbye Aug 25 '23 at 19:06