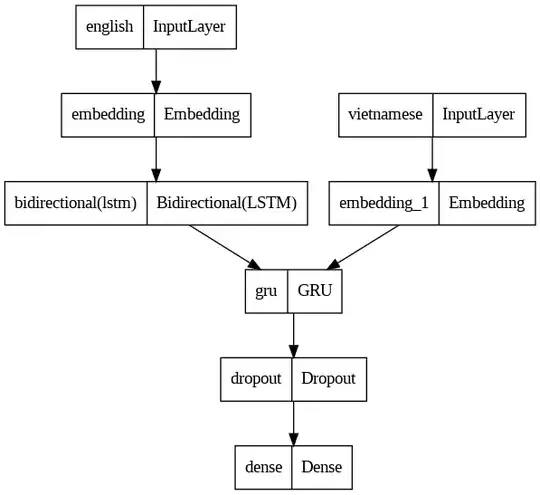

I am practicing machine translation using seq2seq model (more specifically with GRU/LSTM units). The following is my first model:

This model first archived about 0.03 accuracy score and gradually improved after then. It seems normal.

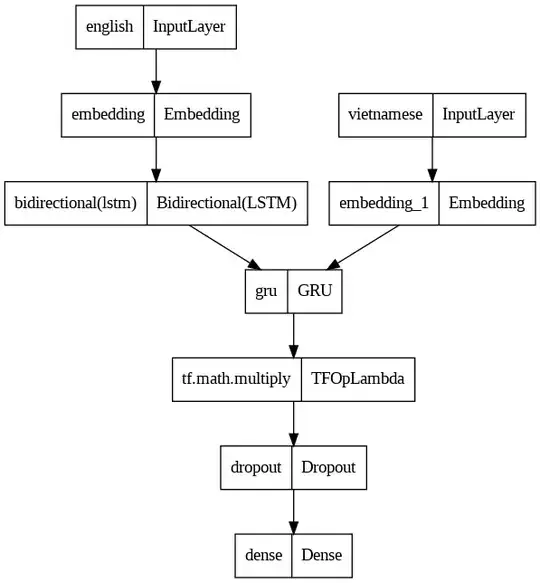

But when I multiply the decoder's GRU output by 2 (as the following picture) ,

the model accuracy becomes so good (>0.9) at the very first epoch of training process (more specifically the first batch). I think it must be wrong somewhere. Can anyone give me an explaination for this?

,

the model accuracy becomes so good (>0.9) at the very first epoch of training process (more specifically the first batch). I think it must be wrong somewhere. Can anyone give me an explaination for this?