I have a deep learning regression model that predicts some values. The results are fine when I use the model in evaluation mode (`model.eval()`), but when I switch to training mode (`model.train()`) the model tends to overestimate. (I need training mode because I want to use Monte Carlo Dropout to estimate the uncertainty of each predicted value.)

My regression model looks like this (I use PyTorch 2.0):

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class Regression(nn.Module):
        def __init__(self, num_outs):
            super().__init__()
            self.fc1 = nn.Linear(1024, 512)
            self.fc2 = nn.Linear(512, 256)
            self.fc3 = nn.Linear(256, num_outs * 3)
            self.bn1 = nn.BatchNorm1d(512)
            self.bn2 = nn.BatchNorm1d(256)
            self.dropout = nn.Dropout(0.2)

        def forward(self, x):
            # Dropout is applied to the input features only
            x = self.dropout(x)
            x = F.relu(self.bn1(self.fc1(x)))
            x = F.relu(self.bn2(self.fc2(x)))
            x = self.fc3(x)
            return x
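
For context, here is a minimal sketch of the kind of Monte Carlo Dropout prediction I have in mind. The helper name `mc_dropout_predict`, the small stand-in model `toy_model`, and the sample count are assumptions made for illustration only, not my actual code or data.

```python
import torch
import torch.nn as nn


def mc_dropout_predict(model: nn.Module, x: torch.Tensor, n_samples: int = 50):
    """Hypothetical helper: run several stochastic forward passes and
    return the per-output mean and standard deviation."""
    was_training = model.training
    # train() enables Dropout. It also switches BatchNorm layers to batch
    # statistics, and their running statistics are updated on every pass.
    model.train()
    with torch.no_grad():
        samples = torch.stack([model(x) for _ in range(n_samples)])
    model.train(was_training)  # restore the previous mode
    return samples.mean(dim=0), samples.std(dim=0)


# Stand-in model with the same layer types (Dropout, Linear, BatchNorm1d),
# shrunk so the example runs quickly on random data.
torch.manual_seed(0)
toy_model = nn.Sequential(
    nn.Dropout(0.2),
    nn.Linear(16, 8),
    nn.BatchNorm1d(8),
    nn.ReLU(),
    nn.Linear(8, 3),
)
toy_model.eval()

x = torch.randn(32, 16)
mean, std = mc_dropout_predict(toy_model, x, n_samples=20)
print(mean.shape, std.shape)
```

The standard deviation across the passes is what I would use as the uncertainty estimate for each predicted value.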

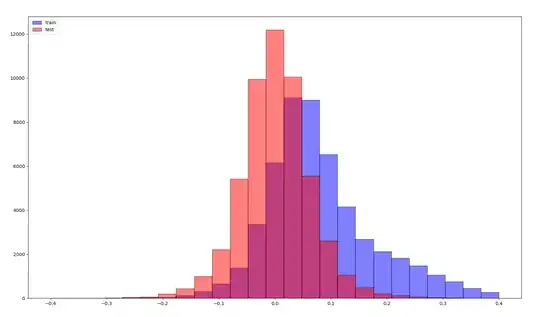

If I plot the training-mode and evaluation-mode errors, I get this histogram. As expected, the error in evaluation mode is normally distributed around 0. However, the mean of the error in training mode is shifted to the right, which shouldn't happen. While researching I read this article, which describes a similar effect but the other way around. That makes sense to me, but my behaviour is really strange, since the model is optimized in train mode. I train and test on different datasets, but I get the same effect when I test on my training dataset.
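
A rough sketch of how such a comparison can be set up is shown here. The helper `signed_errors`, the stand-in `toy_model`, and the random tensors are hypothetical placeholders for my real model and data; only the mode switch between the two calls matters.

```python
import torch
import torch.nn as nn


def signed_errors(model, inputs, targets, train_mode):
    """Hypothetical helper: return prediction minus target, flattened,
    with the model temporarily put in train or eval mode."""
    was_training = model.training
    model.train(train_mode)  # train(False) is equivalent to eval()
    with torch.no_grad():
        errors = model(inputs) - targets
    model.train(was_training)  # restore the previous mode
    return errors.flatten()


# Stand-in model and random data, used only so the sketch is runnable.
torch.manual_seed(0)
toy_model = nn.Sequential(
    nn.Dropout(0.2),
    nn.Linear(16, 8),
    nn.BatchNorm1d(8),
    nn.ReLU(),
    nn.Linear(8, 3),
)
toy_model.eval()
inputs = torch.randn(64, 16)
targets = torch.randn(64, 3)

eval_err = signed_errors(toy_model, inputs, targets, train_mode=False)
train_err = signed_errors(toy_model, inputs, targets, train_mode=True)

# A positive mean corresponds to overestimation on average.
print(f"eval-mode mean error:  {eval_err.mean().item():+.4f}")
print(f"train-mode mean error: {train_err.mean().item():+.4f}")
```

The two error vectors are what goes into the histogram, one distribution per mode, computed on the same inputs.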

I would be very thankful if someone could point me in the right direction to tackle this problem.