It is just one huge model which performs autoregressive text generation.

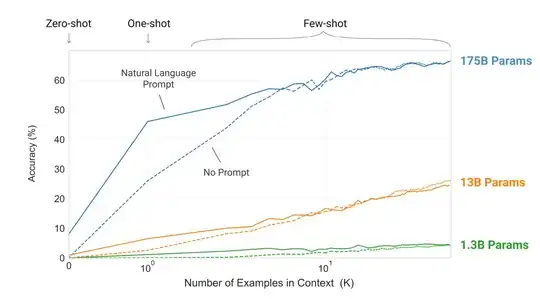

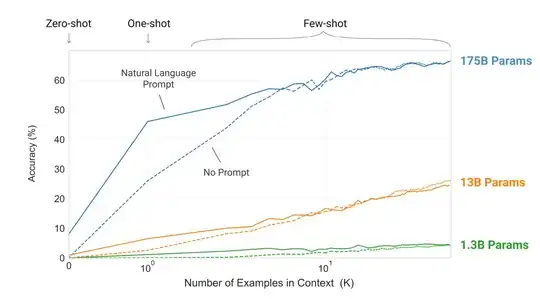

The ability to perform a wide variety of task, defined at inference time is called in-context learning and was introduced in the GPT-3 paper.

The underlying idea is that during self-supervised pretraining, the model "sees" a huge variety of sequences and tasks, and learns to recognize very high-level patterns which identify the tasks (i.e the model is able to recognize the task even if we specify a different syntax ), and how the tasks are performed. This knowledge is used at inference time to perform the correct task.

This ability is largely dependent with model size and emerges at hundreds of billions of parameters

The task to perform is inferred from the context, i.e the text which specifies the desired task.

The context may be composed of a description of the task, followed by

- A few examples of desired output (few-shot approach)

- Only of the structure the output needs to follow (zero-shot approach).

(Maybe it's easier to answer the question if there is a specific and specifically trained transformer for each supported language.)

A huge problem is that you need a specific, large dataset for each language. That means if you want to obtain a similar performance in all language, you should have a dataset of roughly the same size in each language, but it is not the case for many datasets. For example, C4 dataset has 4B english samples, but only 545M in German. One way Large Language Models can overcome this issue is by exploiting the knowledge provided by many languages, learning to reason and align this knowledge in the destination language.

BTW: Why is the task "to follow instructions" (which InstructGPT is said to be specialized for) a task on its own? Isn't every prompt an instruction in a sense, instructing Chat GPT to perform some downstream task?

Yes, but there are many ways to answer, and there is no standard way to define what a "good" answer is since it may be task and context-dependent, so it would be hard to train a good model which gives high-quality responses to a wide variety of tasks.

To add these additional supervising signals of "goodness", they used Reinforcement Learning, avoiding to define a specialized loss for each task (which could be intractable) and using human feedback instead.This is a very important part of ChatGPT and explains its better performance compared to GPT-3.