Could you provide the explanation of Figure 4 from the paper at Improved Denoising Diffusion Probabilistic Models?

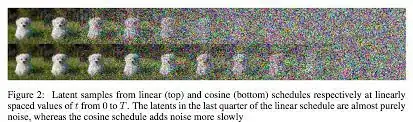

(1) The paper says, "the end of the forward noising process is too noisy,and so doesn’t contribute very much to sample quality". But if the goal is to have an image with only noise, why is it problematic to have a lot of noise?

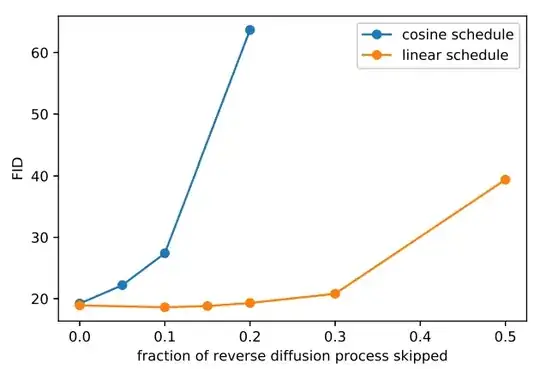

(2) The paper says, "a model trained with the linear schedule does not get much worse (as measured by FID) when we skip up to 20% of the reverse diffusion process". But why is it the reverse diffusion process? Shouldn't the deterioration be related to the forward process?

(3) Also, why is the training process relevant?

It seems to me that the explanation of this section means 'linear noise schedule is better than cosine one'. Could you explain, please?