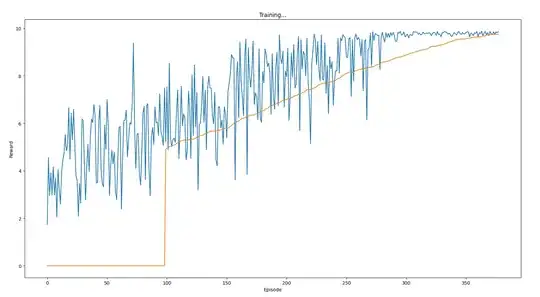

I'm currently training a DQN agent with an epsilon-greedy exploration strategy: epsilon decays linearly to 0 over the first 300 episodes and then stays at 0 for the remaining 50 episodes. Since epsilon is 0 in those last 50 episodes, I expected the agent to select the same actions in every one of them. That does not seem to happen: as the graph above shows, the reward still varies from episode to episode over the last 50 episodes. The blue curve is the reward for each episode, and the orange curve is the average reward over the last 100 episodes.
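For reference, the schedule described above might look roughly like the sketch below. It is not my actual training code. The starting value `EPS_START = 1.0` is an assumption (the post does not state it), and `epsilon_at` and `select_action` are hypothetical helper names. Q-values are passed in as a plain list instead of coming from a network.

```python
import random

# Assumption: epsilon starts at 1.0 (the starting value is not stated in the post).
EPS_START = 1.0
DECAY_EPISODES = 300   # epsilon reaches 0 after this many episodes
TOTAL_EPISODES = 350   # 300 decay episodes + 50 episodes with epsilon = 0


def epsilon_at(episode: int) -> float:
    """Hypothetical helper: linearly decay epsilon from EPS_START to 0 over
    DECAY_EPISODES (0-based episode index), then hold it at 0."""
    fraction = min(episode / DECAY_EPISODES, 1.0)
    return EPS_START * (1.0 - fraction)


def select_action(q_values: list[float], epsilon: float, rng: random.Random) -> int:
    """Hypothetical epsilon-greedy selection: with probability epsilon pick a
    uniformly random action, otherwise pick the action with the highest Q-value."""
    if rng.random() < epsilon:  # rng.random() is in [0, 1), so never true when epsilon == 0
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


if __name__ == "__main__":
    rng = random.Random(0)
    for ep in (0, 150, 300, TOTAL_EPISODES - 1):
        print(ep, epsilon_at(ep))
    print(select_action([0.1, 0.9, 0.3], epsilon_at(320), rng))
```

With this schedule, episodes 300 to 349 all get epsilon = 0, and `select_action` then always returns the argmax of whatever Q-values it is given.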

Is increasing the number of episodes after epsilon reaches 0 the right fix for this? I have not tried it yet because each episode takes approximately 20 seconds, so training would become very long. Thanks in advance!