I've been using AI image generation for a while now, and I've noticed how profoundly AI doesn't seem to see the image as a whole, sometimes generating an image with parts of fingers floating near objects supposed to be being held, VERY warped perspective, and of course, extra fingers and occasionally legs. I also notice that by negative prompting 'extra/distorted limbs' (or similar)it often solves the problem, so it appears that there must be some sort of awareness of what is happening in the image. Stable Diffusion being open source and thus available for analysis, why would AI specifically have this as a common problem?

-

There are lots of image-generating technologies, making this question very broad. Perhaps pick a single popular and accessible image generation model? Stable Diffusion might be a good choice to get a factual answer, since it is open-source, so no-one has to guess at what proprietary systems might be in use. It is also one of the more popular recent releases of image generators – Neil Slater Aug 28 '23 at 14:02

-

1@neilslater The problem is, I'm using a forum, NightCafe, which hosts many, from Dall-e to Stable Diffusion. I have noticed the 'dismembering' on SD, so I suppose that'd work. An edit is on it's way. As well as some further points. thanks! – ben svenssohn Aug 28 '23 at 14:05

-

3Stable Diffusion (and the others) don't "see" anything. Simply put, they are iteratively denoising pure noise until it "makes sense". It's not like it created a 3D space or layers of foreground and background. It is guided by the prompt because it was trained to use that as part of the denoising context, but it doesn't really "understand" anything. – Camilo Martin Aug 28 '23 at 23:17

-

1Presently generative AI for images does not understand the meaning of fingers as physical 3D anatomical structures that have a range of mobility/posability. It only has a bin of imagery to draw from that is *suggestive* of fingers. Kind of like how you can't look at your hands in a dream and make sense of their detail (one method people use to go "lucid"). To correlate the relationship between a 3D mesh and a 2D image, a dataset of 2D/3D pairings must be provided. If trying to teach a machine what the physical world *feels* like, touch sensor data is needed etc, to expand domain of experience. – Mentalist Aug 29 '23 at 01:27

1 Answers

Let's start from the problem associated with generating details in images, probably as old as computer vision itself.

Consider an image of a person of classic size 512x512 pixels, one finger in that image will probably consists only of 10 of those pixels. Whatever loss you might use, the contribution coming from the reconstruction of 10 pixels will be most of the time irrelevant compare to the contribution coming from other elements in the image. Let's also remember that most architectures today rely on convolutions, meaning that those 10 pixels will be averaged together trough some pooling operation, making them count even less. This should give the overall idea about way in general even state of the art models fails with high details.

Diffusion models add even more complexity to the overall picture because they start from random noise and they iteratively subtract a different noise distribution learned during training to produce something that make sense. So not only they have the hard task to learn details, they also have to learn to distinguish between noise that's actual noise to remove, and details that sometimes do resemble noise but that we want to keep.

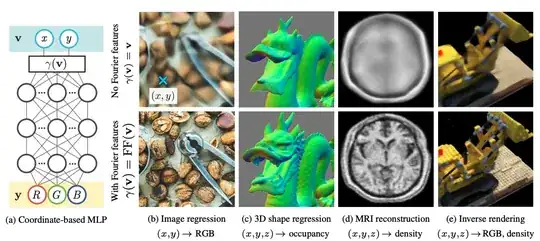

To understand the connection between noise and details it useful to know that in the literature image details are often referred to as high frequency functions. It's actually an on point definition rather than just an analogy. The frequency of a wave relates to its wavelength, basically how much space it need to complete a full cycle, in an image you can think of the frequency of an element exactly the same way, i.e. how much space in the image the element need to end or repeat itself (I know it more complicated than this, but we care about the gist). This very nice paper shows how it become suddenly easy for simple architectures to reconstruct high details when random Fourier transformation are used for feature extraction (basically high frequency random functions). The image below is taken from the paper. This unfortunately comes with huge increase in computational power and it not a real feasible solution for large models like stable diffusion.

One last consideration should be done about the negative prompts. Unlike purely visual models, text to image models try to learn a semantic latent space, i.e. they try to associate specific latent vectors with elements that the output image have (during training) or should have (during inference). Moreover, this latent space for the most part have nice geometric properties, like vectors of word of opposite meaning have opposite direction. Now, stating that something should be in the generated image gives absolutely total freedom to a model about extra elements that could be potentially present. The weights of a model are always used all at once, the text prompt simply causes some weight vectors in the latent space to have more impact than others. This is why negative prompt are an easy solution to remove artefacts (to some extent), you're just constraining the model more. The model has already learned that most of the times a hand has 5 finger, but since a hand it's a rather detailed element to generate and because it's not explicitly sated in the prompt that we want 5 fingers, a model has more than enough space for random manoeuvre to end up generating aberrations.

- 5,153

- 1

- 11

- 25