Last semester I, along with my team, made a project on OCR.

Note: I am assuming the data set for your pictures has white

background with black (or some other dark text) on it.

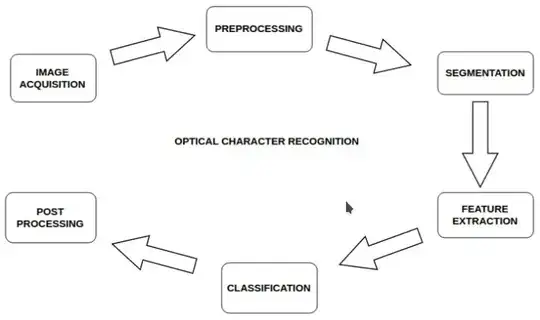

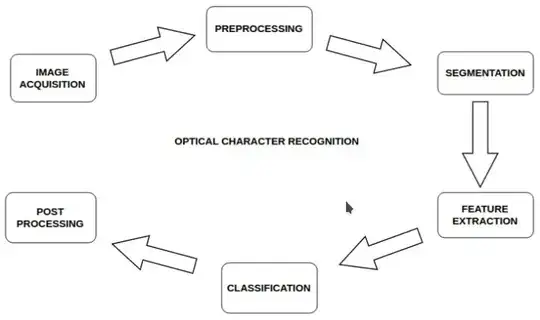

These are the overview steps we followed:

Pre-processing includes grayscale conversion, noise reduction,

binarization and skew detection.

Next step was segmentation. This process extracts the individual

characters from the image. Histogram taken along the y-axis divided

the image into lines. This is followed by histogram along the x-axis

which divided them into words and further into characters.

At the end of the step, we used Savgol filter to smooth the curves of the histogram.

Next step was feature extraction. This is the most important step.

The accuracy of your code depends on how well your features are.

We used the following features:

- Crossing: Counting number of transitions between foreground and background. We used

two diagonal lines, two horizontal and one vertical line. You can used any number you want.

- Zoning: Whole character region is divided into 16 zones, and density of each zone is measured.

- Projection Histogram: Each character has unique (almost) vertical and horizontal histogram signature.

- Other features include number of endpoints in the character, number of loops and horizontal/vertical line count.

We used three different classification algorithms for our project. They were KNN (K-Nearest Neighbours), Artificial Neural Network (ANN) and Extra Tree classification. Their F1 score was 0.84, 0.82 and 0.77 respectively.

For training, you will need to find datasets. Many data sets for OCR was available online. Make sure you are using good ones.