Trying to understand the VGG architecture and I have these following questions.

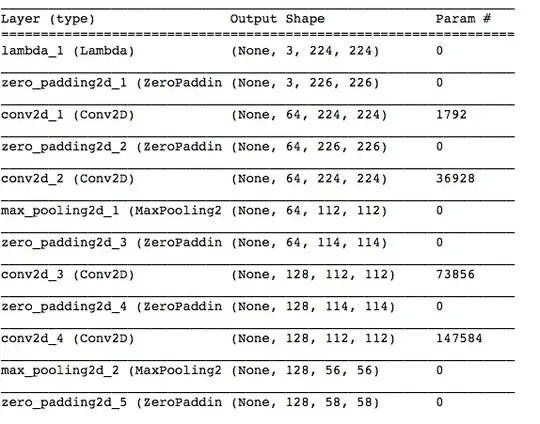

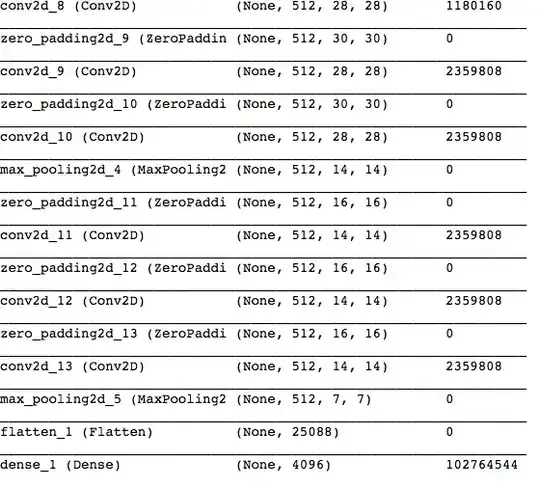

- I understand the general understanding of increasing filter size is because we are using max pooling and so its image size gets reduced. So in order to keep information gain, we increase filter size. But the last few layers in the VGG architecture, the filter size remained same when vgg was max pooling from 14x14 to 7x7 image size, the filter size remained same at 512x512. Why wasn’t there the need to increase filter size there?

- Also few consecutive layers, in the end, was constructed with both same filter and image size, those layers were built just to increase accuracy? (experimentation?)

- And I couldn’t wrap around that visualization at final filters have the entire face as the feature as I understood through convolution visualizing (Matt Zieler video explanation). But max pooling causes us to see only a subset part of the image right? When filter size is 512x512 (face as the filter/feature) the image size as 7x7, so how does entire face as a filter will work on images when we are moving over small subset of the image pixels?