I have a binary classification problem, where a false positive error has a very big cost compared to the false negative error.

Is there a way to design a classifier for such problems (preferably, with an implementation of the algorithm)?

I have a binary classification problem, where a false positive error has a very big cost compared to the false negative error.

Is there a way to design a classifier for such problems (preferably, with an implementation of the algorithm)?

There is no predefined classifier for any problem. Two main features of a classifier are

Since your problem statement requires a huge cost for falsely classifying a particular class one approach will be.

You have to define a cost function that will penalize hugely for misclassifying for that class only. So your cost function will be $J$ and $J'$ put together. You can look up the cost function of a logistic classifier to see how two separate cost functions are merged together here.

The second approach can be (assuming you are using supervised learning), the learning rate $\alpha$ for both the classes should be different. The larger learning rate will be for the one which is the more important class, since you don't want to classify it improperly (increasing $\alpha$ compared to the other class will reduce or risk of misclassifying it). The exact learning rate depends from case to case.

Thus, I have tailored the two main features of the classifier to solve this problem:

The cost function.

The weight update scheme (by changing learning rate for different cases).

@DuttaA has pretty much mentioned the two most appropriate approaches to having this facility. Either the penalty of false positives should be high or the learning rate for the correct class should be high.

I'll give two real-life examples to help you understand it better.

Say you have to teach a teen that substance abuse is injurious to health (eg. Frequent smoking is a negative habit). But the teen ends up learning from high effects of the drugs that it is good (false positive) and gets addicted to it. You would strictly want to avoid this kind of a situation (false positive error having a very big cost as compared to false negative error).

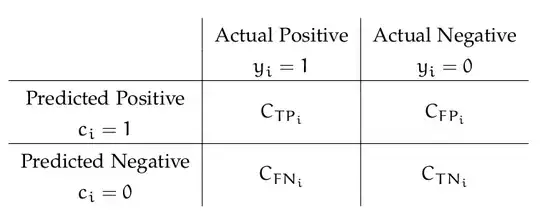

In general, to model the situation when the costs are different, we picture a cost matrix. For a two-class classification problem, the cost matrix would look like:

(courtesy: http://albahnsen.com/CostSensitiveClassification)

(courtesy: http://albahnsen.com/CostSensitiveClassification)

Now, when designing your cost function, you would want to take into account the weight corresponding to each of the situation. A simple python code would be as follows:

def weighted_cost(pred, act):

if pred==P and act==P:

return C_TP * cost(pred, act)

if pred==P and act==N:

return C_FP * cost(pred, act)

if pred==N and act==P:

return C_FN * cost(pred, act)

if pred==N and act==N:

return C_TN * cost(pred, act)

Where, pred is the predicted class and act is the actual class. Here, C_TP, C_FP, C_TN, C_FN represent the weights of true positive, false positive etc. The cost(pred, act) function will calculate the loss of one training example. You would want to use the weighted_cost function for finding the loss after one training example.

The second approach that @DuttaA mentioned was to vary the learning rate. In real life, you can relate this to the situation when you were asked to write a word multiple times if you forget its spelling so that you remember it better. In a way, you learn the correct spelling of the word.

Here, increasing the value of the learning rate (say 4 x alpha) for a class can be viewed as updating the value of the weights multiple times (4 times) with the old learning rate (alpha), similar to what we do by writing the correct spelling of the word multiple times. So, a more important class (in your case it will be the Negative Class) should be given more alpha, because a false positive (misclassification of the negative class) has a high penalty. You learn to recognize the correct (negative) class by learning it more number of times (as in the case of learning the spelling of the word).

Let me know if you need any further clarification.

A funky way of doing this with less overhead is to just over fit the data up to some degree. The reason is when you try to over fit the data with the classifier the classification bound tends to wrap around the clusters very tightly and with that model you can some times miss classify positive classes as negative(due to high variance) but there are comparatively less situations where you end up miss classifying negative classes as positive. The level of overfitting that needs to be performed is just based on your FP and FN trade off.

I don't think this as a permanent fix but can come handy up to some extent.

One more idea - I recall learning about Neyman-Pearson task in my studies. It is a statistical learning method for binary classification problem where overlooked danger (false negative error) is much unwanted.

You set a desired threshold for false negative error rate and then minimize false positive error. You just need to measure conditional probabilities of each class. It may be expressed as a linear program and solved to get the optimal strategy for the threshold of your choice.

You could vary an error coefficient in training. For example, if the expected output was negative and it gave some value in the positive you can train on C * ERROR and conversely if the expected output was positive and it gave some value in the negative you can train on just the error, that way false positives have more impact on the model as opposed to false negatives.

Varying learning rates could as well help, however, increasing the learning rate and increase the error have different effects because changing the error will change the direction of the gradient whereas changing the learning rate will only change the gradient's magnitude of effect on the network, two slightly different things.

(for the learning rate, split the data into two, positive and negative, then train them separately with the learning rate for negative cases larger than for positive cases)