The result of gradient descent algorithm is a vector. So how does this algorithm decide the direction for weight change? We Give hyperparameters for step size. But how is the vector direction for weight change, for the purpose of reducing the Loss function in a Linear Regression Model, determined by this algorithm?

-

Welcome to AI.se...The question requires an answer of conceptual nature which can be too lengthy, i have provided a few links in my answer, but I suggest you to explore the internet for an even better understanding of why gradient descent actually works. – Mar 14 '18 at 08:12

2 Answers

First thing is that what does gradient descent do? Gradient Descent is a tool of calculus which we use to determine the parameters (here weights) used in a Machine Learning algorithm or a Neural Network, by running the Gradient Descent algorithm iteratively.

What does the vector obtained from one iteration of gradient descent tell us? It tells us the direction of weight change (when weights are treated as a vector) for the maximum reduction in the value outputted by a loss function. The intuition behind why Gradient Descent gives such a direction can be found here:

- Why the gradient is the direction of steepest ascent - Khan Academy

- Why is gradient the direction of steepest ascent?

- Why does gradient descent work?

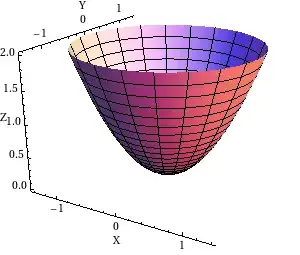

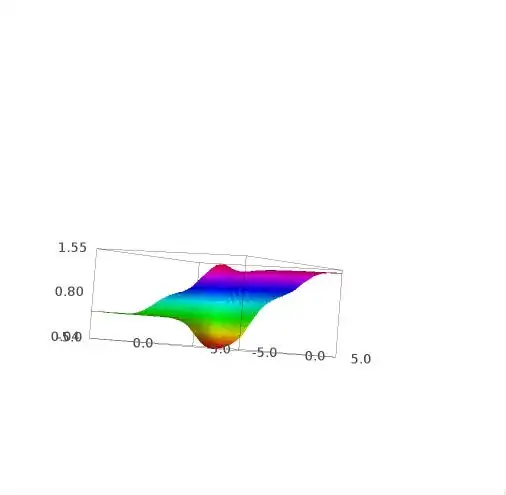

In general cases the cost/loss function is an n-dimensional paraboloid (we design function in such a way such that its convex).

A 2-D paraboloid with x and y as independent variables.

Now why do we reduce the weights in the direction of negative gradient only? Why not some other direction?

Since we want to reduce the cost in Linear Regression for better predictions we choose only the negative Gradient Descent direction, as it is the direction of steepest descent even though we could have chosen some other possible vector direction to reduce cost, but the negative gradient descent direction ensures that:

- Cost is always decreased (if the step size is correct and in general only in cases of convex functions e.g paraboloid).

- Cost is decreased by the maximal amount if we move in that direction, so we don't have to worry whether cost will decrease or not if we move in that direction.

Also we use learning rate alpha to scale by how much amount we want to decrease the weights.

EDIT: As pointed by @pasaba the error function may not be a paraboloid but in general a good cost function looks like a paraboloid with skewed axis.

-

@pasabaporaqui activation function is not the same as loss function..the equation of mse is equation of a paraboloid – Mar 16 '18 at 09:02

-

MSE is a parabola if the output of the net is taken as MSE argument, E(y), something useless in optimization of a NN. When working on optimization, the arguments to be taken into account are the weights and offsets, E( w_0, w_1, ... ), that is not a parabola, even if measured as MSE. By example, when activation function is sigmoid, It is more near to a superposition of continuous approximations of Heaviside functions. – pasaba por aqui Mar 16 '18 at 09:29

-

@pasabaporaqui i plotted the log cost function used in sigmoids and for 1 part I got 1 part of parabola on +ve x-axis and the other part gave me 2nd side of parabola on the negative x-axis....well not exactly parabola, but looked close to it....so I thought to say that cost functions are generally parabolid – Mar 16 '18 at 09:39

-

We can analyze a basic common example: approximation of AND logic gate by a NN.

The inputs to the NN will be "x1" and "x2" and its output is "y". The data to be learned by the NN is:

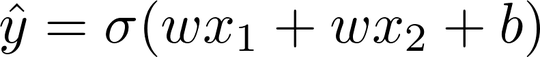

The basic NN has one intermediate cell with activation function sigmoid and one output cell identity function. That means:

(note by symmetry we assume w1=w2)

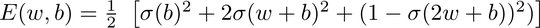

Then, the error is:

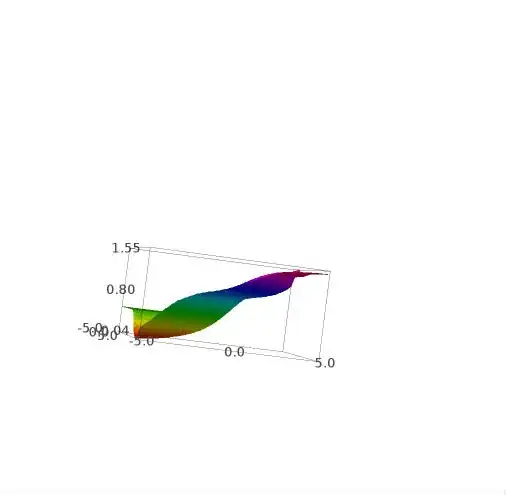

These are some plots of this function:

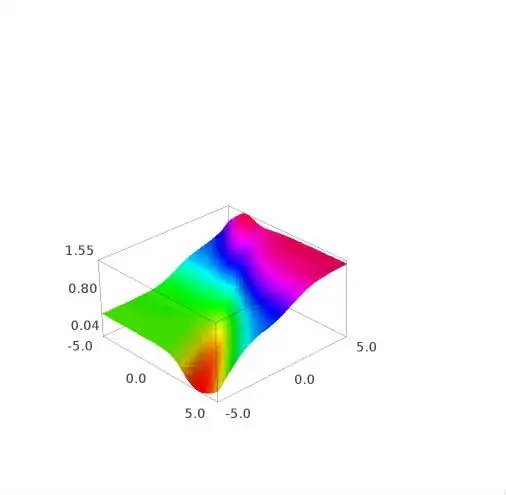

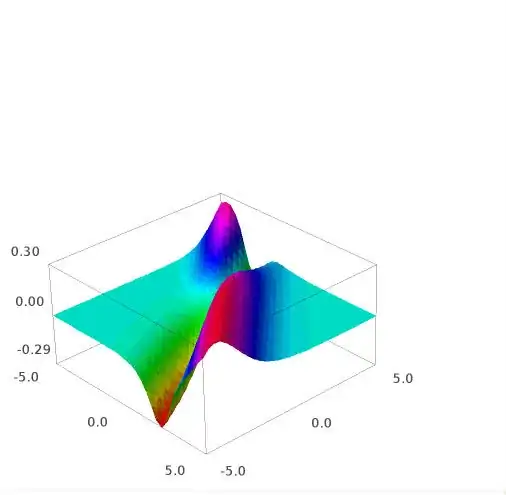

And this is the w derivate of e :

Sage Math code (link) for this graph:

s(x)=1/(1+e^(-1*x))

e(w,b)=(1/2)*(s(b)^2+2*s(w+b)^2+(1-s(2*w+b))^2)

plot3d(e(w,b),(w,-5,5),(b,-5,5),adaptive=True, color=rainbow(60, 'rgbtuple'))

edw=e.derivative(w)

plot3d(edw(w,b),(w,-5,5),(b,-5,5),adaptive=True, color=rainbow(60, 'rgbtuple'))

(PS: please, activate latex on this stack exchange).

- 1,282

- 6

- 21

-

Uh huh I never said mse for sigmoid is convex....I said the log error for sigmoid is convex, kind of paraboloid looking – Mar 16 '18 at 10:22