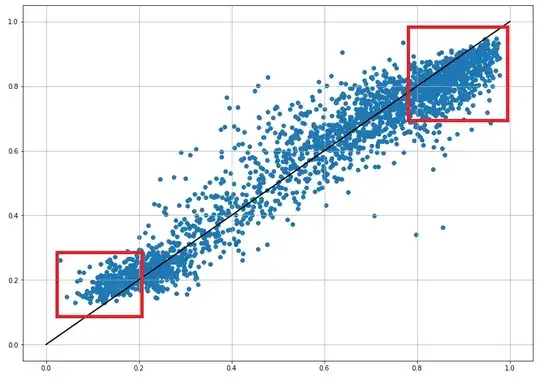

I'm training an LSTM network with multiple inputs and several LSTM layers in order to set up a time series gap filling procedure. The LSTM is trained bidirectionally with "tanh" activation on the outputs of the LSTM, and one Dense layer with "linear" activation comes at the end to predict the outputs. The following scatterplot of real outputs vs the predictions illustrates the problem:

Outputs (X-axis) vs predictions (Y-axis):

The network is definitely not performing too bad and I'll be updating the parameters in the next trials, but the issue at hand always reappears. The highest outputs are clearly underestimated, and the lowest values are overestimated, clearly systematic.

I have tried min-max scaling on inputs and outputs and normalizing inputs and outputs, and the latter performs slightly better, but the issue persists.

I've looked a lot in existing threads and Q&As, but I haven't seen something similar.

I'm wondering if anyone here sees this and immediately knows the possible cause (activation function? Preprocessing? Optimizer? Lack of weights during training? ... ?). Or, and in that case, it would also be good to know if this is impossible to find out without extensive testing.