Why is it a bad idea to have a momentum factor greater than 1? What are the mathematical motivations/reasons?

3 Answers

If gradient descent is like walking down a slope, momentum would be the literal momentum of the agent traversing the loss surface.

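Under that analogy, the momentum factor would be analogous to one minus the friction coefficient, with friction = 1 being max friction (momentum factor 0) and friction = 0 being no friction (momentum factor 1).

You should be able to see why friction can't go beyond that range: if friction = 1, all velocity is lost at every step, which is identical to having no momentum; if friction <= 0 (momentum factor >= 1), then by conservation of energy gradient descent will never settle into a local minimum, and with negative friction the agent even gains speed at every step; if friction > 1 (momentum factor < 0), the carried-over velocity flips sign and gradient descent would be moving backwards.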

- Will it be friction coefficient = 1? or only friction = 1 – May 21 '18 at 02:15

- The latter should be fine, as the term is well understood in the literature. – k.c. sayz 'k.c sayz' Jun 05 '18 at 01:35

If you only want the answer to your particular question, you can skip to the last part of this answer. To answer in detail: momentum is technically an incorrect term; I would rather call it inertial learning.

Inertia - Inertia is the resistance of any physical object to any change in its position and state of motion.

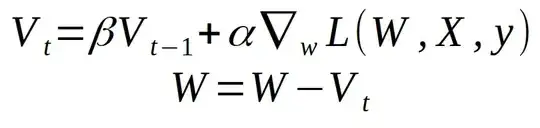

First, the weight change in the momentum learning method at a particular iteration is given by the equation:

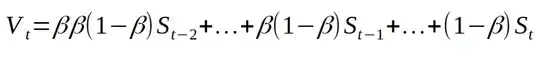

where beta is the momentum term. If we expand the expression we get something like:

Courtesy: Stochastic Gradient Descent with momentum

Here the S_t are the gradients (dels) for individual training examples. Clearly this expansion is for a training set of 3 examples.
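The expanded expression itself is not reproduced in the text. As a hedged sketch only, assume the update has the common exponentially weighted form below, with V_t the accumulated update and V_0 = 0 (both the symbol V_t and this exact form are assumptions, since the original equation is only available as an image). Unrolling three steps then gives:

$$
V_t = \beta V_{t-1} + (1-\beta)\, S_t, \qquad V_0 = 0
$$

$$
V_3 = (1-\beta)\left( S_3 + \beta\, S_2 + \beta^{2} S_1 \right)
$$

Under that assumption, each older gradient is multiplied by one more factor of beta, which is where the exponential weighting discussed next comes from.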

Now why do we use momentum? As @Andreas Storvik Strauman has provided a link, you can easily delve into the mathematics behind its usage. But to build a more intuitive sense, here are a few points to note:

- The exponentially weighted terms can be thought of as a memory of what you have learned so far. You don't want to forget it completely, so you keep revising it, with the weight of each revision decreasing over time. The contribution of an already-seen training example to the update keeps getting smaller and smaller, whereas in normal gradient descent it is not present at all.

- The momentum term can be thought of as playing a damping role: it does not allow the new training example to have its way completely. You can visualize this by taking 2 points and a straight line, with an update proportional to the distance between the line and the points, and then comparing normal gradient descent with momentum gradient descent. Gradient descent with momentum then behaves like a damped oscillation, which tends to improve its chances of converging.

- Inertial learning also helps when you reach a point on your loss curve where the slope is close to 0. Normal learning will produce very small weight updates there, but with inertial learning this region is crossed easily.

As for your original question of why the momentum term should be < 1, here are a few points which most answers have missed:

- First and foremost, if beta > 1, the weight given to previous training examples will increase exponentially (for example, 1.01^1000 ≈ 20959 after just 1000 iterations). That might be handled by adjusting the learning rate accordingly, but not only would that require a lot of extra computation, it is practically infeasible.

- Second, a geometric series with common ratio r >= 1 never converges; its partial sums just keep getting bigger. If you draw a parallel with continuous functions, this is what we call a function that is not absolutely integrable.

- Also, as per our previous intuition, why would one want to give a high weight to things learned previously? It may not even be important if you follow an online learning method (you look at each training example only once because there are so many of them).
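The first two points above can be checked numerically. The following is a small sketch; the helper names `geometric_weight` and `total_weight` are made up for illustration and are not from any library. It computes the weight beta^k given to a gradient seen k steps ago, and the partial sum of all such weights.

```python
def geometric_weight(beta: float, k: int) -> float:
    """Weight beta**k given to a gradient that was seen k steps ago."""
    return beta ** k


def total_weight(beta: float, n: int) -> float:
    """Partial sum 1 + beta + ... + beta**(n-1) of the geometric series."""
    return sum(beta ** k for k in range(n))


if __name__ == "__main__":
    for beta in (0.9, 1.0, 1.01):
        print(
            f"beta={beta}: weight of a 1000-step-old gradient = "
            f"{geometric_weight(beta, 1000):.4g}, "
            f"sum of first 1000 weights = {total_weight(beta, 1000):.6g}"
        )
```

For beta = 0.9 the sum settles near 1 / (1 - beta) = 10, while for beta = 1 it grows linearly and for beta = 1.01 it grows exponentially with the number of steps.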

All of this leads to a single conclusion: if beta >= 1, there will be a large amount of oscillation and the error will keep increasing exponentially (this can probably be proven by rigorous mathematical analysis), although it might work for beta = 1 (due to the Perceptron Convergence Theorem).
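A toy experiment can illustrate this conclusion. The sketch below is not a proof. It assumes the update form v <- beta * v + lr * grad, w <- w - v (an assumption, since the answer's own equation is only given as an image) and the one-dimensional loss f(w) = 0.5 * w^2. The function names are hypothetical.

```python
def run_momentum(beta, lr=0.1, steps=2000, w0=1.0):
    """Minimise f(w) = 0.5 * w**2 with v <- beta*v + lr*grad, w <- w - v.

    Returns the list of visited w values, starting with w0.
    """
    w, v = w0, 0.0
    history = [w]
    for _ in range(steps):
        grad = w                  # derivative of 0.5 * w**2
        v = beta * v + lr * grad  # accumulate the (assumed) momentum update
        w = w - v
        history.append(w)
    return history


def tail_amplitude(history, window=50):
    """Largest |w| over the last `window` steps, i.e. the oscillation size."""
    return max(abs(w) for w in history[-window:])


if __name__ == "__main__":
    for beta in (0.9, 1.0, 1.01):
        amp = tail_amplitude(run_momentum(beta))
        print(f"beta={beta}: oscillation amplitude after 2000 steps = {amp:.3g}")
```

In this particular toy setting, beta = 0.9 settles at the minimum, beta = 1 keeps oscillating around it with roughly constant amplitude, and beta = 1.01 oscillates with an amplitude that grows geometrically. Other losses and learning rates may behave differently.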

Let's talk about gradient descent!

Analogy:

So you're standing on a mountain side, and you want to get to the lowest part of this mountain. You have a notepad with you.

Although actual physics-momentum would be a good analogy here, I'm not gonna use it.

You're somewhere on this mountain side and you figure out which way is down*, and you jump once, a couple of meters in that direction. How big one jump is depends on how steep the hill is (the length of the gradient) and how much extra you push with your feet. The first time, you decide not to push that much with your feet. This is where SGD momentum comes in: you write down in your notepad which direction you went, and how far (e.g. south, 4 meters).

Note: here the PHYSICAL momentum would represent the length of the gradient.

You repeat this for some time, until you come to a place where every direction leads upwards.

Does this mean you hit the bottom? Not necessarily; you might have gotten stuck in a valley, or "local minimum". You really want to get out of this valley, but all directions are upwards, so which way should you jump?

You now take out your notepad and notice that you've been jumping south east for the last 40 steps, and pretty far. You then reason that it is likely that you want to keep going south east. So you jump south east with a lot of thrust from your feet. This is the intuition behind what momentum does:

If you have a clear "pattern" of which way is down, then this should also count!

Note: the momentum only depends on the previous step, but the previous step depends on the steps before that and so on. This is just an analogy.

Maths:

For the maths, you just add a term that is the last heading (the previous update), times some constant.

Heading(t)=γ Heading(t-1)+η Gradient(t)

Where γ is the momentum factor and η is the learning rate.
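As a minimal sketch of the heading update above, here is one way it could look in plain Python. The names `momentum_descent` and `bowl_gradient`, the test function f(x, y) = x^2 + 10 y^2, the step "position minus heading", and the values of γ and η are all assumptions chosen for illustration, not taken from the answer.

```python
from typing import Callable, List

Vector = List[float]


def momentum_descent(
    grad: Callable[[Vector], Vector],
    x0: Vector,
    gamma: float = 0.9,   # momentum factor
    eta: float = 0.05,    # learning rate
    steps: int = 300,
) -> Vector:
    """Repeat Heading(t) = gamma * Heading(t-1) + eta * Gradient(t),
    then move against the heading: x <- x - Heading(t)."""
    x = list(x0)
    heading = [0.0] * len(x)
    for _ in range(steps):
        g = grad(x)
        heading = [gamma * h + eta * gi for h, gi in zip(heading, g)]
        x = [xi - hi for xi, hi in zip(x, heading)]
    return x


def bowl_gradient(x: Vector) -> Vector:
    """Gradient of the illustrative bowl f(x, y) = x**2 + 10 * y**2."""
    return [2.0 * x[0], 20.0 * x[1]]


minimum = momentum_descent(bowl_gradient, [5.0, 2.0])
print(minimum)  # both coordinates end up very close to 0
```

The heading is the "notepad" from the analogy: it carries a decaying record of every earlier gradient into the current step.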

Sebastian Ruder's blog post on gradient descent is a brilliant place to learn more about the details of the maths.

γ ≷ 1

For mathematical conclusions:

Heading(t)=γ Heading(t-1)+η Gradient(t)

γ > 1: From the "expression", you could infer that this case would generate echoes: the previous step would contribute more than the current gradient. With every further step this effect gets amplified, and 10 steps down the road you're stuck going in one direction.

γ < 1 makes it "converge" to a "terminal velocity", if you like. It would depend on the preceding steps less and less instead of more and more.

These effects are pretty clear in the equation you find on Ruder's blog.
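The "terminal velocity" can be made concrete with a short worked case. As a simplifying assumption, take the gradient to be a constant g at every step and start from Heading(0) = 0; the shorthand H_t for Heading(t) is introduced here only for brevity. Unrolling the recursion gives a geometric sum:

$$
H_t = \gamma H_{t-1} + \eta\, g
\;\;\Longrightarrow\;\;
H_t = \eta\, g \sum_{k=0}^{t-1} \gamma^{k}
    = \eta\, g\, \frac{1-\gamma^{t}}{1-\gamma} \qquad (\gamma \neq 1)
$$

$$
0 \le \gamma < 1:\; H_t \to \frac{\eta\, g}{1-\gamma},
\qquad
\gamma = 1:\; H_t = \eta\, g\, t,
\qquad
\gamma > 1:\; H_t \text{ grows like } \gamma^{t}
$$

So under this assumption, γ < 1 caps the step size at η g / (1 - γ), while γ >= 1 lets it grow without bound even though the slope never changes.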

If your momentum term was greater than one, then the notebook would overcome the actual gradient. After a few steps, you wouldn't even look at the hill; you'd go "I've only gone east so far, so I'll just continue east" with your jumps getting longer and longer. This is not good.

In conclusion

A momentum term that is too high would lead you in the wrong direction (blow up and always go in the same direction), and/or make you oscillate around the global minimum (by making you jump too far).

Hope it helps :)

*: Strictly speaking, we're finding the "up" direction and go the opposite way. The "up" direction is the gradient.
