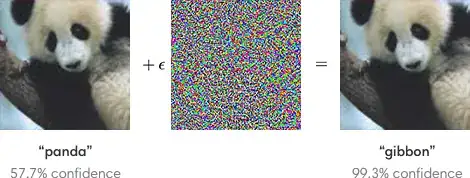

The problem of adversarial examples is known to be critical for neural networks. For example, an image classifier can be manipulated by additively superimposing a different low amplitude image to each of many training examples that looks like noise but is designed to produce specific misclassifications.

Since neural networks are applied to some safety-critical problems (e.g. self-driving cars), I have the following question

What tools are used to ensure safety-critical applications are resistant to the injection of adversarial examples at training time?

Laboratory research aimed at developing defensive security for neural networks exists. These are a few examples.

adversarial training (see e.g. A. Kurakin et al., ICLR 2017)

defensive distillation (see e.g. N. Papernot et al., SSP 2016)

MMSTV defence (Maudry et al., ICLR 2018).

However, do industrial-strength, production-ready defensive strategies and approaches exist? Are there known examples of applied adversarial-resistant networks for one or more specific types (e.g. for small perturbation limits)?

There are already (at least) two questions related to the problem of hacking and fooling of neural networks. The primary interest of this question, however, is whether any tools exist that can defend against some adversarial example attacks.