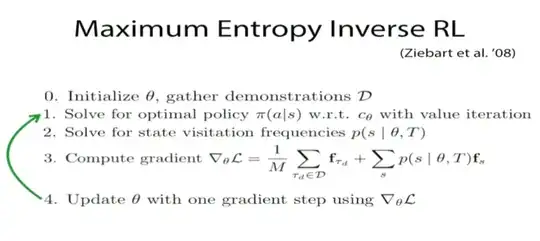

Here's the general algorithm of maximum entropy inverse reinforcement learning.

This uses a gradient descent algorithm. The point that I do not understand is there is only a single gradient value $\nabla_\theta \mathcal{L}$, and it is used to update a vector of parameters. To me, it does not make sense because it is updating all elements of a vector with the same value $\nabla_\theta \mathcal{L}$. Can you explain the logic behind updating a vector with a single gradient?