I'm training an auto-encoder network with the Adam optimizer (with `amsgrad=True`) and MSE loss for a single-channel audio source separation task. Whenever I decay the learning rate by a factor, the network loss jumps abruptly and then decreases until the next learning-rate decay.

I'm using PyTorch for the network implementation and training.

These are my experimental setups:

- Setup-1: NO learning rate decay, and using the same Adam optimizer for all epochs.
- Setup-2: NO learning rate decay, and creating a new Adam optimizer with the same initial values every epoch.
- Setup-3: 0.25 decay in learning rate every 25 epochs, and creating a new Adam optimizer every epoch.
- Setup-4: 0.25 decay in learning rate every 25 epochs, and NOT creating a new Adam optimizer every time, but rather using PyTorch's `MultiStepLR` and `ExponentialLR` decay schedulers every 25 epochs.
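To make the intended schedule in Setup-3 and Setup-4 explicit, here is a minimal sketch of the step decay in plain Python. The function name `step_decay_lr` and the base rate of `1e-3` are assumptions made for illustration; they are not taken from my actual training code.

```python
def step_decay_lr(epoch, base_lr, decay_rate=0.25, step_size=25):
    """Learning rate intended for `epoch` when the rate is multiplied by
    `decay_rate` once every `step_size` epochs."""
    return base_lr * decay_rate ** (epoch // step_size)


base_lr = 1e-3  # hypothetical starting learning rate
for epoch in (0, 24, 25, 50, 75):
    # The rate stays constant within each 25-epoch window and
    # drops by a factor of 4 at epochs 25, 50 and 75.
    print(epoch, step_decay_lr(epoch, base_lr))
```

With a factor of 0.25, three decays shrink the learning rate by a factor of 64 overall.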

I am getting very surprising results for setups #2, #3, and #4, and I cannot come up with an explanation for them. These are my results:

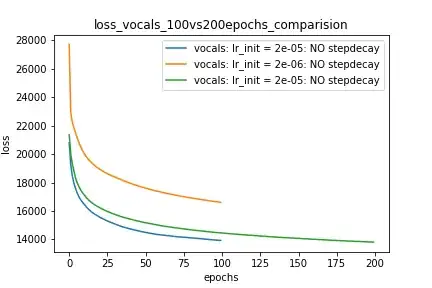

Setup-1 Results:

Here I'm NOT decaying the learning rate and I'm using the same Adam optimizer, so my results are as expected: my loss decreases with more epochs. Below is the loss plot for this setup.

Plot-1:

    optimizer = torch.optim.Adam(lr=m_lr, amsgrad=True, ...........)

    for epoch in range(num_epochs):
        running_loss = 0.0
        for i in range(num_train):
            train_input_tensor = ..........
            train_label_tensor = ..........
            optimizer.zero_grad()
            pred_label_tensor = model(train_input_tensor)
            loss = criterion(pred_label_tensor, train_label_tensor)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        loss_history[m_lr].append(running_loss/num_train)

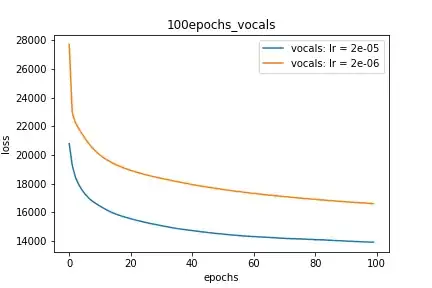

Setup-2 Results:

Here I'm NOT decaying the learning rate, but every epoch I'm creating a new Adam optimizer with the same initial parameters. The results here show similar behavior to Setup-1.

Because a new Adam optimizer is created at every epoch, the gradient statistics accumulated for each parameter should be lost, but this does not seem to affect the network's learning. Can anyone please help explain this?
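To make concrete what is and is not discarded when the optimizer is re-created, here is a minimal sketch. The tiny `nn.Linear` model, the random data, and the learning rate are stand-ins chosen for illustration, not my actual network or settings. It only inspects `optimizer.state`, where Adam keeps its per-parameter running estimates, and checks that the model weights themselves are untouched by the re-creation.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(4, 1)            # hypothetical stand-in model
criterion = torch.nn.MSELoss()
x, y = torch.randn(8, 4), torch.randn(8, 1)  # hypothetical data

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, amsgrad=True)
optimizer.zero_grad()
criterion(model(x), y).backward()
optimizer.step()

# After one step, Adam holds one state entry per parameter (weight and bias).
old_state_size = len(optimizer.state)
old_state_keys = sorted(optimizer.state[model.weight].keys())
weights_before = model.weight.detach().clone()

# Re-creating the optimizer starts with an empty state ...
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, amsgrad=True)
new_state_size = len(optimizer.state)

# ... while the model parameters keep their trained values.
weights_unchanged = torch.equal(weights_before, model.weight.detach())

print(old_state_size, new_state_size, weights_unchanged)
print(old_state_keys)
```

So what a fresh optimizer loses is Adam's running state (including the step count), not the weights learned so far.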

Plot-2:

    for epoch in range(num_epochs):
        optimizer = torch.optim.Adam(lr=m_lr, amsgrad=True, ...........)
        running_loss = 0.0
        for i in range(num_train):
            train_input_tensor = ..........
            train_label_tensor = ..........
            optimizer.zero_grad()
            pred_label_tensor = model(train_input_tensor)
            loss = criterion(pred_label_tensor, train_label_tensor)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        loss_history[m_lr].append(running_loss/num_train)

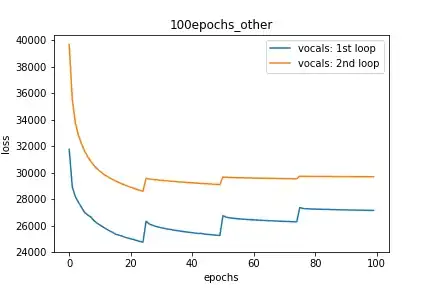

Setup-3 Results:

As can be seen from the results in the plot below, my loss jumps every time I decay the learning rate. This is weird behavior.

If it were happening because I'm creating a new Adam optimizer every epoch, then it should have happened in Setup #2 as well. And if it is happening because a new Adam optimizer is created with a new learning rate (alpha) every 25 epochs, then the results of Setup #4 below also contradict that explanation.
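For comparison, the learning rate of an existing optimizer can also be changed in place through `optimizer.param_groups`, without constructing a new optimizer. The following is a minimal sketch under assumptions: the stand-in `nn.Linear` model, the random input, and the base rate of `1e-3` are illustrative only and are not taken from my setup.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(4, 1)  # hypothetical stand-in model
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, amsgrad=True)

# Take one step so that Adam has some state to preserve.
optimizer.zero_grad()
model(torch.randn(8, 4)).pow(2).mean().backward()
optimizer.step()
state_before = len(optimizer.state)

# Decay the learning rate in place for every parameter group.
decay_rate = 0.25
for group in optimizer.param_groups:
    group["lr"] *= decay_rate

new_lr = optimizer.param_groups[0]["lr"]
state_after = len(optimizer.state)

# The rate changes, but the optimizer state entries are kept.
print(new_lr, state_before, state_after)
```

This separates the two effects being compared here: a change of learning rate on its own versus a change of learning rate combined with a reset of the optimizer state.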

Plot-3:

    decay_rate = 0.25

    for epoch in range(num_epochs):
        optimizer = torch.optim.Adam(lr=m_lr, amsgrad=True, ...........)
        if epoch % 25 == 0 and epoch != 0:
            lr *= decay_rate # decay the learning rate
        running_loss = 0.0
        for i in range(num_train):
            train_input_tensor = ..........
            train_label_tensor = ..........
            optimizer.zero_grad()
            pred_label_tensor = model(train_input_tensor)
            loss = criterion(pred_label_tensor, train_label_tensor)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        loss_history[m_lr].append(running_loss/num_train)

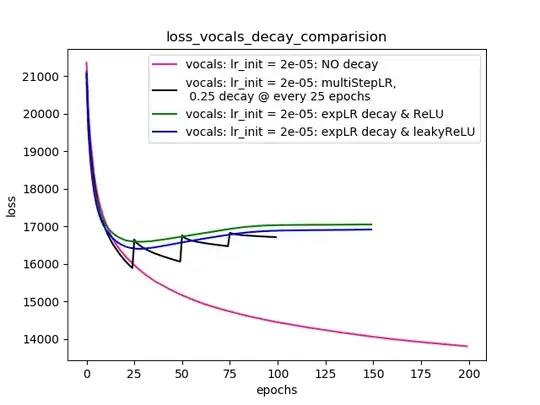

Setup-4 Results:

In this setup, I'm using PyTorch's learning-rate-decay scheduler (`MultiStepLR`), which decays the learning rate every 25 epochs by a factor of 0.25. Here also, the loss jumps every time the learning rate is decayed.
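To check which learning rate the optimizer actually uses at each epoch under `MultiStepLR`, something like the following minimal sketch could be used. The single dummy parameter, the toy loss, and the base rate of `1e-3` are assumptions for illustration, not my model or settings. The sketch calls `scheduler.step()` after `optimizer.step()`, which is the order the PyTorch documentation recommends since version 1.1.0.

```python
import torch

param = torch.nn.Parameter(torch.ones(1))  # hypothetical dummy parameter
optimizer = torch.optim.Adam([param], lr=1e-3, amsgrad=True)
scheduler = torch.optim.lr_scheduler.MultiStepLR(
    optimizer, milestones=[25, 50, 75], gamma=0.25
)

lr_per_epoch = []
for epoch in range(100):
    # Record the rate that this epoch's updates will use.
    lr_per_epoch.append(optimizer.param_groups[0]["lr"])

    optimizer.zero_grad()
    (param ** 2).sum().backward()  # toy loss, stands in for the real one
    optimizer.step()

    # Advance the schedule once per epoch, after the optimizer update.
    scheduler.step()

for epoch in (0, 24, 25, 50, 75):
    print(epoch, lr_per_epoch[epoch])
```

Logging the rate this way makes it possible to line up each jump in the loss curve with the exact epoch at which the rate changed.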

As suggested by @Dennis in the comments below, I tried both ReLU and LeakyReLU (with a negative slope of 1e-02) nonlinearities. The results behave similarly in both cases: the loss first decreases, then increases, and then saturates at a higher value than what I would achieve without learning rate decay.

Plot-4 shows the results.

Plot-4:

    scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer=optimizer, milestones=[25,50,75], gamma=0.25)

    scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer=optimizer, gamma=0.95)

    scheduler = ......... # defined above
    optimizer = torch.optim.Adam(lr=m_lr, amsgrad=True, ...........)

    for epoch in range(num_epochs):
        scheduler.step()
        running_loss = 0.0
        for i in range(num_train):
            train_input_tensor = ..........
            train_label_tensor = ..........
            optimizer.zero_grad()
            pred_label_tensor = model(train_input_tensor)
            loss = criterion(pred_label_tensor, train_label_tensor)
            loss.backward()
            optimizer.step()
            running_loss += loss.item()
        loss_history[m_lr].append(running_loss/num_train)

EDITS:

- As suggested in the comments and reply below, I've made changes to my code and retrained the model. I've added the code and plots for the same.
- I tried various `lr_scheduler` classes in PyTorch (`MultiStepLR`, `ExponentialLR`); plots for these are listed in Setup-4, as suggested by @Dennis in the comments below.
- I tried LeakyReLU, as suggested by @Dennis in the comments.

Any help would be appreciated. Thanks.