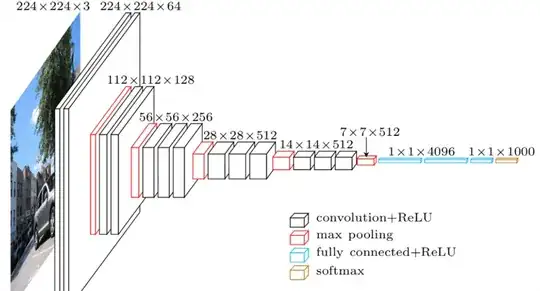

I found the image above, which shows how a CNN works.

But I don't really understand it. I think I do understand CNNs, but I find this diagram very confusing.

My simplified understanding:

- Features are selected.
- Convolution is carried out to see where these features fit (repeated with every feature, in every position).
- Pooling is used to shrink large images down (selecting the best-fitting feature).
- ReLU is used to remove negative values.
- Fully connected layers contribute weighted votes towards deciding which class the image belongs to.
- These votes are added together, giving a percentage chance for each class.
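The steps above can be sketched in plain Python on a toy example. This is a minimal, hypothetical illustration, not how the network in the diagram is implemented. It assumes a single-channel 6×6 image containing a vertical stripe, one hand-picked 3×3 vertical-line filter, and made-up weights for two classes. In a trained CNN the filter values and fully connected weights are learned rather than chosen by hand. The sketch applies ReLU before pooling, which is the more common order. With max pooling both orders give the same result, because ReLU is non-decreasing.

```python
import math

def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, which CNN layers call convolution:
    slide the kernel over every position and take the weighted sum."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    # Replace every negative value with zero.
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    # Keep the largest value in each non-overlapping size x size window.
    return [
        [
            max(fmap[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

def fully_connected(values, weights, biases):
    # Each class gets a weighted "vote" from every input value, plus a bias.
    return [sum(w * v for w, v in zip(ws, values)) + b for ws, b in zip(weights, biases)]

def softmax(scores):
    # Turn raw class scores into probabilities that sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical input: a vertical stripe in columns 2 and 3.
image = [[0, 0, 1, 1, 0, 0] for _ in range(6)]
# Hypothetical "feature": responds strongly to a vertical line.
kernel = [[-1, 2, -1] for _ in range(3)]

feature_map = conv2d(image, kernel)   # 4 x 4, contains negatives
activated = relu(feature_map)         # negatives -> 0
pooled = max_pool(activated)          # 2 x 2
flat = [v for row in pooled for v in row]
# Hypothetical weights for two classes: "line" and "no line".
scores = fully_connected(flat, weights=[[0.5] * 4, [-0.5] * 4], biases=[0.0, 0.0])
probs = softmax(scores)

print(pooled)  # [[3, 3], [3, 3]]
print(probs)
```

The printed probabilities strongly favour the first ("line") class, since the stripe matches the filter everywhere it appears.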

Points of this image that confuse me:

Why are we going from one image of $224 \times 224 \times 3$ to two images of $224 \times 224 \times 64$? Why does this halving continue? What is this meant to represent?
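For reference, the height and width produced by a convolution or pooling layer follow a standard formula, where $n_{\text{in}}$ is the input size, $k$ the kernel (window) size, $p$ the padding and $s$ the stride. The parameter values below are an assumption: they are the ones used by VGG-16 (3×3 convolutions with stride 1 and padding 1, and 2×2 max pooling with stride 2), which is a network that starts from a $224 \times 224 \times 3$ input. The depth of a convolution's output is not given by this formula; it equals the number of filters in that layer, because each filter spans all input channels and produces one 2-D map.

$$
n_{\text{out}} = \left\lfloor \frac{n_{\text{in}} + 2p - k}{s} \right\rfloor + 1
$$

$$
\text{conv: } \left\lfloor \frac{224 + 2 \cdot 1 - 3}{1} \right\rfloor + 1 = 224,
\qquad
\text{pool: } \left\lfloor \frac{224 + 2 \cdot 0 - 2}{2} \right\rfloor + 1 = 112
$$

Under these assumptions, a layer with 64 filters applied to a $224 \times 224 \times 3$ input gives $224 \times 224 \times 64$, and a following 2×2 pooling layer gives $112 \times 112 \times 64$.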

It continues on to $56 \times 56 \times 256$. Why do the first two numbers keep halving while the last number (here $256$) keeps doubling?
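A minimal sketch that traces the shapes layer by layer, assuming the diagram shows VGG-16. The block layout in `VGG16_BLOCKS` (how many 3×3 convolutions per block, how many filters each, and one 2×2 max pool after each block) is that assumption, not something read from the image. The helper names `conv_out`, `pool_out` and `trace_shapes` are hypothetical.

```python
def conv_out(n, kernel=3, stride=1, padding=1):
    # Output height/width of a convolution; with these defaults the size is unchanged.
    return (n + 2 * padding - kernel) // stride + 1

def pool_out(n, size=2, stride=2):
    # Output height/width of max pooling; 2x2 with stride 2 halves the size.
    return (n - size) // stride + 1

# Assumed VGG-16 layout: (number of conv layers, filters per conv) for each block.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

def trace_shapes(size=224, channels=3):
    shapes = [(size, size, channels)]
    for num_convs, filters in VGG16_BLOCKS:
        for _ in range(num_convs):
            size = conv_out(size)
            channels = filters  # depth = number of filters chosen for this layer
            shapes.append((size, size, channels))
        size = pool_out(size)   # pooling halves height and width, keeps depth
        shapes.append((size, size, channels))
    return shapes

for shape in trace_shapes():
    print(shape)
```

Under this assumed layout, height and width shrink only at the pooling layers (224, 112, 56, 28, 14, 7), while the depth changes only at the first convolution of each block, where the designers chose more filters (64, 128, 256, 512).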