Let's start by looking at:

$$\max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ G_{t:t+n} \mid S_t = s \right] - v_{\pi}(s) \Bigr\rvert.$$

We can rewrite this by plugging in the definition of $G_{t:t+n}$:

\begin{aligned}

& \max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ G_{t:t+n} \mid S_t = s \right] - v_{\pi}(s) \Bigr\rvert \\

%

=& \max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ R_{t + 1} + \gamma R_{t + 2} + \dots + \gamma^{n - 1} R_{t + n} + \gamma^n V_{t + n - 1}(S_{t + n}) \mid S_t = s \right] - v_{\pi}(s) \Bigr\rvert \\

%

=& \max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ R_{t:t+n} + \gamma^n V_{t + n - 1}(S_{t + n}) \mid S_t = s \right] - v_{\pi}(s) \Bigr\rvert,

\end{aligned}

where $R_{t:t+n} \doteq R_{t + 1} + \gamma R_{t + 2} + \dots + \gamma^{n - 1} R_{t + n}$.

If you go all the way back to page 58 of the book, you can see the definition of $v_{\pi}(s)$:

\begin{aligned}

v_{\pi}(s) &\doteq \mathbb{E}_{\pi} \left[ \sum_{k = 0}^{\infty} \gamma^k R_{t + k + 1} \mid S_t = s \right] \\

%

&= \mathbb{E}_{\pi} \left[ R_{t:t+n} + \gamma^n \sum_{k = 0}^{\infty} \gamma^k R_{t + n + k + 1} \mid S_t = s \right] \\

%

&= \mathbb{E}_{\pi} \left[ R_{t:t+n} \mid S_t = s \right] + \gamma^n \mathbb{E}_{\pi} \left[ \sum_{k = 0}^{\infty} \gamma^k R_{t + n + k + 1} \mid S_t = s \right]

\end{aligned}
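The split of $v_{\pi}(s)$ into the first $n$ discounted rewards plus a discounted tail can be sanity-checked numerically. The sketch below is only an illustration under stated assumptions: the 3-state transition matrix `P`, the expected rewards `r`, and the helper names `backup` and `n_step_expectation` are all made up here (they are not from the book), and the tail expectation is computed as the expected value of $v_{\pi}(S_{t + n})$, which relies on the Markov property.

```python
# Hypothetical 3-state Markov reward process induced by some fixed policy pi.
# P[s][s2] is the probability of moving from s to s2, and r[s] is the expected
# reward R_{t+1} given S_t = s. All numbers are made up for illustration.
P = [[0.5, 0.5, 0.0],
     [0.1, 0.6, 0.3],
     [0.2, 0.0, 0.8]]
r = [1.0, 0.0, -2.0]
gamma = 0.9
n = 3


def backup(values, P, r, gamma):
    """One expected step: r[s] + gamma * sum_s2 P[s][s2] * values[s2]."""
    return [r[s] + gamma * sum(p * v for p, v in zip(P[s], values))
            for s in range(len(r))]


def n_step_expectation(values, P, r, gamma, n):
    """E[R_{t:t+n} + gamma^n * values(S_{t+n}) | S_t = s] for every state s."""
    out = list(values)
    for _ in range(n):
        out = backup(out, P, r, gamma)
    return out


# Approximate v_pi by iterating the one-step backup until it has converged.
v_pi = [0.0] * len(r)
for _ in range(2000):
    v_pi = backup(v_pi, P, r, gamma)

# First n rewards plus the discounted tail should give back v_pi itself.
recomposed = n_step_expectation(v_pi, P, r, gamma, n)
max_gap = max(abs(a - b) for a, b in zip(recomposed, v_pi))
print(max_gap)  # expected to be zero up to floating-point error
```

Applying the one-step backup $n$ times is just a compact way of accumulating $R_{t:t+n}$ in expectation and then discounting whatever value sits at $S_{t + n}$ by $\gamma^n$.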

Using this, we can continue rewriting where we left off above:

\begin{aligned}

& \max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ R_{t:t+n} + \gamma^n V_{t + n - 1}(S_{t + n}) \mid S_t = s \right] - v_{\pi}(s) \Bigr\rvert \\

%

=& \max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ \gamma^n V_{t + n - 1}(S_{t + n}) \mid S_t = s \right] - \gamma^n \mathbb{E}_{\pi} \left[ \sum_{k = 0}^{\infty} \gamma^k R_{t + n + k + 1} \mid S_t = s \right] \Bigr\rvert \\

%

=& \gamma^n \max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ V_{t + n - 1}(S_{t + n}) - \sum_{k = 0}^{\infty} \gamma^k R_{t + n + k + 1} \mid S_t = s \right] \Bigr\rvert

\end{aligned}

The sum inside this expectation is the return from time $t + n$ onward. Given $S_{t + n}$, the Markov property and the definition of $v_{\pi}$ tell us that its expected value is $v_{\pi}(S_{t + n})$, so by the law of total expectation we can swap it out without changing the outer expectation:

$$\mathbb{E}_{\pi} \left[ V_{t + n - 1}(S_{t + n}) - \sum_{k = 0}^{\infty} \gamma^k R_{t + n + k + 1} \mid S_t = s \right] = \mathbb{E}_{\pi} \left[ V_{t + n - 1}(S_{t + n}) - v_{\pi}(S_{t + n}) \mid S_t = s \right].$$

It matters that we do this before taking absolute values: once the random return sits inside an absolute value, it can no longer be replaced by its expectation.

Because the absolute value function is convex, we can use Jensen's inequality to show that the absolute value of an expectation is less than or equal to the expectation of the corresponding absolute value:

$$\left| \mathbb{E} \left[ X \right] \right| \leq \mathbb{E} \left[ \left| X \right| \right].$$

This means that:

\begin{aligned}

\max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ G_{t:t+n} - v_{\pi}(s) \mid S_t = s \right] \Bigr\rvert &\leq \gamma^n \max_s \mathbb{E}_{\pi} \left[ \Bigl\lvert V_{t + n - 1}(S_{t + n}) - v_{\pi}(S_{t + n}) \Bigr\rvert \mid S_t = s \right].

\end{aligned}

Now, the important trick here is to see that:

$$\max_s \mathbb{E}_{\pi} \left[ \Bigl\lvert V_{t + n - 1}(S_{t + n}) - v_{\pi}(S_{t + n}) \Bigr\rvert \mid S_t = s \right] \leq \max_s \mathbb{E}_{\pi} \left[ \Bigl\lvert V_{t + n - 1}(S_{t}) - v_{\pi}(S_{t}) \Bigr\rvert \mid S_t = s \right]$$

I'm skipping the formal steps to show that this is the case to save space, but the intuition is that:

- The left-hand side of this inequality involves finding an $S_t = s$ such that some function of $S_{t + n}$ is maximized, whereas the right-hand side involves finding an $S_t = s$ such that exactly the same function of $S_{t}$ is maximized.

- In the left-hand side, selecting an $S_t = s$ implicitly induces a probability distribution over multiple possible states $S_{t + n}$, given by $S_t$, the environment's transition dynamics, and the policy $\pi$. Intuitively, this is more "restrictive" for the $\max$ operator: it does not have the "freedom" to directly select a single state $S_{t + n}$ such that the function of $S_{t + n}$ is maximized. The right-hand side is free to choose a single state $S_t = s$ that equals an "optimal" $S_{t + n}$ from the left-hand side, but it is also free to make even better choices which might never be reachable with certainty after $n$ steps on the left-hand side.

We can use this to rewrite the previous inequality we had (where we might be making the right-hand side a bit bigger than it was, but that's fine: it already was an upper bound anyway, so the inequality will still hold):

\begin{aligned}

\max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ G_{t:t+n} - v_{\pi}(s) \mid S_t = s \right] \Bigr\rvert &\leq \gamma^n \max_s \mathbb{E}_{\pi} \left[ \Bigl\lvert V_{t + n - 1}(S_{t}) - v_{\pi}(S_{t}) \Bigr\rvert \mid S_t = s \right] \\

%

&= \gamma^n \max_s \mathbb{E}_{\pi} \left[ \Bigl\lvert V_{t + n - 1}(S_{t}) - v_{\pi}(s) \Bigr\rvert \mid S_t = s \right].

\end{aligned}

After this rewriting we've got a hidden $\mathbb{E}_{\pi}$ "inside" another $\mathbb{E}_{\pi}$ (because the definition of $v_{\pi}(s)$ contains an $\mathbb{E}_{\pi}$), which I suppose is kind of ugly... but mathematically harmless, since $v_{\pi}(s)$ is just a fixed number for each state $s$.

The maximum of a random variable is an upper bound on the expectation of that random variable, so we can get rid of the expectation in the right-hand side (again potentially increasing the right-hand side, which again is still fine since it's already an upper bound anyway):

\begin{aligned}

\max_s \Bigl\lvert \mathbb{E}_{\pi} \left[ G_{t:t+n} - v_{\pi}(s) \mid S_t = s \right] \Bigr\rvert &\leq \gamma^n \max_s \Bigl\lvert V_{t + n - 1}(s) - v_{\pi}(s) \Bigr\rvert,

\end{aligned}

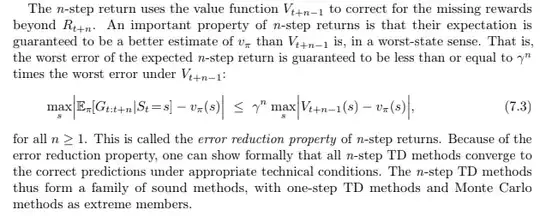

which we can finally rewrite as Equation (7.3) in the book by moving the subtraction of $v_{\pi}(s)$ outside of the expectation on the left-hand side of the inequality (which is fine because, once we condition on $S_t = s$, $v_{\pi}(s)$ is just a constant).
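If you'd like to see the final bound hold numerically, here is a minimal sketch that tests it on a small example. Everything in it is an assumption made for illustration: the 3-state transition matrix `P`, the expected rewards `r`, the randomly drawn estimates `V` (standing in for $V_{t + n - 1}$), and the helper names. It is a spot check on one made-up process, not a proof.

```python
import random

# Hypothetical 3-state Markov reward process induced by some fixed policy pi.
# P[s][s2] is a transition probability and r[s] is the expected reward
# R_{t+1} given S_t = s. All numbers are made up for illustration.
P = [[0.5, 0.5, 0.0],
     [0.1, 0.6, 0.3],
     [0.2, 0.0, 0.8]]
r = [1.0, 0.0, -2.0]
gamma = 0.9


def backup(values):
    """One expected step: r[s] + gamma * sum_s2 P[s][s2] * values[s2]."""
    return [r[s] + gamma * sum(p * v for p, v in zip(P[s], values))
            for s in range(len(r))]


def max_abs_diff(a, b):
    """Max over states of |a[s] - b[s]|."""
    return max(abs(x - y) for x, y in zip(a, b))


# Approximate v_pi by iterating the one-step backup until it has converged.
v_pi = [0.0] * len(r)
for _ in range(2000):
    v_pi = backup(v_pi)

random.seed(0)
worst_ratio = 0.0
for n in range(1, 6):
    for _trial in range(200):
        # Arbitrary (random) value estimate playing the role of V_{t+n-1}.
        V = [random.uniform(-20.0, 20.0) for _state in r]
        # E[G_{t:t+n} | S_t = s] for every s: n expected backups applied to V.
        expected_return = V
        for _step in range(n):
            expected_return = backup(expected_return)
        lhs = max_abs_diff(expected_return, v_pi)   # left side of (7.3)
        rhs = gamma ** n * max_abs_diff(V, v_pi)    # right side of (7.3)
        worst_ratio = max(worst_ratio, lhs / rhs)

# The bound says lhs <= rhs, so the ratio should never exceed 1
# (a small tolerance absorbs floating-point error).
bound_holds = worst_ratio <= 1.0 + 1e-9
print(bound_holds, worst_ratio)
```

The ratio `lhs / rhs` is tracked rather than just a boolean so you can see how much slack the bound leaves on this particular process.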