I have a hex-map, turn-based wargame featuring WWII carrier battles.

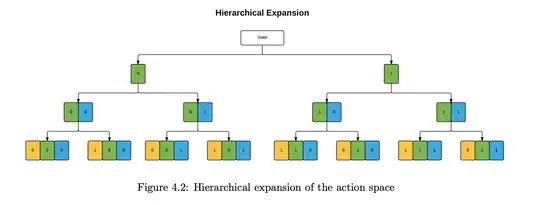

On a given turn, a player may choose to perform a large number of actions. Actions can be of many different types, and some actions may be performed independently of each other while others have dependencies. For example, a player may decide to move one or two naval units, then assign a mission to an air unit or not, then adjust some battle parameters or not, and then reorganize a naval task force or not.
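One way to make this structure concrete is to model a turn as an ordered list of typed actions, each carrying its own parameters, plus a table saying which action types must come before others. The Python sketch below assumes everything it names. The `ActionType` values, the parameter keys, and the single dependency rule (battle parameters can only be adjusted after an air mission has been assigned) are hypothetical. They are chosen only to illustrate independent versus dependent actions.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class ActionType(Enum):
    # Hypothetical action types mirroring the examples in the question.
    MOVE_NAVAL_UNIT = auto()
    ASSIGN_AIR_MISSION = auto()
    ADJUST_BATTLE_PARAMS = auto()
    REORGANIZE_TASK_FORCE = auto()


@dataclass
class Action:
    kind: ActionType
    params: dict = field(default_factory=dict)  # type-specific parameters


# Hypothetical dependency rule: an action type may only appear after all
# of its prerequisite types have already been performed this turn.
DEPENDS_ON = {
    ActionType.ADJUST_BATTLE_PARAMS: {ActionType.ASSIGN_AIR_MISSION},
}


def is_valid_turn(turn, depends_on):
    """Return True if every action's prerequisites occur earlier in the turn."""
    done = set()
    for action in turn:
        if depends_on.get(action.kind, set()) - done:
            return False  # some prerequisite type has not been performed yet
        done.add(action.kind)
    return True


turn = [
    Action(ActionType.MOVE_NAVAL_UNIT, {"unit": "unit_a", "destination": (4, 7)}),
    Action(ActionType.ASSIGN_AIR_MISSION, {"squadron": "sqn_1", "mission": "strike"}),
    Action(ActionType.ADJUST_BATTLE_PARAMS, {"aggressiveness": "high"}),
]
print(is_valid_turn(turn, DEPENDS_ON))      # True
print(is_valid_turn(turn[2:], DEPENDS_ON))  # False: no air mission assigned first
```

Independent actions (the naval move here) have no entry in `DEPENDS_ON` and can appear anywhere in the turn.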

Usually, board games allow players to perform only one action per turn (e.g., go or chess) or a few very similar actions (e.g., backgammon).

Here, by contrast:

- The player may select several actions per turn.

- The actions are of different types.

- Each action may have parameters that the player must set (e.g., strength, payload, destination).
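To see how quickly this grows, here is a minimal Python sketch of a parameterized action space, where each action type has its own parameter set. The action types, parameter names, and value ranges are hypothetical placeholders, not the game's actual rules. The number of distinct single actions is a sum of per-type products of parameter choices, and the number of possible ordered turns grows exponentially with the number of actions allowed per turn.

```python
import math
import random

# Hypothetical parameter spaces: each action type has its own parameters,
# each restricted to a small discrete set of illustrative values.
PARAM_SPACE = {
    "move_naval_unit": {
        "unit": ["unit_a", "unit_b"],
        "destination": list(range(10)),  # hypothetical hex ids
    },
    "assign_air_mission": {
        "squadron": ["sqn_1", "sqn_2", "sqn_3"],
        "payload": ["bombs", "torpedoes"],
        "strength": [25, 50, 75, 100],  # percent of the squadron committed
    },
    "adjust_battle_params": {
        "aggressiveness": ["low", "medium", "high"],
    },
}


def num_single_actions(space):
    """Count distinct (type, parameters) combinations across all action types."""
    return sum(math.prod(len(v) for v in params.values()) for params in space.values())


def num_turns(space, max_actions):
    """Count ordered sequences of 0..max_actions actions, ignoring legality."""
    n = num_single_actions(space)
    return sum(n ** k for k in range(max_actions + 1))


def sample_action(space, rng):
    """Pick an action type, then pick a value for each of its parameters."""
    kind = rng.choice(list(space))
    return kind, {name: rng.choice(values) for name, values in space[kind].items()}


print(num_single_actions(PARAM_SPACE))  # 47  (20 + 24 + 3)
print(num_turns(PARAM_SPACE, 3))        # 106080
print(sample_action(PARAM_SPACE, random.Random(0)))
```

Even with these tiny placeholder ranges, allowing up to three actions per turn already gives over 100,000 ordered turns before any legality or dependency checks are applied.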

How could I approach this problem with reinforcement learning? How would I specify a model or train it effectively to play such a game?

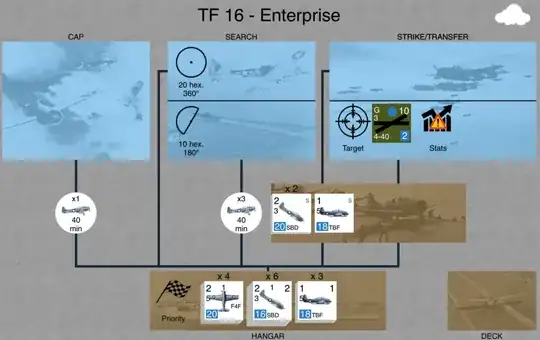

Here is a screenshot of the game.

Here's another.