I'm trying to understand how the dimensions of the feature maps produced by the convolution are determined in a ConvNet.

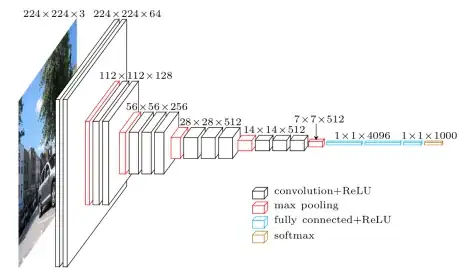

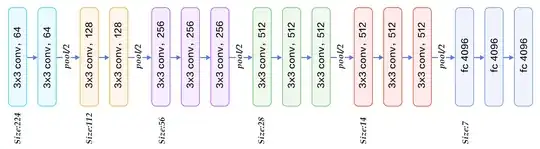

Let's take, for instance, the VGG-16 architecture. How do I get from 224x224x3 to 112x112x64? (I understand the 112; it's the last dimension, the 64, that I don't get.)
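For the spatial part, here is a minimal sketch of the usual output-size formula. It assumes VGG-16's 3x3 convolutions with padding 1 and stride 1, followed by 2x2 max pooling with stride 2; the helper name `out_size` is made up for illustration.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (floor convention)."""
    return (n + 2 * padding - kernel) // stride + 1


# 3x3 convolution, padding 1, stride 1: the spatial size is preserved.
after_conv = out_size(224, kernel=3, stride=1, padding=1)
# 2x2 max pooling, stride 2: the spatial size is halved.
after_pool = out_size(after_conv, kernel=2, stride=2)

print(after_conv, after_pool)  # 224 112
```

Under these assumptions the convolution leaves 224 unchanged and the pooling step alone accounts for the 112; neither touches the channel count.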

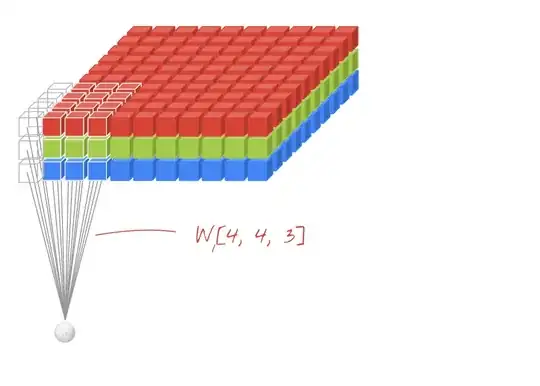

I thought a CNN applied filters/convolutions to each input channel separately (for instance, 10 different filters to the red channel, 10 to the green: are the filters the same across channels?), but, obviously, 64 is not divisible by 3.

And then, how do we get from 64 to 128? Do we apply new filters to the outputs of the previous filters? (In that case, only 2 filters would be applied to each previous output.) Or is it something different?
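To make the channel bookkeeping concrete, here is a rough sketch under the standard convolution convention, in which each filter spans all input channels and produces exactly one output channel. The function names, the tiny 4x4 input, and the random weights are illustrative assumptions, not VGG-16's actual code or weights, and the sketch skips pooling and the repeated convolutions within each VGG block.

```python
import random


def conv2d(x, filters, pad=1):
    """Naive stride-1 convolution on nested lists.

    x[i][j][c]           : input of shape H x W x C_in
    filters[f][di][dj][c]: C_out filters, each of shape k x k x C_in
    Returns an H_out x W_out x C_out nested list.
    """
    h, w, c_in = len(x), len(x[0]), len(x[0][0])
    k = len(filters[0])

    def px(i, j, c):
        # Zero padding outside the image borders.
        return x[i][j][c] if 0 <= i < h and 0 <= j < w else 0.0

    h_out = h + 2 * pad - k + 1
    w_out = w + 2 * pad - k + 1
    # Each filter sums over the k x k window AND over every input channel,
    # so one filter yields one number per position, i.e. one output channel.
    return [[[sum(f[di][dj][c] * px(i - pad + di, j - pad + dj, c)
                  for di in range(k) for dj in range(k) for c in range(c_in))
              for f in filters]
             for j in range(w_out)]
            for i in range(h_out)]


def random_filters(c_out, k, c_in):
    """c_out random filters of shape k x k x c_in (hypothetical weights)."""
    return [[[[random.gauss(0, 1) for _ in range(c_in)]
              for _ in range(k)]
             for _ in range(k)]
            for _ in range(c_out)]


def shape(t):
    return (len(t), len(t[0]), len(t[0][0]))


random.seed(0)
x = [[[random.random() for _ in range(3)] for _ in range(4)] for _ in range(4)]

y1 = conv2d(x, random_filters(64, 3, 3))     # 64 filters of shape 3x3x3
y2 = conv2d(y1, random_filters(128, 3, 64))  # 128 filters of shape 3x3x64

print(shape(y1), shape(y2))  # (4, 4, 64) (4, 4, 128)
```

Under this convention the output depth equals the number of filters in the layer, so it need not be a multiple of the input depth: 64 comes from 64 filters of shape 3x3x3, and 128 from 128 filters of shape 3x3x64.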