Deleting /var/log is probably a bad idea, but deleting the individual logfiles should be OK.
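If you only want to free space, one conservative option is to delete just the rotated copies and leave alone the live logs that programs are still writing to. A file a daemon still holds open keeps using disk space until the daemon closes it, so deleting a live log may not free anything right away. Below is a minimal sketch, assuming GNU find and logrotate's usual naming (`syslog.1`, `syslog.2.gz`, `dpkg.log.12.gz`). `delete_rotated_logs` is a hypothetical helper, and it takes the directory as an argument so you can try it on a scratch copy first:

```shell
#!/usr/bin/env bash
# Sketch: delete rotated/compressed logs (syslog.1, syslog.2.gz, dpkg.log.12.gz, ...)
# while leaving live logs (syslog, kern.log, Xorg.0.log, ...) in place.
# delete_rotated_logs is a hypothetical helper; it assumes GNU find and the
# usual logrotate naming scheme (numeric suffix, optionally followed by .gz).
delete_rotated_logs() {
  if [ -z "$1" ]; then
    echo "usage: delete_rotated_logs DIR" >&2
    return 2
  fi
  local log_root=$1
  # -regex matches the whole path, which must end in ".<digits>" or ".<digits>.gz".
  # -print lists each file just before -delete removes it.
  find "$log_root" -regextype posix-extended -type f \
    -regex '.*\.[0-9]+(\.gz)?' -print -delete
}
```

From a root shell, after sourcing the file, `delete_rotated_logs /var/log` prints and removes the rotated files only.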

On my laptop, which has a smallish SSD, I set up /var/log (and /tmp and /var/tmp) as tmpfs mount points by adding the following lines to /etc/fstab:

    temp /tmp tmpfs rw,mode=1777 0 0
    vartmp /var/tmp tmpfs rw,mode=1777 0 0
    varlog /var/log tmpfs rw,mode=1777 0 0

This means that nothing in those directories survives a reboot. As far as I can tell, this setup works just fine. Of course, I lose the ability to look at old logs to diagnose any problems that might occur, but I consider that a fair tradeoff for the reduced disk usage.

The only problem I've had is that some programs (most notably APT) want to write their logs into subdirectories of /var/log and aren't smart enough to create those directories if they don't exist. Adding the line `mkdir /var/log/apt` to /etc/rc.local fixed that particular problem for me; depending on what software you have installed, you may need to create other directories too.
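If the list of directories grows, the `mkdir` line can be turned into a small loop. This is a sketch only: `create_log_dirs` is a hypothetical helper, `apt` is the only directory name taken from the answer, and any others are whatever your own software turns out to need. `mkdir -p` is used so the call is harmless if a directory already exists:

```shell
#!/bin/sh
# Sketch: recreate log subdirectories that programs expect but won't create
# themselves once /var/log starts out empty on every boot.
# create_log_dirs is a hypothetical helper; only "apt" comes from the answer.
create_log_dirs() {
  log_root=$1
  shift
  for dir in "$@"; do
    # -p: no error if the directory already exists; parents are created as needed.
    mkdir -p "$log_root/$dir" || return 1
  done
}

# In /etc/rc.local this would be called before the final "exit 0", e.g.:
#   create_log_dirs /var/log apt
```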

(Another possibility would be to create a simple tar archive containing just the directories, and to untar it into /var/log at startup to create all the needed directories and set their permissions all at once.)
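The tar variant could look roughly like this. It is a sketch assuming GNU tar and GNU find, and `save_log_skeleton` / `restore_log_skeleton` are hypothetical names. `find` lists only directories, and `--no-recursion` keeps tar from also picking up the log files inside them, so the archive holds just the tree with its modes and owners:

```shell
#!/usr/bin/env bash
# Sketch of the "tar archive of just the directories" idea (GNU tar + find).
# save_log_skeleton and restore_log_skeleton are hypothetical helper names.

# Run once, while the on-disk tree still holds all the log subdirectories.
# find lists every directory (including "." itself); --no-recursion stops tar
# from descending into them, so no log files end up in the archive.
# ARCHIVE should be an absolute path, because the subshell cd's into SRC.
save_log_skeleton() {
  local src=$1 archive=$2
  ( cd -- "$src" && find . -type d -print0 |
      tar --null --no-recursion -cf "$archive" -T - )
}

# Run at startup (e.g. from /etc/rc.local) once the empty tmpfs is mounted.
# -p restores the stored permission bits; owners are restored when run as root.
restore_log_skeleton() {
  local archive=$1 dest=$2
  tar -xpf "$archive" -C "$dest"
}
```

For example, `save_log_skeleton /var/log /var/local/varlog-skel.tar` once, then `restore_log_skeleton /var/local/varlog-skel.tar /var/log` at boot. The archive path here is only an illustration; it just has to live outside /var/log, since the tmpfs starts empty.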

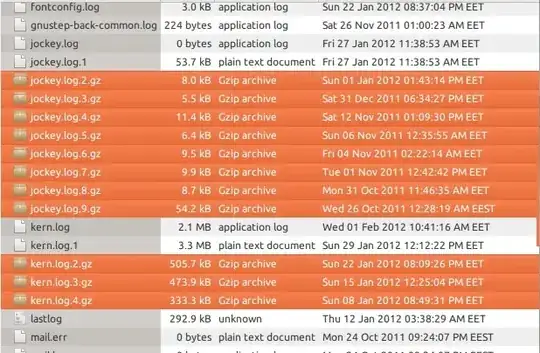

`find /var/log -type f -name "*.gz" -delete`, I removed the compressed files and only freed around 1 GB of space. Isn't 50 GB enough for the `/` dir (with the rest of my disk for `/home`)? – Muhammad Gelbana Feb 02 '14 at 06:56

`cat /var/log/kern.log` or `nano /var/log/kern.log` (in the GUI, run something like `gedit /var/log/kern.log` or `mousepad /var/log/kern.log`) and check what the problem may be. Once you figure out what's wrong, you can run `sudo rm /var/log/kern.log ; sudo telinit 6` to delete that (big) file and restart the operating system. – Yuri Sucupira Mar 22 '17 at 04:35

`messages` (7.7 GB), `user.log` (7.7 GB), `syslog` (4.1 GB) and `syslog.1` (3.5 GB). Those four files add up to 23 GB. Is there any way to remove them, or at least reduce their size? – Rodrigo Sep 11 '17 at 18:39

`cat /var/log/kern.log`) the whole log file – Ismail May 28 '18 at 07:44

`cat` on a big file is going to take a lot of time, but if that file is the only source of information you have about the issue affecting your OS, it might be the only way. Anyway, if one prefers to delete the log file except for its last N lines, one can run a command like `tail -N /var/log/kern.log | sudo tee /var/log/kernel.log`. If, e.g., one wants to keep only the last 1000 lines, just run `tail -1000 /var/log/kern.log | sudo tee /var/log/kernel.log` and the kernel log will be downsized to a file containing only its last 1000 lines. – Yuri Sucupira May 28 '18 at 12:55

`tail` is to preserve the `kern.log` file until the user figures out what's wrong with the system. Such a user may `grep` through the last N lines of `kern.log` with a command such as `tail -N /var/log/kern.log | grep -i word`, where N is the number of trailing lines of `kern.log` to search and `word` is a word the user suspects (s)he may find in those lines about the issue affecting the system. Last but not least, `tail -N /var/log/kern.log | sudo tee /var/log/analysis.log` will create `analysis.log`, which contains only the last N lines of `kern.log`. – Yuri Sucupira May 28 '18 at 13:01

`tail` as I explained above), a good "everyday use" app for cleaning the system is Bleachbit (check https://www.bleachbit.org), which you can install with `sudo apt-get install bleachbit -y`. – Yuri Sucupira May 28 '18 at 13:06

`cat` isn't the only simplified tool; there are multiple ways of doing this. It was just a suggestion that you shouldn't recommend people use `cat`; use `less` instead. Yes, for a big file it's smarter to use `tail` than `cat`, since `cat` outputs everything and only the last x lines (depending on your terminal settings) will be visible. – Ismail May 29 '18 at 08:30

`less` (and `more`) in some contexts, but because the OP / question refers to a massively big log file, I would never use `less`: in such a context, using `less` would take forever to find anything relevant inside such a massive log file. Anyway, you're right about `tail`, it is smarter than using `cat`. ^..^ – Yuri Sucupira May 30 '18 at 00:35
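The `tail ... | sudo tee /var/log/kernel.log` commands in the thread write a separate file, so `kern.log` itself stays as large as before. If the goal is to actually shrink a log such as `syslog` or `kern.log` to its last N lines, a minimal sketch is to copy the tail to a temporary file and then write it back over the original. `keep_last_lines` is a hypothetical helper name. Writing back with `cat >` refills the same file, so its owner and mode are kept. Two caveats: anything logged between the `tail` and the rewrite is lost, and a daemon that does not open its log in append mode may leave a gap at its old write position.

```shell
#!/usr/bin/env bash
# Sketch: shrink a log in place to its last N lines.
# keep_last_lines is a hypothetical helper name.
keep_last_lines() {
  local n=$1 file=$2 tmp status
  tmp=$(mktemp) || return 1
  # Copy the tail out first; the log is only overwritten if that worked.
  # "cat >" truncates and refills the same file, keeping its owner and mode.
  tail -n "$n" -- "$file" > "$tmp" && cat -- "$tmp" > "$file"
  status=$?
  rm -f -- "$tmp"
  return "$status"
}
```

For example, from a root shell after sourcing the file: `keep_last_lines 1000 /var/log/kern.log`.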