I need to delete 280 thousand photos from a folder, but the folder also contains some videos that I want to keep.

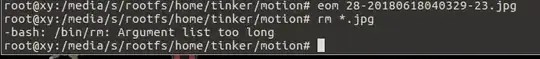

In the folder, I ran the command `# rm *.jpg`, but I got "Argument list too long". When I narrow the pattern so that it matches only some of the photos, it works on the smaller set, like this: `# rm 104-*.jpg`.

How can I efficiently delete all the JPEG files in a directory without getting the message "Argument list too long"?

`# rm -f *.jpg` gives the same message.

Opening the folder in Caja uses too much memory and crashes. I am using Ubuntu MATE.
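
For context, the message appears because the shell expands `*.jpg` into one argument per matching file before `rm` even starts, and 280 thousand names exceed the kernel's limit on argument list size. One possible way around it, sketched here assuming GNU `find` (the version shipped with Ubuntu), is to let `find` match the names itself so that no long argument list is ever built. The function name `delete_jpgs` is hypothetical and only there for illustration:

```shell
# Hypothetical helper; the name delete_jpgs is made up for this sketch.
delete_jpgs() {
    # find matches file names itself, so the shell never expands *.jpg.
    # -maxdepth 1 stays in the given folder and does not touch subfolders.
    # -type f limits the match to regular files.
    # -name '*.jpg' is case-sensitive, like the original rm *.jpg.
    # -maxdepth and -delete are GNU find extensions (assumed available).
    find "${1:-.}" -maxdepth 1 -type f -name '*.jpg' -delete
}

# Preview a few of the files that would be removed:
#   find . -maxdepth 1 -type f -name '*.jpg' | head
#
# Then delete them from the current folder:
#   delete_jpgs .
```

Because only names ending in `.jpg` are matched, the videos should be left alone, but running the preview line first is a cheap way to confirm that before deleting anything.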