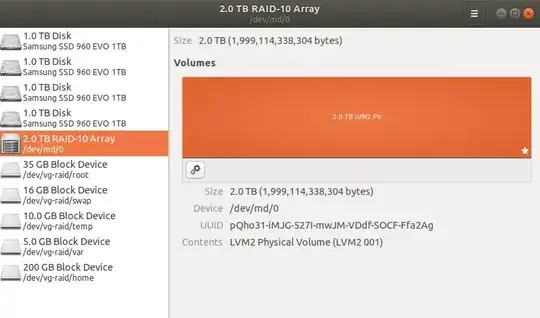

I've been struggling for hours to mount my RAID partition. I'm using Ubuntu 18.04 and have created a 2.0 TB RAID 10 array (using 4 × 1.0 TB SSDs), as shown here.

I don't have deep technical knowledge, but why can't I access the remaining space?

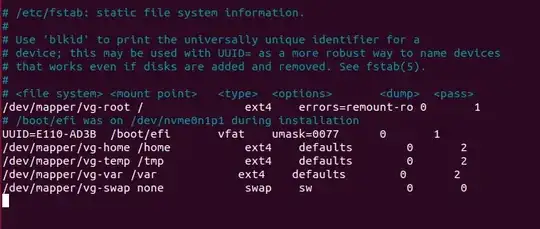

If I run `sudo lvscan`, I get:

ACTIVE '/dev/vg/swap' [<14.90 GiB] inherit

ACTIVE '/dev/vg/root' [32.59 GiB] inherit

ACTIVE '/dev/vg/temp' [9.31 GiB] inherit

ACTIVE '/dev/vg/var' [<4.66 GiB] inherit

ACTIVE '/dev/vg/home' [186.26 GiB] inherit

Further, `df -h` shows:

Filesystem Size Used Avail Use% Mounted on

udev 16G 0 16G 0% /dev

tmpfs 3.2G 2.3M 3.2G 1% /run

/dev/mapper/vg-root 32G 5.1G 26G 17% /

tmpfs 16G 252M 16G 2% /dev/shm

tmpfs 5.0M 4.0K 5.0M 1% /run/lock

tmpfs 16G 0 16G 0% /sys/fs/cgroup

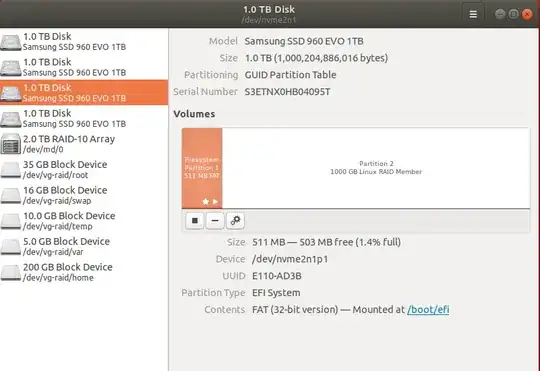

/dev/nvme2n1p1 487M 6.1M 480M 2% /boot/efi

/dev/mapper/vg-home 183G 1.6G 172G 1% /home

/dev/mapper/vg-var 4.6G 1.9G 2.5G 43% /var

/dev/mapper/vg-temp 9.2G 96M 8.6G 2% /tmp

tmpfs 3.2G 88K 3.2G 1% /run/user/1000

/dev/loop0 54M 54M 0 100% /snap/core18/719

/dev/loop1 91M 91M 0 100% /snap/core/6405

/dev/loop2 35M 35M 0 100% /snap/gtk-common-themes/1122

/dev/loop3 170M 170M 0 100% /snap/gimp/113

/dev/loop4 147M 147M 0 100% /snap/chromium/595

And `sudo vgscan` shows:

Reading volume groups from cache.

Found volume group "vg" using metadata type lvm2

Running `sudo pvscan`, I see the following, which is exactly the space I would like to access:

PV /dev/md0 VG vg lvm2 [<1.82 TiB / <1.58 TiB free]

Total: 1 [<1.82 TiB] / in use: 1 [<1.82 TiB] / in no VG: 0 [0 ]
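Adding up the logical volume sizes from the `lvscan` output above shows where the usable space ends: the five volumes total roughly 248 GiB, which is the gap between the 1.82 TiB physical volume and the 1.58 TiB that `pvscan` reports as free. The sketch below simply hard-codes the figures from this post (dropping the `<` rounding markers, so the result is approximate); on a live system, `sudo vgs` or `sudo lvs` would report the same information directly.

```shell
#!/bin/sh
# Approximate space check using the sizes copied from the lvscan and pvscan
# output in this post. The "<" rounding markers are dropped, so the
# numbers are approximations, not exact LVM accounting.

# Total size of the five logical volumes (swap, root, temp, var, home), in GiB.
sum_lv_gib() {
  printf '%s\n' 14.90 32.59 9.31 4.66 186.26 |
    awk '{ total += $1 } END { printf "%.2f\n", total }'
}

# Space left unallocated in the 1.82 TiB physical volume, in TiB
# (1 TiB = 1024 GiB).
unallocated_tib() {
  awk -v used="$(sum_lv_gib)" 'BEGIN { printf "%.2f\n", 1.82 - used / 1024 }'
}

echo "Allocated to logical volumes: $(sum_lv_gib) GiB"
echo "Still unallocated in vg:      $(unallocated_tib) TiB"
```

The result lines up with `pvscan`: about 1.58 TiB of the volume group has simply not been assigned to any logical volume yet.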

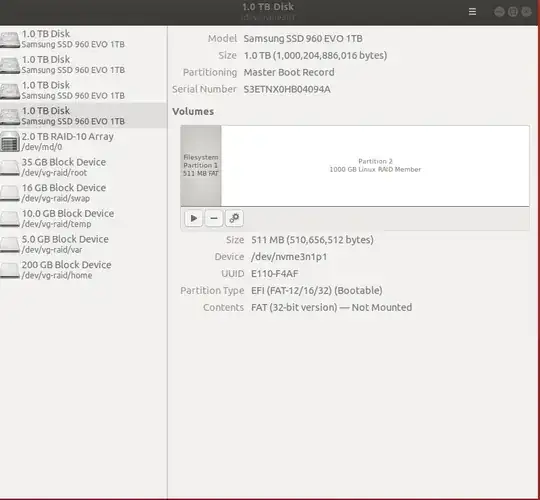

Any ideas what I have done wrong here? It appears I only have access to just under 250 GB of storage. Here is how the individual drives have been partitioned:

I've just noticed that only one SSD has a partition mounted at /boot/efi, while the others are partitioned like the image directly above.

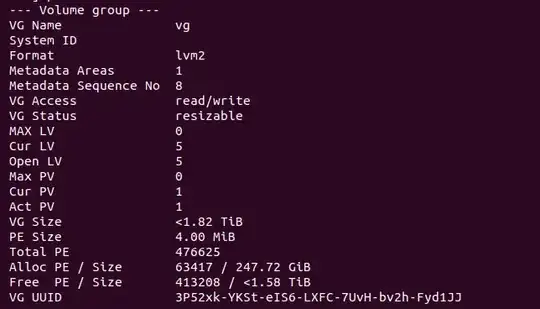

`/dev/md0` under control of LVM, you wouldn't mount the RAID directly but rather manage the available space through LVM and mount the resulting logical volumes, as is already done with `/`, `/home`, `/tmp`, etc. Using `vgdisplay` should reveal how much space is left in the volume group. Depending on what you actually want to achieve, you can then create a new logical volume and mount it, or grow one or more of the existing ones. – Thomas Feb 16 '19 at 13:24

`sudo vgdisplay`. – Michael Hampton Feb 16 '19 at 15:15
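A minimal sketch of the two options from the comment above, for the volume group `vg` seen in the `vgscan` output. Everything beyond `vg` and `/dev/vg/home` is a hypothetical placeholder: the new volume name `data`, the mount point `/mnt/data`, the sizes `500G` and `+200G`, and the choice of ext4 for the new filesystem. By default the script only prints the commands; setting `DRY_RUN=0` actually runs them.

```shell
#!/bin/sh
# Sketch of managing free space in volume group "vg" through LVM.
# HYPOTHETICAL values (adjust before use): LV name "data", mount point
# /mnt/data, sizes 500G / +200G, ext4 filesystem for the new volume.
# Commands are only printed unless DRY_RUN=0 is set.

run() {
  if [ "${DRY_RUN:-1}" = 0 ]; then
    sudo "$@"
  else
    echo "+ sudo $*"
  fi
}

# Show the volume group, including its unallocated space ("Free PE / Size").
show_free() {
  run vgdisplay vg
}

# Option 1: create a new logical volume, format it and mount it.
create_data_lv() {
  run lvcreate -L 500G -n data vg
  run mkfs.ext4 /dev/vg/data
  run mkdir -p /mnt/data
  run mount /dev/vg/data /mnt/data
}

# Option 2: grow the existing /home volume and its filesystem in one step.
grow_home() {
  run lvextend --resizefs -L +200G /dev/vg/home
}

case "${1:-}" in
  create) create_data_lv ;;
  grow)   grow_home ;;
  *)      show_free ;;
esac
```

Passing `create` or `grow` as the first argument prints the commands for that option; prefixing the call with `DRY_RUN=0` executes them. A newly created volume would also need an `/etc/fstab` entry to be mounted automatically at boot.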