On my server (Ubuntu 18.04 LTS) I am running a KVM virtual machine which had been running fine for over a year, but recently (probably because of some update) the virtual machine loses connectivity to the network whenever the host is rebooted. I somehow managed to restore connectivity the last two times it happened, but this time I just can't get it to work anymore.

I have read a lot of tutorials and other webpages, and it feels like I have tried everything more than once, but, obviously, I must be missing something. There are just too many variables involved at the same time, and many of them probably influence each other. So with this question, I'd like to find the best troubleshooting strategy that will allow me (and others) to effectively narrow down the source of connectivity issues as far as possible. More specifically, I'm talking about connectivity issues on KVM virtual machines that are connected via a bridge on Ubuntu 18.04.

I realize that this question has become extremely long, so let me clarify that you can answer the question without having read further than here.

Under the headings below, I mention my main areas of uncertainty when troubleshooting network issues, but there is no need to discuss these in any detail in the answers. Take them as possible starting points.

If you prefer to take the config of a specific machine as your point of departure, scroll down to the bottom where I provide such details (under the My Example heading).

netplan

One problem with troubleshooting this on 18.04 is that Ubuntu switched to netplan, which renders a lot of the advice currently available online obsolete.

The switch to netplan is also a source of confusion in itself. From what I understand, using netplan means that all network configuration is done in /etc/netplan/*.yaml and no longer in /etc/network/interfaces. Yet when I comment out all content in /etc/network/interfaces, it seems to get written back somehow (possibly by me, via Virtual Machine Manager on the GNOME desktop).

It looks like I'm not the only one frustrated with netplan and some recommend switching back to ifupdown, but in order to limit the scope of this question, let's stay within netplan and try to fix things without switching back.
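One concrete first step is to list every netplan file that could be in play, not just the files in /etc/netplan. As far as I know from netplan(5), netplan reads *.yaml from /lib/netplan, /etc/netplan and /run/netplan. A file in /run/netplan shadows one of the same name in /etc/netplan, which in turn shadows /lib/netplan. The function below is a minimal sketch under that assumption. The optional ROOT argument is hypothetical and exists only so it can be pointed at a scratch directory.

```shell
#!/usr/bin/env bash
# Sketch (assumption: netplan reads /lib, /etc and /run/netplan/*.yaml).
# Prints every netplan YAML file found under ROOT (default "/").
netplan_files() {
  local root="${1:-/}" dir f
  for dir in lib/netplan etc/netplan run/netplan; do
    for f in "${root%/}/$dir"/*.yaml; do
      # An unmatched glob stays literal, so skip anything that does not exist.
      [ -e "$f" ] && printf '%s\n' "$f"
    done
  done
  return 0
}
```

On the host, run `netplan_files` first and then `sudo netplan --debug generate`. This shows which files netplan picked up and what it generated from them. As far as I know, networkd output lands in /run/systemd/network/ and NetworkManager output in /run/NetworkManager/system-connections/.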

NetworkManager vs systemd-networkd

Another difficulty is that there is at least one relevant difference between Ubuntu 18.04 Server and Ubuntu 18.04 Desktop: Server uses systemd-networkd and Desktop uses NetworkManager, which entails different troubleshooting paths. To make things worse, what if you originally installed the server edition but later added the GNOME desktop? (I don't recall what I did, but chances are that is what happened: my /etc/netplan/01-netcfg.yaml originally said renderer: networkd, while NetworkManager also seems to be running by default.)
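To see which daemon actually claims each link on a machine where both might be running, one option is to ask systemd-networkd for its view. Below is a sketch that parses `networkctl list --no-legend`. It assumes the column order IDX LINK TYPE OPERATIONAL SETUP, which is what I would expect from the systemd shipped with 18.04. Check the header of a plain `networkctl list` on your own system before relying on it.

```shell
#!/usr/bin/env bash
# Sketch: read `networkctl list --no-legend` on stdin and print
# "<link> <setup-state>" pairs. Assumes columns IDX LINK TYPE OPERATIONAL SETUP.
networkd_setup_states() {
  awk 'NF >= 5 { print $2, $5 }'
}

# Print only the links systemd-networkd reports as "unmanaged",
# i.e. links it is not configuring itself.
networkd_unmanaged_links() {
  networkd_setup_states | awk '$2 == "unmanaged" { print $1 }'
}
```

For example, compare `networkctl list --no-legend | networkd_unmanaged_links` on the host with `nmcli device status`. With renderer: NetworkManager in netplan, I would expect networkd to list br0 and enp0s31f6 as unmanaged. A link that networkd reports as configured while nmcli also shows it as connected would suggest both tools are configuring it.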

Fix host or vm?

My third area of uncertainty is whether I should be fixing things on the host or on the virtual machine (or when a change on the host also requires a change on the guest). So far I have not paid much attention to the vm, given that it was working fine and I never updated it (except for automatic Ubuntu security updates). But the last time I managed to fix the connectivity problem, I did so by duplicating its hard drive and creating a new vm with it (identical, as far as I could tell). I guess this confirms that the configuration inside the vm was fine (since the vm's hardware is configured on the host), but it also told me that I probably need to pay more attention to the vm's hardware configuration.

Reboot needed to apply changes?

When trying out different fixes, I am also often unsure whether a change takes effect immediately or only after a reboot.

Conflicting UIs?

Finally, I realized that it may matter through which UI I make certain changes because they may be writing these changes to different places. I currently have the following interfaces for configuring my vm:

- command line (mostly used)

- Virtual Machine Manager (GUI)

- Wok / Kimchi (web interface)

- I also have Webmin with Cloudmin running, but my vm is not showing up there, so I'm not currently using it.
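The concern is that each UI may write its changes somewhere different. One way to find out empirically is a marker-file check:

1. Create a marker file.
2. Make exactly one change in one UI.
3. List the configuration files modified after the marker.

Below is a minimal sketch using find's -newer test. The directories you pass in are up to you. The ones in the usage example are an assumption about where these tools keep their configuration.

```shell
#!/usr/bin/env bash
# Sketch: list regular files under the given directories whose modification
# time is newer than MARKER. Usage: changed_since MARKER DIR...
changed_since() {
  local marker="$1"
  shift
  # Missing directories are ignored; the output is sorted for easy diffing.
  find "$@" -type f -newer "$marker" 2>/dev/null | sort
}
```

For example, as root:

1. Run `touch /tmp/net-marker`.
2. Change one setting in Virtual Machine Manager.
3. Run `changed_since /tmp/net-marker /etc/netplan /etc/network /etc/NetworkManager /etc/libvirt`.

Repeating this for each UI shows which of them touch which files.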

My example

So, although my idea here is to find a somewhat generic troubleshooting strategy, I suppose it is always a good idea to start from a concrete example. Here are some details about my current setup (I will add more if requested in the comments):

This is my current /etc/netplan/01-netcfg.yaml (and I have no other yaml files in that directory):

```yaml
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    enp0s31f6:
      dhcp4: no
  bridges:
    br0:
      interfaces: [enp0s31f6]
      dhcp4: yes
      dhcp6: yes
```

I'm only using NetworkManager because I had tried so hard with systemd-networkd without success that I thought I'd give NetworkManager a chance (though my hunch is that I should stick with systemd-networkd). Accordingly, I set managed=true in my /etc/NetworkManager/NetworkManager.conf, which now looks like this:

```ini
[main]
plugins=ifupdown,keyfile

[ifupdown]
managed=true

[device]
wifi.scan-rand-mac-address=no
```
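One detail in this file may matter. According to NetworkManager.conf(5), when the ifupdown plugin is enabled, managed=true tells NetworkManager to manage the interfaces listed in /etc/network/interfaces. With managed=false it ignores them. If that applies here, NetworkManager would pick up the br0 and br-kvm stanzas in /etc/network/interfaces (shown further down) in addition to the br0 defined in netplan.

Below is a small awk-based sketch for reading such a setting out of the file, so it can be checked in a script. It only handles simple key=value lines and is not a full INI parser.

```shell
#!/usr/bin/env bash
# Sketch: print the value of KEY in [SECTION] of a simple INI-style file.
# Usage: ini_get FILE SECTION KEY. Lines starting with # or ; are ignored.
ini_get() {
  local file="$1" section="$2" key="$3"
  awk -v section="$section" -v key="$key" '
    /^[ \t]*[#;]/ { next }
    /^[ \t]*\[/ {
      # Strip the brackets and surrounding blanks to get the section name.
      name = $0
      gsub(/^[ \t]*\[|\][ \t]*$/, "", name)
      in_section = (name == section)
      next
    }
    in_section {
      eq = index($0, "=")
      if (eq == 0) next
      k = substr($0, 1, eq - 1); v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) print v
    }
  ' "$file"
}
```

For the file above, `ini_get /etc/NetworkManager/NetworkManager.conf ifupdown managed` prints true.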

virsh net-list --all gives me this:

```text
 Name      State      Autostart   Persistent
----------------------------------------------------------
 br0       active     yes         yes
 bridged   inactive   yes         yes
 default   active     yes         yes
```

The bridge I'm trying to use with my vm is br0.
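Note that the libvirt network called br0 in this list is a separate object from the host bridge br0. `virsh net-dumpxml br0` shows what it actually points at. Below is a short sketch for spotting networks that are defined but not running. It reads `virsh net-list --all` output on stdin and assumes the usual layout: one header row, one dashed separator, then four columns per network.

```shell
#!/usr/bin/env bash
# Sketch: print libvirt networks whose State column is not "active".
# Input: `virsh net-list --all` output (header + dashed line + rows).
libvirt_inactive_networks() {
  awk 'NR > 2 && NF >= 4 && $2 != "active" { print $1 }'
}
```

For the output above, `virsh net-list --all | libvirt_inactive_networks` would list bridged. After that, `virsh net-start bridged` should print libvirt's reason if the network refuses to start.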

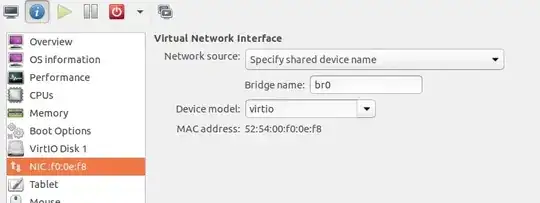

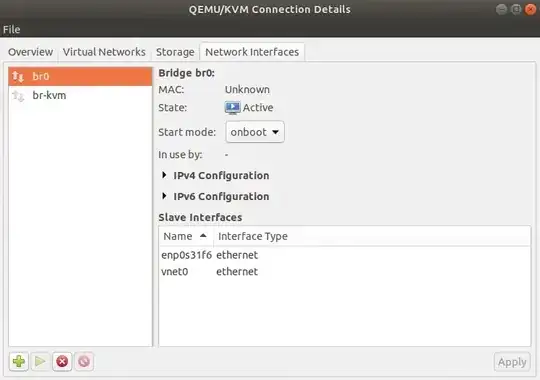

Here is the config of br0:

The second bridge was an attempt to start over: simply create a new bridge and connect the vm to it. But adding the bridge had no effect, probably because Virtual Machine Manager seems to write it into /etc/network/interfaces rather than into a YAML file in /etc/netplan/.

Here is my /etc/network/interfaces:

```text
##auto lo br0
##iface lo inet loopback
##auto br1
##iface br1 inet dhcp
bridge_ports enp0s31f6
bridge_stp on
bridge_fd 0.0
##iface br0 inet dhcp
bridge_ports enp0s31f6

auto br0
iface br0 inet dhcp
bridge_ports enp0s31f6
bridge_stp on
bridge_fd 0.0

auto br-kvm
iface br-kvm inet dhcp
bridge_ports enp0s31f6
bridge_stp on
bridge_fd 0.0
```

Note how I commented out everything (to make sure that this file was not somehow affecting my config) only to have it added back at the bottom, as mentioned above.
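To see what ifupdown would still act on, it helps to filter out the commented lines. Below is a minimal sketch that prints only the active auto, allow-* and iface lines of an ifupdown file. For the file above, that leaves the auto/iface stanzas for br0 and br-kvm.

The stray bridge_ports/bridge_stp/bridge_fd lines near the top no longer sit under any active iface stanza. As far as I know, ifupdown rejects option lines outside a stanza. That is worth confirming with `ifquery --list` if ifupdown is installed.

```shell
#!/usr/bin/env bash
# Sketch: print the active (uncommented) auto, allow-* and iface lines of an
# ifupdown file such as /etc/network/interfaces. Option lines like
# bridge_ports are deliberately not printed.
active_ifupdown_stanzas() {
  awk '$1 == "auto" || $1 == "iface" || $1 ~ /^allow-/' "$1"
}
```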

ifconfig gives me a long list of bridges (most named something like br-a5ffb2301edc), and I have no idea where they come from (I guess I unknowingly created them during my countless hours of testing). I won't paste them all here, only br0 and the actual ethernet interface:

```text
br0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 192.168.1.4 netmask 255.255.255.0 broadcast 192.168.1.255
        inet6 fe80::4e52:62ff:fe09:7e59 prefixlen 64 scopeid 0x20<link>
        ether 4c:52:62:09:7e:59 txqueuelen 1000 (Ethernet)
        RX packets 806319 bytes 84505505 (84.5 MB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 307846 bytes 845321927 (845.3 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

enp0s31f6: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        ether 4c:52:62:09:7e:59 txqueuelen 1000 (Ethernet)
        RX packets 817196 bytes 101316866 (101.3 MB)
        RX errors 0 dropped 13 overruns 0 frame 0
        TX packets 821152 bytes 876709681 (876.7 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
        device interrupt 16 memory 0xef000000-ef020000
```
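One possible explanation for the many br-<hex> bridges is Docker. This is an assumption, since the post does not mention Docker. Docker names the Linux bridge of each user-defined network "br-" followed by the first 12 hex digits of the network ID, which matches br-a5ffb2301edc.

If Docker is installed, it also sets the iptables FORWARD policy to DROP. When the br_netfilter module is loaded with net.bridge.bridge-nf-call-iptables=1, frames crossing br0 (including a vm's DHCP traffic) pass through that chain. Below are two small filters for checking both. They read interface names or `iptables -S FORWARD` output on stdin.

```shell
#!/usr/bin/env bash
# Sketch (assumption: Docker naming). Print interface names that look like
# Docker user-defined bridges: "br-" plus exactly 12 lowercase hex digits.
docker_style_bridges() {
  grep -E '^br-[0-9a-f]{12}$'
}

# Read `iptables -S FORWARD` on stdin and print the chain's default policy
# from its "-P FORWARD <POLICY>" line.
forward_policy() {
  awk '$1 == "-P" && $2 == "FORWARD" { print $3 }'
}
```

Checks to run on the host:

- `ip -o link show type bridge | awk -F': ' '{print $2}' | docker_style_bridges` lists Docker-style bridges.
- `docker network ls` maps them back to networks.
- `sudo iptables -S FORWARD | forward_policy` prints the FORWARD policy.
- `cat /proc/sys/net/bridge/bridge-nf-call-iptables` shows the sysctl value. That file only exists while br_netfilter is loaded.

If the policy is DROP and the sysctl is 1, there is a quick way to test this theory. Temporarily run `sudo sysctl net.bridge.bridge-nf-call-iptables=0`, then re-run DHCP in the vm (for example with `sudo netplan apply`).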

This is how I have been testing network connectivity on my vm:

```text
$ ping 8.8.8.8
connect: Network is unreachable
```
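On the vm side, "connect: Network is unreachable" means the kernel found no route to 8.8.8.8 at all. That usually means the vm has no default route, typically because ens3 never got a DHCP lease. Below is a sketch of two checks to run inside the vm. They read `ip route` and `ip -4 -o addr show` output on stdin.

```shell
#!/usr/bin/env bash
# Sketch: succeed if `ip route` output (stdin) contains a default route.
has_default_route() {
  awk '$1 == "default" { found = 1 } END { exit !found }'
}

# Sketch: succeed if `ip -4 -o addr show` output (stdin) lists an IPv4
# address on interface IFACE (second field is the interface name).
has_ipv4() {
  local iface="$1"
  awk -v ifc="$iface" '$2 == ifc && $3 == "inet" { found = 1 } END { exit !found }'
}
```

Inside the vm, run:

- `ip route | has_default_route || echo 'no default route'`
- `ip -4 -o addr show | has_ipv4 ens3 || echo 'ens3 has no IPv4 address'`

If ens3 has no address, the problem lies somewhere on the path between the vm and the DHCP server: the vm config, the host bridge, or filtering on the host.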

Edit: Here is the content of the vm's /etc/netplan/50-cloud-init.yaml:

```yaml
network:
  version: 2
  # renderer: networkd
  ethernets:
    ens3:
      addresses: []
      dhcp4: true
      dhcp6: false
      optional: true
```

I cannot recall why, months ago, I commented out the renderer line (nor do I know which renderer is assumed by default now), but this exact config has worked.
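On the missing renderer: netplan's documentation gives networkd as the default renderer. With that line commented out, the vm should therefore be using systemd-networkd. Inside the vm, `networkctl status ens3` and `journalctl -u systemd-networkd` are the places to look.

Below is a sketch that reports a netplan file's renderer with that default applied. It only looks for an uncommented renderer: key. It does not distinguish a global key from a per-device one, which is a simplification.

```shell
#!/usr/bin/env bash
# Sketch: print the renderer declared in a netplan YAML file, or "networkd"
# (netplan's documented default) when no uncommented renderer: key exists.
netplan_renderer() {
  local r
  r=$(awk '/^[ \t]*renderer:/ { print $2; exit }' "$1")
  printf '%s\n' "${r:-networkd}"
}
```

Run `netplan_renderer /etc/netplan/50-cloud-init.yaml` inside the vm and `netplan_renderer /etc/netplan/01-netcfg.yaml` on the host.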

It also occurred to me that cloud-init might be messing things up for me (on the host), so I checked /var/log/cloud-init-output.log to see whether it was doing anything:

```text
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 running 'modules:config' at Fri, 21 Feb 2020 02:24:08 +0000. Up 50.91 seconds.
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 running 'modules:final' at Fri, 21 Feb 2020 02:24:15 +0000. Up 56.59 seconds.
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 finished at Fri, 21 Feb 2020 02:24:15 +0000. Datasource DataSourceNoCloud [seed=/var/lib/cloud/seed/nocloud-net][dsmode=net]. Up 56.76 seconds
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 running 'init-local' at Fri, 21 Feb 2020 02:59:28 +0000. Up 10.48 seconds.
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 running 'init' at Fri, 21 Feb 2020 03:04:29 +0000. Up 311.21 seconds.
ci-info: +++++++++++++++++++++++++++++++++++++++++Net device info+++++++++++++++++++++++++++++++++++++++++
ci-info: +-----------+-------+------------------------------+---------------+--------+-------------------+
ci-info: | Device    | Up    | Address                      | Mask          | Scope  | Hw-Address        |
ci-info: +-----------+-------+------------------------------+---------------+--------+-------------------+
ci-info: | br-kvm    | False | .                            | .             | .      | f2:7a:46:82:f9:e0 |
ci-info: | br0       | True  | 192.168.1.4                  | 255.255.255.0 | global | 4c:52:62:09:7e:59 |
ci-info: | br0       | True  | fe80::4e52:62ff:fe09:7e59/64 | .             | link   | 4c:52:62:09:7e:59 |
ci-info: | enp0s31f6 | True  | .                            | .             | .      | 4c:52:62:09:7e:59 |
ci-info: | lo        | True  | 127.0.0.1                    | 255.0.0.0     | host   | .                 |
ci-info: | lo        | True  | ::1/128                      | .             | host   | .                 |
ci-info: +-----------+-------+------------------------------+---------------+--------+-------------------+
ci-info: +++++++++++++++++++++++++++++Route IPv4 info+++++++++++++++++++++++++++++
ci-info: +-------+-------------+-------------+---------------+-----------+-------+
ci-info: | Route | Destination | Gateway     | Genmask       | Interface | Flags |
ci-info: +-------+-------------+-------------+---------------+-----------+-------+
ci-info: | 0     | 0.0.0.0     | 192.168.1.1 | 0.0.0.0       | br0       | UG    |
ci-info: | 1     | 169.254.0.0 | 0.0.0.0     | 255.255.0.0   | br0       | U     |
ci-info: | 2     | 192.168.1.0 | 0.0.0.0     | 255.255.255.0 | br0       | U     |
ci-info: +-------+-------------+-------------+---------------+-----------+-------+
ci-info: +++++++++++++++++++Route IPv6 info+++++++++++++++++++
ci-info: +-------+-------------+---------+-----------+-------+
ci-info: | Route | Destination | Gateway | Interface | Flags |
ci-info: +-------+-------------+---------+-----------+-------+
ci-info: | 1     | fe80::/64   | ::      | br0       | U     |
ci-info: | 3     | local       | ::      | br0       | U     |
ci-info: | 4     | ff00::/8    | ::      | br0       | U     |
ci-info: +-------+-------------+---------+-----------+-------+
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 running 'modules:config' at Fri, 21 Feb 2020 03:04:33 +0000. Up 315.26 seconds.
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 running 'modules:final' at Fri, 21 Feb 2020 03:04:39 +0000. Up 321.85 seconds.
Cloud-init v. 19.4-33-gbb4131a2-0ubuntu1~18.04.1 finished at Fri, 21 Feb 2020 03:04:40 +0000. Datasource DataSourceNoCloud [seed=/var/lib/cloud/seed/nocloud-net][dsmode=net]. Up 322.15 seconds
```

Seeing that it was active, I disabled it with sudo touch /etc/cloud/cloud-init.disabled, but my connectivity problem is still not solved.
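The log above shows a long delay in the boot logged at 02:59. 'init-local' ran at 10.48 s of uptime but 'init' only at 311.21 s, a gap of roughly five minutes. The cause is not visible from the log itself. A long wait for the network to come up is one possibility. `systemd-analyze critical-chain cloud-init.service` or `systemd-analyze blame` should show what the boot was waiting on.

Below is a small sketch that pulls the stage timings out of /var/log/cloud-init-output.log (read on stdin) so such gaps stand out.

```shell
#!/usr/bin/env bash
# Sketch: print "<stage> <uptime-seconds>" for each cloud-init "running" line.
# q holds a single-quote character so the awk program can match quoted stage
# names without nested shell quoting.
cloud_init_stage_times() {
  awk -v q="'" '
    match($0, "running " q "[^" q "]+" q) {
      # Skip the 9-character prefix (running, a space, the opening quote)
      # and drop the closing quote to get the bare stage name.
      stage = substr($0, RSTART + 9, RLENGTH - 10)
      if (match($0, /Up [0-9.]+ seconds/)) {
        # Drop the leading "Up " (3 chars) and trailing " seconds" (8 chars).
        print stage, substr($0, RSTART + 3, RLENGTH - 11)
      }
    }
  '
}
```

Usage: `cloud_init_stage_times < /var/log/cloud-init-output.log`. Lines such as "finished at ..." are skipped because they name no stage.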

Edit 2: Something else I checked (based on this post) is whether the network interface of my virtual machine is still attached to my bridge. To get the name of the interface, I ran virsh domiflist LMS (LMS being the name of my vm) and got this:

```text
Interface  Type       Source     Model       MAC
-------------------------------------------------------
vnet0      bridge     br0        virtio      52:54:00:f0:0e:f8
```

It already says br0 under Source, but I'm not sure what exactly that means, so I double-checked using brctl show br0, which confirmed that vnet0 is attached to br0:

```text
bridge name     bridge id               STP enabled     interfaces
br0             8000.4c5262097e59       yes             enp0s31f6
                                                        vnet0
```

I was so hoping to find vnet0 to be missing so that I could fix it, but unfortunately, that was not the problem either.
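The Type column in the virsh domiflist output also answers what "br0 under Source" means. Type bridge means the vm's interface is defined as type='bridge' and plugged straight into the host bridge br0. The libvirt network named br0 is therefore not involved for this vm. An interface attached through a libvirt network shows type network instead.

Since vnet0 is attached, the next layer is whether frames actually cross the bridge. Below is a sketch for checking attachment from sysfs, where the kernel exposes a master symlink for each bridge port. The second argument is hypothetical and only exists for testing against a fake tree.

```shell
#!/usr/bin/env bash
# Sketch: print the master device (e.g. the bridge) IFACE is enslaved to by
# reading /sys/class/net/IFACE/master; prints "none" and fails otherwise.
bridge_of() {
  local iface="$1" sysfs="${2:-/sys/class/net}" link
  link="$sysfs/$iface/master"
  if [ -L "$link" ]; then
    basename "$(readlink "$link")"
  else
    echo "none"
    return 1
  fi
}
```

Here `bridge_of vnet0` and `bridge_of enp0s31f6` should both print br0. As a next step, run `sudo tcpdump -ni vnet0 port 67 or port 68` on the host while re-running DHCP in the vm. This shows whether the vm's DHCP requests leave and whether replies come back:

- Requests without replies point at the bridge or filtering path.
- No requests at all point at the vm itself.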

/etc/network/interfaces as described here: https://askubuntu.com/a/1052023/775670 But I neither understand why it didn't work nor how I could systematically troubleshoot this, so I'm still curious about answers. – Christoph Mar 06 '20 at 22:34