I have a file called "my_file" containing md5 hashes and file path names. I'd like to identify the hash values in the first column that occur more than once (not collapse them, as sort -u would) and display the associated file path from the second column next to each one.

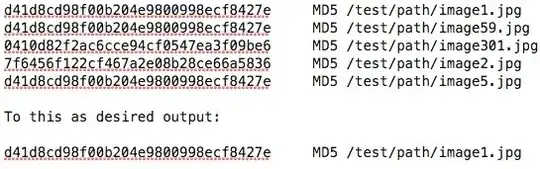

Example: From this # cat my_file

NOTE: Matching hashes (checksums) mean that, with high probability, the entries refer to the same file content.
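
To make the goal concrete, here is a rough sketch of the kind of output I'm after. It assumes each line has the form `<md5> <path>` with whitespace between the two columns; the function name `find_dup_hashes` is made up for illustration, and this may well not be the best approach.

```shell
# Hypothetical helper: print every line whose first column (the hash)
# appears more than once in the given file.
# Assumption: columns are whitespace-separated, hash first, path second.
find_dup_hashes() {
    # The file is read twice: the first pass counts each hash,
    # the second pass prints only the lines whose hash was counted more than once.
    awk 'NR == FNR { count[$1]++; next } count[$1] > 1' "$1" "$1"
}
```

Calling `find_dup_hashes my_file | sort` would then group the lines that share a hash together, each with its path.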

Any help would be appreciated.