I have a 120 GB SSD dedicated to the root filesystem (`/`) and a separate HDD for `/home`, but for some reason my root drive is full and I can't see why.

I've tried apt's `autoclean`, `autoremove` and `clean`, but they haven't helped.

I've been having problems with LightDM and spent hours scanning a faulty USB drive with TestDisk. It's possible that some big error logs were created, though I don't know where.

Is there a way for me to troubleshoot this?

```
$ df -h
Filesystem      Size  Used Avail Use% Mounted on
udev            2.9G     0  2.9G   0% /dev
tmpfs           588M  1.8M  586M   1% /run
/dev/nvme0n1p2   96G   91G  284M 100% /
tmpfs           2.9G   26M  2.9G   1% /dev/shm
tmpfs           5.0M  4.0K  5.0M   1% /run/lock
tmpfs           2.9G     0  2.9G   0% /sys/fs/cgroup
/dev/loop1      114M  114M     0 100% /snap/audacity/675
/dev/loop2      157M  157M     0 100% /snap/chromium/1213
/dev/loop4       55M   55M     0 100% /snap/core18/1754
/dev/loop3       97M   97M     0 100% /snap/core/9665
/dev/loop5       97M   97M     0 100% /snap/core/9436
/dev/loop6      159M  159M     0 100% /snap/chromium/1229
/dev/loop7      162M  162M     0 100% /snap/gnome-3-28-1804/128
/dev/loop9      146M  146M     0 100% /snap/firefox/392
/dev/loop10     256M  256M     0 100% /snap/gnome-3-34-1804/36
/dev/loop8      161M  161M     0 100% /snap/gnome-3-28-1804/116
/dev/loop11     145M  145M     0 100% /snap/firefox/387
/dev/loop12     256K  256K     0 100% /snap/gtk2-common-themes/13
/dev/loop0      114M  114M     0 100% /snap/audacity/666
/dev/loop13     256K  256K     0 100% /snap/gtk2-common-themes/9
/dev/loop14      63M   63M     0 100% /snap/gtk-common-themes/1506
/dev/loop15     116M  116M     0 100% /snap/spek/43
/dev/loop16      30M   30M     0 100% /snap/snapd/8140
/dev/nvme0n1p1  188M  7.8M  180M   5% /boot/efi
/dev/loop17     291M  291M     0 100% /snap/vlc/1700
/dev/loop18      55M   55M     0 100% /snap/core18/1880
/dev/loop19     112M  112M     0 100% /snap/simplescreenrecorder-brlin/69
/dev/loop20      30M   30M     0 100% /snap/snapd/8542
/dev/loop21     291M  291M     0 100% /snap/vlc/1620
/dev/sda1       3.4T  490G  2.7T  16% /home
tmpfs           588M   24K  588M   1% /run/user/1000
```
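One way to narrow this down from the `df` output (`/` on `/dev/nvme0n1p2` is at 100%, while `/home` on `/dev/sda1` is not) is to ask `du` and `find` where the space went, without crossing into other mounts. This is a minimal sketch assuming GNU coreutils and findutils (standard on Ubuntu); the function names `biggest_dirs` and `biggest_files` are hypothetical, and they need to run as root so that directories like `/var/log` are readable.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for finding what fills a single filesystem.
# Assumes GNU du/sort/find (du --max-depth, sort -h, find -xdev).

# Print the N largest directories (default 15) directly under ROOT (default /).
# -x keeps du on ROOT's filesystem, so /home (separate disk) and the
# /snap loop mounts are not counted.
biggest_dirs() {
  local root="${1:-/}" n="${2:-15}"
  du -xh --max-depth=1 "$root" 2>/dev/null | sort -h | tail -n "$n"
}

# List regular files under ROOT (default /) larger than SIZE (default +1G),
# again without leaving ROOT's filesystem (-xdev).
biggest_files() {
  local root="${1:-/}" size="${2:-+1G}"
  find "$root" -xdev -type f -size "$size" -exec ls -lh {} + 2>/dev/null
}
```

For example, after defining the functions in a root shell (such as one opened with `sudo -i`), `biggest_dirs /` shows the heaviest top-level directory; `biggest_dirs /var` and `biggest_files /var/log` then drill down one level at a time.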

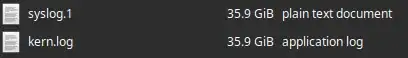

OK, so `syslog.1` and `kern.log.1` are 35.9 GB each, and they probably would have grown even bigger if they could. This caused major problems with my system: LightDM stopped working and I got a login loop on boot.

EDIT: I need to open these to find out what caused this, but I suspect that opening that much data will lock up my PC. Can anyone confirm this, or suggest a way to see the contents?

EDIT: Cause found, question answered. It may be better to ask a separate question about how to read/open the files.

EDIT: The cause seems to have been TestDisk or the faulty drive. I aborted a deep scan on the drive and unplugged it. The top 20 lines of syslog, thanks to Soren A, are:

```
Jul 27 14:09:08 ryzen kernel: [19606.795097] sd 10:0:0:0: [sdc] tag#0 device offline or changed
```

You can use `tail -XX syslog.1` to view the last XX lines of the file (e.g. `tail -20 syslog.1`), or use `less` to browse through the file. Both commands will only load a small part of the file into memory. Also, something like `tail -300 syslog.1 | less` will let you browse through the last 300 lines of the file. – Soren A Jul 28 '20 at 10:58

…`journald` they are not needed anymore. – Martin Schröder Jul 30 '20 at 13:11
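Along the lines of Soren A's suggestion, here is a minimal sketch for inspecting a log of this size with streaming tools only, so memory use does not depend on the file size. The names `log_peek` and `log_top_messages` are hypothetical, and the timestamp-stripping pattern assumes the classic rsyslog layout shown in the log line quoted earlier (`Jul 27 14:09:08 ryzen kernel: [19606.795097] ...`). Counting with `awk` rather than `sort | uniq -c` is a deliberate choice: GNU `sort` spills large inputs to temporary files under `/tmp`, which here sits on the already-full root filesystem, while `awk` only keeps one counter per distinct message.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for inspecting a very large syslog-style file.
# head, tail, sed and awk read the file as a stream.

# Print the first and last N lines (default 20) of FILE.
log_peek() {
  local file="$1" n="${2:-20}"
  head -n "$n" "$file"
  echo "..."
  tail -n "$n" "$file"
}

# Print the N most frequent messages (default 10) in FILE with their counts.
# Assumed line layout: "Mon DD HH:MM:SS host tag: [uptime] message".
# The date, time, host name and kernel uptime stamp are stripped so that
# repeats of the same message compare equal.
log_top_messages() {
  local file="$1" n="${2:-10}"
  sed -E 's/^[A-Z][a-z]{2} +[0-9]+ [0-9:]{8} [^ ]+ //; s/\[ *[0-9]+\.[0-9]+\] //' "$file" |
    awk '{ count[$0]++ } END { for (msg in count) print count[msg], msg }' |
    sort -rn |
    head -n "$n"
}
```

For example, `log_peek /var/log/syslog.1 20` shows both ends of the file, and `log_top_messages /var/log/syslog.1` would show whether a single repeated kernel message accounts for most of the 35.9 files' bulk.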