Background Problems

I have a dedicated server (Ubuntu 22.04.1 LTS) with five public IPs:

- xxx.xxx.51.20 (main IP)

- xxx.xxx.198.104

- xxx.xxx.198.105

- xxx.xxx.198.106

- xxx.xxx.198.107

I want to host several KVM VMs on this server and give some of them a public IP from the host; in other words, several Virtual Private Servers (VPS), each with its own public IP.

If I'm not mistaken, I need to create a bridge network. I have already done that: br0 is a bridge with all the public IPs assigned to it.

Currently, this is the host's network configuration:

```
$ cat /etc/netplan/50-cloud-init.yaml
network:
  version: 2
  renderer: networkd
  ethernets:
    eno1:
      dhcp4: false
      dhcp6: false
      match:
        macaddress: xx:xx:xx:2a:19:d0
      set-name: eno1
  bridges:
    br0:
      interfaces: [eno1]
      addresses:
        - xxx.xxx.51.20/32
        - xxx.xxx.198.104/32
        - xxx.xxx.198.105/32
        - xxx.xxx.198.106/32
        - xxx.xxx.198.107/32
      routes:
        - to: default
          via: xxx.xxx.51.1
          metric: 100
          on-link: true
      mtu: 1500
      nameservers:
        addresses: [8.8.8.8]
      parameters:
        stp: true
        forward-delay: 4
      dhcp4: no
      dhcp6: no
```

With this configuration, all of the IPs point to the host, and I can reach the host on each of them.
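Because every address, including the one meant for the VM, is configured on br0 on the host, the host itself will answer for all of them. A quick way to see which local interface owns a given address is to filter `ip -o -4 addr show`. The helper below is a minimal sketch (the `ip_owner` name is hypothetical) that relies only on the one-line-per-address format of `ip -o`:

```sh
# Print the interface that holds IPv4 address $1, reading the output of
# `ip -o -4 addr show` on stdin. Exit status 0 if the address is assigned
# locally, 1 otherwise.
ip_owner() {
    awk -v want="$1" '
        $3 == "inet" {
            split($4, a, "/")            # $4 is ADDRESS/PREFIX
            if (a[1] == want) { print $2; found = 1 }
        }
        END { exit !found }'
}
```

On the host, `ip -o -4 addr show | ip_owner xxx.xxx.198.104` printing `br0` would mean the host, not the VM, holds that address.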

Here is the host's `ip a` output:

```
$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br0 state UP group default qlen 1000
    link/ether xx:xx:xx:2a:19:d0 brd ff:ff:ff:ff:ff:ff
    altname enp2s0
3: eno2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether xx:xx:xx:2a:19:d1 brd ff:ff:ff:ff:ff:ff
    altname enp3s0
4: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether xx:xx:xx:59:36:78 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.51.20/32 scope global br0
       valid_lft forever preferred_lft forever
    inet xxx.xxx.198.104/32 scope global br0
       valid_lft forever preferred_lft forever
    inet xxx.xxx.198.105/32 scope global br0
       valid_lft forever preferred_lft forever
    inet xxx.xxx.198.106/32 scope global br0
       valid_lft forever preferred_lft forever
    inet xxx.xxx.198.107/32 scope global br0
       valid_lft forever preferred_lft forever
    inet6 xxxx::xxxx:xxxx:xxxx:3678/64 scope link
       valid_lft forever preferred_lft forever
5: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether xx:xx:xx:e2:3e:ea brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
       valid_lft forever preferred_lft forever
6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether xx:xx:xx:ff:4c:03 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
9: vnet2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UNKNOWN group default qlen 1000
    link/ether xx:xx:xx:6b:01:05 brd ff:ff:ff:ff:ff:ff
    inet6 xxxx::xxxx:ff:fe6b:105/64 scope link
       valid_lft forever preferred_lft forever
10: vnet3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master virbr0 state UNKNOWN group default qlen 1000
    link/ether xx:xx:xx:16:07:56 brd ff:ff:ff:ff:ff:ff
    inet6 xxxx::xxxx:ff:fe16:756/64 scope link
       valid_lft forever preferred_lft forever
```

Then I created a KVM VM with two network interfaces attached (one on br0 and the other on the NAT-based bridge).

In the VM, I configured netplan like this:

```
$ cat /etc/netplan/00-installer-config.yaml
network:
  version: 2
  ethernets:
    enp1s0:
      addresses:
        - xxx.xxx.198.104/32
      routes:
        - to: default
          via: xxx.xxx.198.1
          metric: 100
          on-link: true
      nameservers:
        addresses:
          - 1.1.1.1
          - 1.1.0.0
          - 8.8.8.8
          - 8.8.4.4
        search: []
    enp7s0:
      dhcp4: true
      dhcp6: true
      match:
        macaddress: xx:xx:xx:16:07:56
```

Here the VM uses enp1s0 for the static public IP (xxx.xxx.198.104) and enp7s0 for NAT through the host (192.168.122.xxx).

Running `ip a` in the VM shows that it gets the correct IPs.

The problems:

- When I SSH from my laptop directly to the VM's public IP (xxx.xxx.198.104), I end up connected to the host, not the VM.

- In the VM, if I disconnect the NAT network (enp7s0) and keep only enp1s0 with the public IP, the VM cannot reach the internet.
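To see which interface the VM actually uses for outbound traffic in each case, `ip route get` reports the chosen route and device. A minimal helper that extracts the device name (the `route_dev` name is hypothetical):

```sh
# Print the egress device from `ip route get <address>` output read on stdin,
# e.g. "1.1.1.1 via 192.168.122.1 dev enp7s0 src ..." -> enp7s0
route_dev() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}
```

Running `ip route get 1.1.1.1 | route_dev` in the VM with and without enp7s0 connected, alongside `ip route show default`, shows which default route is actually in effect.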

Is there something I missed?

Update 1

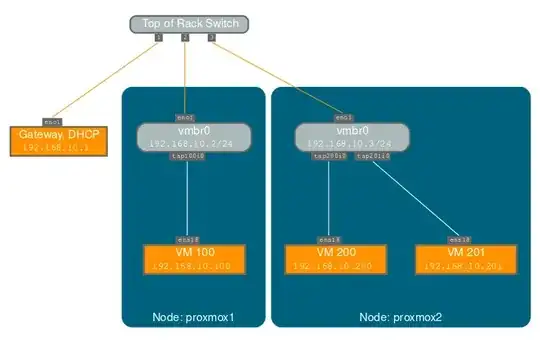

I contacted the DC provider. They have documentation on adding a public IP to a VM (https://docs.ovh.com/gb/en/dedicated/network-bridging/), but it only applies when the host runs Proxmox. According to it, I need to create a bridge network with the specified MAC address attached to it, and then apply the public IP inside the VM.

How do I do that on Ubuntu?
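In libvirt terms, that arrangement corresponds to a VM NIC of type `bridge` on a host bridge that enslaves the physical uplink, with the NIC's MAC set to the MAC address specified by the provider. The sketch below only prints the `virsh attach-interface` command by default; `br0` (from the first netplan configuration), `vm_name`, and the MAC value are assumptions to adapt, and the helper name is hypothetical:

```sh
# Hypothetical helper: attach a virtio NIC on host bridge $2 to libvirt domain $1,
# using MAC address $3 (the one specified by the provider for the public IP).
# DRY_RUN=1 (default) only prints the command; DRY_RUN=0 runs it with sudo.
attach_bridged_nic() {
    local domain="$1" bridge="$2" mac="$3"
    set -- virsh attach-interface --domain "$domain" --type bridge \
        --source "$bridge" --mac "$mac" --model virtio --config
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
    else
        sudo "$@"
    fi
}
```

`--config` changes the persistent domain definition, so the NIC takes effect on the next VM start.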

Update 2

I managed to create the bridge network with my public IPs on the host side using the following commands:

```
sudo ip link add name test-bridge link eth0 type macvlan
sudo ip link set dev test-bridge address MAC_ADDRESS
sudo ip link set test-bridge up
sudo ip addr add ADDITIONAL_IP/32 dev test-bridge
```

I repeated this four times to add all my public IPs to the host.
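The four commands can be repeated with a loop instead of by hand. This is a dry-run sketch: it assumes `eno1` as the parent (the interface that appears in the later `ip a` output as `vmbrN@eno1`) and reads hypothetical `NAME MAC IP` lines from standard input; nothing is changed unless `DRY_RUN=0` is set:

```sh
# Print (DRY_RUN=1, default) or execute with sudo (DRY_RUN=0) a command.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else sudo "$@"; fi
}

# Create one macvlan per input line "NAME MAC IP" on parent interface $1,
# mirroring the four `ip` commands above.
add_macvlans() {
    local parent="$1" name mac addr
    while read -r name mac addr; do
        [ -n "$name" ] || continue          # skip blank lines
        run ip link add name "$name" link "$parent" type macvlan
        run ip link set dev "$name" address "$mac"
        run ip link set "$name" up
        run ip addr add "$addr/32" dev "$name"
    done
}
```

For example, `printf '%s\n' 'vmbr1 MAC_1 xxx.xxx.198.104' 'vmbr2 MAC_2 xxx.xxx.198.105' | add_macvlans eno1` prints the eight commands for two devices.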

Now my host configuration is:

```
$ cat /etc/netplan/50-cloud-init.yaml
network:
  version: 2
  ethernets:
    eno1:
      dhcp4: true
      match:
        macaddress: xx:xx:xx:2a:19:d0
      set-name: eno1
```

And `ip a`:

```
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether xx:xx:xx:2a:19:d0 brd ff:ff:ff:ff:ff:ff
    altname enp2s0
    inet xxx.xxx.51.20/24 metric 100 brd xx.xx.51.255 scope global dynamic eno1
       valid_lft 84658sec preferred_lft 84658sec
    inet6 xxxx::xxxx:xxxx:fe2a:19d0/64 scope link
       valid_lft forever preferred_lft forever
3: eno2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether xx:xx:xx:2a:19:d1 brd ff:ff:ff:ff:ff:ff
    altname enp3s0
4: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether xx:xx:xx:e2:3e:ea brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
       valid_lft forever preferred_lft forever
5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether xx:xx:xx:e1:2b:ce brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
6: vmbr1@eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether xx:xx:xx:79:26:12 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.104/32 scope global vmbr1
       valid_lft forever preferred_lft forever
    inet6 xxxx::xxxx:xxxx:2612/64 scope link
       valid_lft forever preferred_lft forever
7: vmbr2@eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether xx:xx:xx:f3:e2:85 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.105/32 scope global vmbr2
       valid_lft forever preferred_lft forever
    inet6 xxxx::xxxx:xxxx:e285/64 scope link
       valid_lft forever preferred_lft forever
8: vmbr3@eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether xx:xx:xx:48:a8:c9 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.106/32 scope global vmbr3
       valid_lft forever preferred_lft forever
    inet6 xxxx::xxxx:xxxx:a8c9/64 scope link
       valid_lft forever preferred_lft forever
9: vmbr4@eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether xx:xx:xx:eb:29:a1 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.107/32 scope global vmbr4
       valid_lft forever preferred_lft forever
    inet6 xxxx::xxxx:xxxx:29a1/64 scope link
       valid_lft forever preferred_lft forever
```

I pinged all the IP addresses from my laptop, and they all respond.

On the VM side, I edited the network interfaces with `sudo virsh edit vm_name`:

```
<interface type='network'>
  <mac address='xx:xx:xx:16:07:56'/>
  <source network='default'/>
  <model type='virtio'/>
  <address type='pci' domain='0x0000' bus='0x07' slot='0x00' function='0x0'/>
</interface>
<interface type='bridge'>
  <mac address='xx:xx:xx:79:26:12'/>
  <source bridge='vmbr1'/>
  <model type='virtio'/>
  <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
</interface>
```

Now the problem is that I cannot start the VM:

```
$ sudo virsh start lab
error: Failed to start domain 'vm_name'
error: Unable to add bridge vmbr1 port vnet8: Operation not supported
```

Have I missed something again?
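The error is consistent with vmbr1 not being a Linux bridge: the `ip link add ... type macvlan` commands create macvlan devices, while a libvirt `<interface type='bridge'>` attaches the VM's tap device (here vnet8) as a port of an existing bridge. The kernel exposes a `bridge` directory under `/sys/class/net/<dev>/` only for bridge devices, so a quick check could look like this (the function names and the `SYSFS_NET` override, added only so it can be tried against a scratch tree, are hypothetical); `ip -d link show vmbr1` also prints the link type in its details:

```sh
# Succeed if $1 is a Linux bridge: bridge devices have a "bridge" subdirectory
# in sysfs, macvlan devices do not. SYSFS_NET defaults to the real location.
is_bridge() {
    [ -d "${SYSFS_NET:-/sys/class/net}/$1/bridge" ]
}

# Report the result for each interface name given as an argument.
check_bridges() {
    local dev
    for dev in "$@"; do
        if is_bridge "$dev"; then
            echo "$dev: bridge"
        else
            echo "$dev: not a bridge"
        fi
    done
}
```

For example, `check_bridges virbr0 vmbr1` on the host would show whether each device can serve as a `<source bridge=.../>`.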

Update 3

I found out that interfaces created with `sudo ip link add ...` are only temporary; they are lost after the server reboots.

Please guide me to the proper host-side and VM-side configuration.
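Ubuntu 22.04 already uses systemd-networkd as netplan's renderer, and systemd-networkd can create macvlan devices from `.netdev` files, so one way to persist the devices from Update 2 is a `.netdev`/`.network` pair per device plus a drop-in that attaches it to the parent. This is a sketch under assumptions: the drop-in path assumes netplan's generated file for eno1 is named `10-netplan-eno1.network`, the `25-` prefixes and the function name are arbitrary, and it only reproduces the host-side macvlan devices; it does not change how libvirt attaches to them.

```sh
# Hypothetical sketch: write systemd-networkd files that recreate one macvlan
# device at boot, mirroring `ip link add ... type macvlan`, `ip link set ...
# address` and `ip addr add .../32`.
# Args: target dir (e.g. /etc/systemd/network), parent, name, MAC, IPv4 address.
write_macvlan_units() {
    local dir="$1" parent="$2" name="$3" mac="$4" addr="$5"
    # ASSUMPTION: netplan's generated file for the parent is named
    # 10-netplan-<parent>.network; this drop-in directory extends it.
    local dropin="$dir/10-netplan-$parent.network.d"
    mkdir -p "$dropin"

    # The macvlan device and its MAC address.
    printf '%s\n' '[NetDev]' "Name=$name" 'Kind=macvlan' "MACAddress=$mac" \
        > "$dir/25-$name.netdev"

    # The /32 address configured on the macvlan device.
    printf '%s\n' '[Match]' "Name=$name" '' '[Network]' "Address=$addr/32" \
        > "$dir/25-$name.network"

    # Attach the macvlan to its parent (MACVLAN= may be given more than once).
    printf '%s\n' '[Network]' "MACVLAN=$name" > "$dropin/$name.conf"
}
```

Writing into a scratch directory first lets you inspect the files; as root, writing into `/etc/systemd/network` and then running `networkctl reload` (or rebooting) should recreate the device.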

Thanks

Update 4

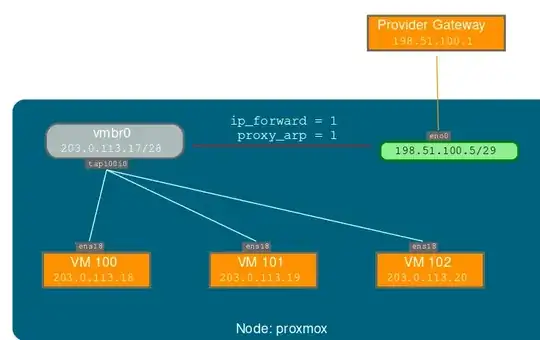

I read the Proxmox network configuration reference (https://pve.proxmox.com/wiki/Network_Configuration) and tried to implement the routed configuration on the host.

So I installed the ifupdown package and created the configuration below:

```
auto lo
iface lo inet loopback

auto eno0
iface eno0 inet static
    address xxx.xxx.51.20/24
    gateway xxx.xxx.51.254
    post-up echo 1 > /proc/sys/net/ipv4/ip_forward
    post-up echo 1 > /proc/sys/net/ipv4/conf/eno0/proxy_arp

auto vmbr0
iface vmbr0 inet static
    address xxx.xxx.198.104/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0

auto vmbr1
iface vmbr1 inet static
    address xxx.xxx.198.105/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0

auto vmbr2
iface vmbr2 inet static
    address xxx.xxx.198.106/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0

auto vmbr3
iface vmbr3 inet static
    address xxx.xxx.198.107/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0
```
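The two `post-up` lines only run when ifupdown successfully brings that interface up. A sysctl.d drop-in is an alternative that sets the same two kernel parameters independently of ifupdown. The file name and function are hypothetical, and the uplink is a parameter because this file refers to `eno0` while `ip a` on this host shows `eno1`:

```sh
# Hypothetical sketch: persist the settings that the post-up lines apply at runtime.
# Args: target dir (normally /etc/sysctl.d), uplink interface name.
write_forwarding_sysctl() {
    local dir="$1" uplink="$2"
    mkdir -p "$dir"
    printf '%s\n' \
        '# Forward IPv4 packets between interfaces (uplink <-> VM bridges).' \
        'net.ipv4.ip_forward = 1' \
        '# Proxy ARP on the uplink, as the second post-up line does.' \
        "net.ipv4.conf.$uplink.proxy_arp = 1" \
        > "$dir/99-vm-routing.conf"
}
```

As root, `write_forwarding_sysctl /etc/sysctl.d eno1 && sysctl --system` writes the file and loads it without a reboot.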

I also switched from systemd-networkd to ifupdown's `networking` service, as suggested here (https://askubuntu.com/a/1052023), with the following commands:

```
sudo systemctl unmask networking
sudo systemctl enable networking
sudo systemctl restart networking
sudo journalctl -xeu networking.service
sudo systemctl stop systemd-networkd.socket systemd-networkd networkd-dispatcher systemd-networkd-wait-online
sudo systemctl disable systemd-networkd.socket systemd-networkd networkd-dispatcher systemd-networkd-wait-online
sudo systemctl mask systemd-networkd.socket systemd-networkd networkd-dispatcher systemd-networkd-wait-online
```

With this configuration, the VM sees that it has two IPs (one private and one public).

However, I ran into another problem:

`sudo systemctl status networking` always shows the service as failed, with a log like this:

```
$ sudo systemctl status networking.service
× networking.service - Raise network interfaces
     Loaded: loaded (/lib/systemd/system/networking.service; enabled; vendor preset: enabled)
     Active: failed (Result: exit-code) since Tue 2022-12-13 18:51:27 UTC; 7min ago
       Docs: man:interfaces(5)
   Main PID: 1000 (code=exited, status=1/FAILURE)
        CPU: 631ms

Dec 13 18:51:25 hostname ifup[1054]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:25 hostname ifup[1066]: RTNETLINK answers: File exists
Dec 13 18:51:25 hostname ifup[1000]: ifup: failed to bring up eno1
Dec 13 18:51:26 hostname ifup[1132]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:26 hostname ifup[1215]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:27 hostname ifup[1298]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:27 hostname ifup[1381]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:27 hostname systemd[1]: networking.service: Main process exited, code=exited, status=1/FAILURE
Dec 13 18:51:27 hostname systemd[1]: networking.service: Failed with result 'exit-code'.
Dec 13 18:51:27 hostname systemd[1]: Failed to start Raise network interfaces.
```

And `journalctl`:

```
$ sudo journalctl -xeu networking.service
░░ The process' exit code is 'exited' and its exit status is 1.
Dec 13 18:49:08 hostname systemd[1]: networking.service: Failed with result 'exit-code'.
░░ Subject: Unit failed
░░ Defined-By: systemd
░░ Support: http://www.ubuntu.com/support
░░
░░ The unit networking.service has entered the 'failed' state with result 'exit-code'.
Dec 13 18:49:08 hostname systemd[1]: Failed to start Raise network interfaces.
░░ Subject: A start job for unit networking.service has failed
░░ Defined-By: systemd
░░ Support: http://www.ubuntu.com/support
░░
░░ A start job for unit networking.service has finished with a failure.
░░
░░ The job identifier is 3407 and the job result is failed.
-- Boot 50161a44ec43452692ce64fca20cce9d --
Dec 13 18:51:25 hostname systemd[1]: Starting Raise network interfaces...
░░ Subject: A start job for unit networking.service has begun execution
░░ Defined-By: systemd
░░ Support: http://www.ubuntu.com/support
░░
░░ A start job for unit networking.service has begun execution.
░░
░░ The job identifier is 49.
Dec 13 18:51:25 hostname ifup[1054]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:25 hostname ifup[1066]: RTNETLINK answers: File exists
Dec 13 18:51:25 hostname ifup[1000]: ifup: failed to bring up eno1
Dec 13 18:51:26 hostname ifup[1132]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:26 hostname ifup[1215]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:27 hostname ifup[1298]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:27 hostname ifup[1381]: /etc/network/if-up.d/resolved: 12: mystatedir: not found
Dec 13 18:51:27 hostname systemd[1]: networking.service: Main process exited, code=exited, status=1/FAILURE
░░ Subject: Unit process exited
░░ Defined-By: systemd
░░ Support: http://www.ubuntu.com/support
░░
░░ An ExecStart= process belonging to unit networking.service has exited.
░░
░░ The process' exit code is 'exited' and its exit status is 1.
Dec 13 18:51:27 hostname systemd[1]: networking.service: Failed with result 'exit-code'.
░░ Subject: Unit failed
░░ Defined-By: systemd
░░ Support: http://www.ubuntu.com/support
░░
░░ The unit networking.service has entered the 'failed' state with result 'exit-code'.
Dec 13 18:51:27 hostname systemd[1]: Failed to start Raise network interfaces.
░░ Subject: A start job for unit networking.service has failed
░░ Defined-By: systemd
░░ Support: http://www.ubuntu.com/support
░░
░░ A start job for unit networking.service has finished with a failure.
░░
░░ The job identifier is 49 and the job result is failed.
```

`ip a` output on the host:

```
$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether xx:xx:xx:2a:19:d0 brd ff:ff:ff:ff:ff:ff
    altname enp2s0
    inet xxx.xxx.51.20/24 brd xx.xxx.51.255 scope global eno1
       valid_lft forever preferred_lft forever
    inet6 xxxx::xxxx:xxxx:xxxx:19d0/64 scope link
       valid_lft forever preferred_lft forever
3: eno2: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether xx:xx:xx:2a:19:d1 brd ff:ff:ff:ff:ff:ff
    altname enp3s0
4: vmbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether xx:xx:xx:b3:96:06 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.104/24 brd xxx.xxx.198.255 scope global vmbr0
       valid_lft forever preferred_lft forever
5: vmbr1: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether xx:xx:xx:a8:1a:49 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.105/24 brd xxx.xxx.198.255 scope global vmbr1
       valid_lft forever preferred_lft forever
6: vmbr2: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether xx:xx:xx:d8:82:25 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.106/24 brd xxx.xxx.198.255 scope global vmbr2
       valid_lft forever preferred_lft forever
7: vmbr3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether xx:xx:xx:4d:aa:31 brd ff:ff:ff:ff:ff:ff
    inet xxx.xxx.198.107/24 brd xxx.xxx.198.255 scope global vmbr3
       valid_lft forever preferred_lft forever
8: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 52:54:00:e2:3e:ea brd ff:ff:ff:ff:ff:ff
    inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0
       valid_lft forever preferred_lft forever
9: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:8c:f2:63:f5 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
```

Although the VM sees both interfaces (private and public IP), I can't reach the VM's public IP from my laptop; it seems the host does not forward the connection to the VM.

If all of the above is caused by the networking service failing to start, is there a fix for that?

If netplan/systemd-networkd is the proper way to configure networking on Ubuntu 22.04, what is the correct netplan configuration for bridging in a case like this?
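In the routed example on the Proxmox page, the address on the bridge is the gateway for the VMs' subnet, not an address a VM itself uses; in the configuration above, each vmbrN carries the very address intended for a VM, so the host holds it. With single /32 addresses, one common alternative is to keep the VM's address off the host and add a host route to it via the VM's bridge. A dry-run sketch of that host side, assuming one VM per vmbrN and hypothetical `BRIDGE VM_IP` input lines (the VM's own default route is outside this sketch):

```sh
# Print (DRY_RUN=1, default) or execute with sudo (DRY_RUN=0) a command.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else sudo "$@"; fi
}

# For each input line "BRIDGE VM_IP": remove the host's IPv4 addresses from
# that bridge (here, only the VM's address lives there), then route the VM's
# /32 to the bridge so the host forwards traffic instead of answering itself.
route_vm_ips() {
    local bridge addr
    while read -r bridge addr; do
        [ -n "$bridge" ] || continue
        run ip -4 addr flush dev "$bridge"
        run ip route replace "$addr/32" dev "$bridge"
    done
}
```

For example, `printf 'vmbr0 xxx.xxx.198.104\n' | route_vm_ips` prints the two commands for the first VM.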

```
$ cat /etc/network/interfaces
auto lo
iface lo inet loopback

auto eno0
iface eno0 inet static
    address xxx.xxx.51.20/24
    gateway xxx.xxx.51.254
    post-up echo 1 > /proc/sys/net/ipv4/ip_forward
    post-up echo 1 > /proc/sys/net/ipv4/conf/eno0/proxy_arp

auto vmbr0
iface vmbr0 inet static
    address xxx.xxx.198.104/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0

auto vmbr1
iface vmbr1 inet static
    address xxx.xxx.198.105/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0

auto vmbr2
iface vmbr2 inet static
    address xxx.xxx.198.106/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0

auto vmbr3
iface vmbr3 inet static
    address xxx.xxx.198.107/24
    bridge-ports none
    bridge-stp off
    bridge-fd 0
```
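`ifup` can only configure interfaces the kernel knows about. The file declares `eno0`, while `ip a` on this host lists `eno1` and `eno2`, and the log reports a failure on `eno1`. A small sketch (hypothetical function name; `SYSFS_NET` is an override added only for trying it on a scratch tree) that lists `iface` entries with no matching device in `/sys/class/net`. Bridges that ifupdown creates itself will also be listed if they do not exist yet:

```sh
# Print every interface declared with "iface" in an interfaces(5) file ($1,
# default /etc/network/interfaces) that has no entry in /sys/class/net.
missing_ifaces() {
    local file="${1:-/etc/network/interfaces}" net="${SYSFS_NET:-/sys/class/net}" name
    for name in $(awk '$1 == "iface" { print $2 }' "$file"); do
        [ -e "$net/$name" ] || echo "$name"
    done
}
```

Running `missing_ifaces` on the host after boot, when vmbr0 to vmbr3 already exist, would list only names that do not match a real device.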