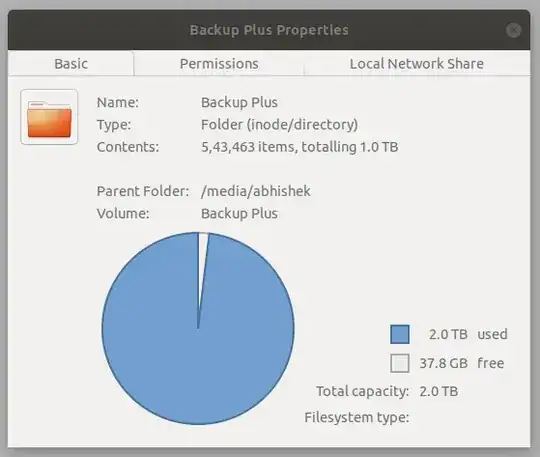

The system I am using runs Ubuntu 18.04 (no dual boot). I have a 2 TB Seagate external hard drive that I use to back up my Ph.D. data with rsync -rtvhP. Recently, rsync failed during one of my backups and I saw that the drive was full, even though my data is nowhere near 2 TB. Here is the output of df -h:

Filesystem Size Used Avail Use% Mounted on

...

/dev/sdb1 1.9T 1.8T 36G 99% /media/abhishek/Backup Plus

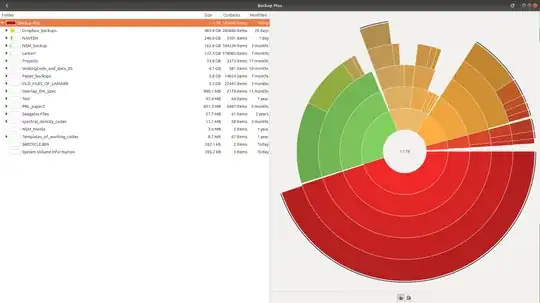

which shows that the drive is almost full. But when I run du -hs to see which directory is taking up so much space, I get the following output:

451G Dropbox_backups

128G Lamarr

230G NAVEEN

152G NSM_backup

2.9M NSM_Nvidia

3.3G OLD_FILES_OF_LAMARR

849M Overlap_Ent_spec

3.4G Paper_backups

818M PRL_paper2

32G Projects

256K $RECYCLE.BIN

27M Seagates Files

11M spectral_density_codes

384K System Volume Information

8.3M Templates_of_working_codes

93M Test

3.8G WokingCode_and_data_ES

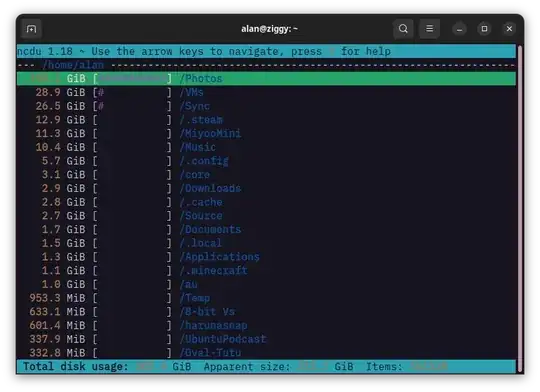

which does not come close to adding up to 1.8 TB. Some people suggested using ncdu, which gives the same result (shown below).

Results of ncdu:

I understand that df and du are not supposed to show exactly the same values, but I do not know what is taking up the space. I have seen answers where a similar problem occurred on an internal HDD and removing log files helped, but this drive has no such log files. I have also tried removing the .trash file. This is perplexing. I could move the data to another drive and reformat this one, but I want to understand what is happening.
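To put a number on the gap, here is a small sketch that adds up the du -hs figures listed above. It assumes du's K/M/G suffixes are binary units (powers of 1024), which is how du -h reports sizes; the helper names are just for illustration.

```python
# Sizes copied from the du -hs listing in this post.
DU_OUTPUT = """\
451G Dropbox_backups
128G Lamarr
230G NAVEEN
152G NSM_backup
2.9M NSM_Nvidia
3.3G OLD_FILES_OF_LAMARR
849M Overlap_Ent_spec
3.4G Paper_backups
818M PRL_paper2
32G Projects
256K $RECYCLE.BIN
27M Seagates Files
11M spectral_density_codes
384K System Volume Information
8.3M Templates_of_working_codes
93M Test
3.8G WokingCode_and_data_ES
"""

# Assumption: du -h suffixes are powers of 1024.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text):
    """Convert a du -h style size such as '3.3G' to bytes."""
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


def total_bytes(listing):
    """Sum the first column of a du -hs listing, in bytes."""
    return sum(
        parse_size(line.split(maxsplit=1)[0])
        for line in listing.splitlines()
        if line.strip()
    )


total = total_bytes(DU_OUTPUT)
print(f"du total: {total / 1024**3:.0f} GiB ({total / 1024**4:.2f} TiB)")
```

The directories sum to roughly 1005 GiB, a little under 1 TiB, so about 0.8 TiB of what df reports as used is not accounted for by du.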

Related information:

- Output of lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 1.5M 1 loop /snap/gnome-system-monitor/181

loop1 7:1 0 283.1M 1 loop /snap/brave/202

loop2 7:2 0 38.3M 1 loop /snap/okular/119

loop3 7:3 0 81.3M 1 loop /snap/gtk-common-themes/1534

loop4 7:4 0 49.9M 1 loop /snap/snapd/18357

loop5 7:5 0 2.6M 1 loop /snap/gnome-calculator/920

loop6 7:6 0 556K 1 loop /snap/gnome-logs/112

loop7 7:7 0 219M 1 loop /snap/gnome-3-34-1804/77

loop8 7:8 0 452.4M 1 loop /snap/gnome-42-2204/56

loop9 7:9 0 362.2M 1 loop /snap/telegram-desktop/4593

loop10 7:10 0 63.3M 1 loop /snap/core20/1778

loop11 7:11 0 91.7M 1 loop /snap/gtk-common-themes/1535

loop12 7:12 0 437.2M 1 loop /snap/kde-frameworks-5-98-qt-5-15-6-core20/9

loop13 7:13 0 187.7M 1 loop /snap/okular/115

loop14 7:14 0 22M 1 loop /snap/bashtop/504

loop15 7:15 0 63.3M 1 loop /snap/core20/1822

loop16 7:16 0 9.7M 1 loop /snap/htop/3605

loop17 7:17 0 446.3M 1 loop /snap/gnome-42-2204/44

loop18 7:18 0 49.8M 1 loop /snap/snapd/17950

loop19 7:19 0 22M 1 loop /snap/bashtop/502

loop20 7:20 0 476K 1 loop /snap/gnome-characters/781

loop21 7:21 0 7M 1 loop /snap/tex-match/6

loop22 7:22 0 436.3M 1 loop /snap/kde-frameworks-5-96-qt-5-15-5-core20/7

loop23 7:23 0 346.3M 1 loop /snap/gnome-3-38-2004/119

loop24 7:24 0 72.9M 1 loop /snap/core22/504

loop25 7:25 0 2.6M 1 loop /snap/gnome-system-monitor/178

loop26 7:26 0 219M 1 loop /snap/gnome-3-34-1804/72

loop27 7:27 0 704K 1 loop /snap/gnome-characters/741

loop28 7:28 0 272.4M 1 loop /snap/brave/197

loop29 7:29 0 55.6M 1 loop /snap/core18/2679

loop30 7:30 0 72.9M 1 loop /snap/core22/509

loop31 7:31 0 9.6M 1 loop /snap/htop/3417

loop32 7:32 0 2.5M 1 loop /snap/gnome-calculator/884

loop33 7:33 0 55.6M 1 loop /snap/core18/2667

loop34 7:34 0 696K 1 loop /snap/gnome-logs/115

loop35 7:35 0 362.1M 1 loop /snap/telegram-desktop/4578

loop36 7:36 0 4K 1 loop /snap/bare/5

loop37 7:37 0 323.5M 1 loop /snap/kde-frameworks-5-qt-5-15-core20/14

loop38 7:38 0 346.3M 1 loop /snap/gnome-3-38-2004/115

sda 8:0 0 931.5G 0 disk

├─sda1 8:1 0 14.9G 0 part [SWAP]

├─sda2 8:2 0 139.7G 0 part /

└─sda3 8:3 0 776.9G 0 part /home

sdc 8:32 0 1.8T 0 disk

└─sdc1 8:33 0 1.8T 0 part /media/abhishek/Backup Plus

sr0 11:0 1 1024M 0 rom

- The output of

sudo lsof | grep -c deleted

lsof: WARNING: can't stat() fuse.gvfsd-fuse file system /run/user/1001/gvfs

Output information may be incomplete.

lsof: WARNING: can't stat() fuse file system /run/user/1001/doc

Output information may be incomplete.

4624

- Output of

mount | grep 'media'

/dev/sdc1 on /media/abhishek/Backup Plus type exfat (rw,nosuid,nodev,relatime,uid=1001,gid=1001,fmask=0022,dmask=0022,iocharset=utf8,namecase=0,errors=remount-ro,uhelper=udisks2)