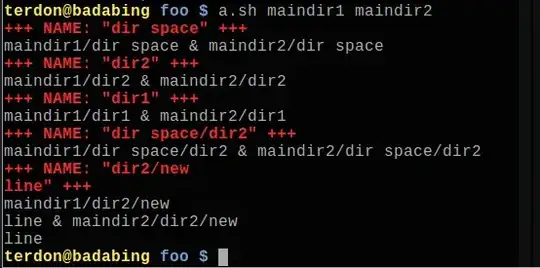

I know about rdfind, which can find duplicate files in two directories. But I need a similar utility that finds duplicate folders (folders that have the same name and the same path relative to the main directories) in two main directories. Is there any utility that does this simple task?

**Example:**

    $ tree
    .
    ├── maindir1
    │   ├── dir space
    │   │   ├── dir1
    │   │   └── dir2
    │   ├── dir1
    │   ├── dir2
    │   │   └── new\012line
    │   ├── dir3
    │   │   └── dir5
    │   └── dir4
    │       └── dir6
    ├── maindir2
    │   ├── dir space
    │   │   └── dir2
    │   ├── dir1
    │   ├── dir2
    │   │   └── new\012line
    │   ├── dir5
    │   │   └── dir6
    │   ├── dir6
    │   └── new\012line
    ├── file
    └── new\012line

NOTE: In the example above, the only duplicate folders at the first level (depth 1) are:

maindir1/dir space/ & maindir2/dir space/

maindir1/dir1/ & maindir2/dir1/

maindir1/dir2/ & maindir2/dir2/

At the second level (depth 2), the only duplicate folders are:

maindir1/dir space/dir2/ & maindir2/dir space/dir2/

maindir1/dir2/new\012line/ & maindir2/dir2/new\012line/

Please note that maindir1/dir3/dir5/ and maindir2/dir5/ are not duplicates, and neither are maindir1/dir4/dir6/ and maindir2/dir5/dir6/: the folder names match, but their paths relative to the main directories differ.
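To make the requirement concrete, here is a minimal Python sketch of the comparison I have in mind. It is only an illustration, not an existing utility: the function name `common_subdirs` is made up, and it assumes that "duplicate" means nothing more than "the same relative path exists as a directory under both main directories" (contents are not compared). Symlinks to directories are listed but not descended into.

```python
import os


def common_subdirs(root_a, root_b):
    """Return the sorted relative paths of directories found under both roots.

    Hypothetical helper: only names and relative paths are compared,
    never the contents of the directories.
    """
    def rel_dirs(root):
        found = set()
        # os.walk yields every directory below root; dirnames holds the
        # subdirectory names of the directory currently being visited.
        for current, dirnames, _filenames in os.walk(root):
            for name in dirnames:
                full = os.path.join(current, name)
                found.add(os.path.relpath(full, root))
        return found

    return sorted(rel_dirs(root_a) & rel_dirs(root_b))


if __name__ == "__main__":
    # repr() keeps names containing newlines readable on a single line.
    for rel in common_subdirs("maindir1", "maindir2"):
        print(repr(rel))
```

Because the paths are kept as Python strings rather than newline-separated text, names containing spaces or newlines (like `new\012line` above) need no special handling.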

rmlint -T dd. – Pablo Bianchi Sep 10 '17 at 20:41